2 CROSS-SECTIONAL TECHNOLOGIES

2.1 Edge Computing and Embedded Artificial Intelligence

2.1.1.1 Introduction

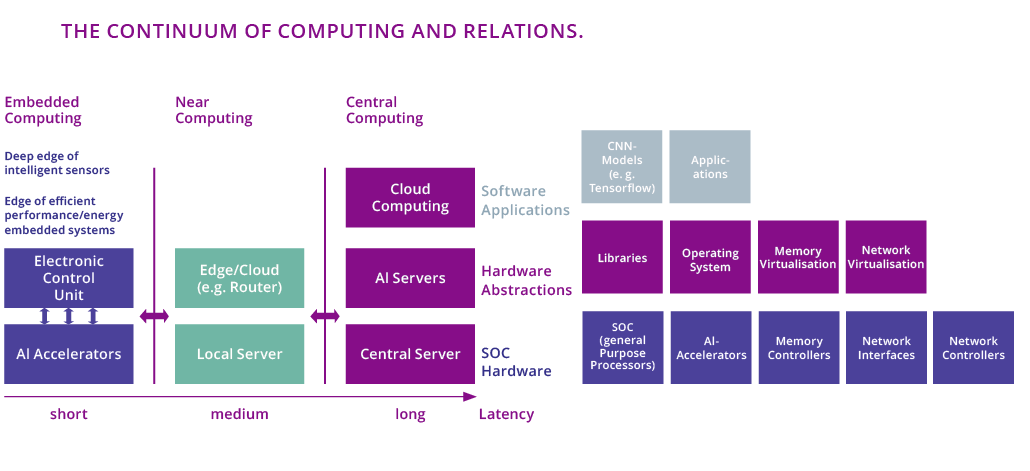

Our world is changing drastically with the deployment of digital technologies that provide ever-increasing performance and autonomy to existing and new applications at constant or decreasing cost, but that pose a major challenge in terms of energy consumption. Cyber-physical systems (CPS), in particular, place high demands on efficiency and latency. Distributed computing systems have diverse architectures and tend to form a continuum spanning the extreme edge, fog, mobile edge95 and cloud. Nowadays, many applications need computations to be carried out on spatially distributed devices, generally wherever it is most efficient. This trend includes edge computing and edge intelligence (e.g., Cognitive CPS, Intelligent Embedded Systems, Autonomous CPS), where raw data is processed close to the source to extract insight data as early as possible. This brings several benefits, such as reduced latency, bandwidth, power consumption and memory footprint, and increased security and data protection.

The introduction of Artificial Intelligence (AI) at the edge for data analytics brings important benefits for a multitude of applications. New advanced, efficient, and specialized processing architectures (based on CPUs, embedded GPUs, accelerators, neuromorphic computing, FPGAs and ASICs) are needed to increase edge computing performance by several orders of magnitude and to drastically reduce power consumption.

One of the mainstream uses of AI is to enable an easier and better interpretation of data (unstructured data such as image files, audio files, or environmental data) coming from the physical world. Being able to interpret data from the environment locally enables new applications such as autonomous vehicles. The use of AI at the edge will help automate complex and advanced tasks and represents one of the most important innovations introduced by the digital transformation. Important examples are its contribution to the recovery from the Covid-19 pandemic and its potential to ensure the required resilience in future crises96.

This Chapter focuses on computing components, and more specifically on embedded architectures, Edge Computing devices and systems using Artificial Intelligence at the edge. These elements rely on process technology and embedded software, and are subject to quality, reliability, safety, and security constraints. They also rely on system composition (systems of systems) and on design techniques and tools to fulfil the requirements of the various application domains.

Furthermore, this Chapter focuses on the trade-off between performance and power consumption, and on managing complexity (including security, safety, and privacy97) in embedded architectures for different application areas. These architectures will spread the use of Edge Computing and Artificial Intelligence and their contribution to European sustainability.

2.1.1.2 Positioning edge and cloud solutions

The centralized cloud computing model, including data analysis and storage for the increasing number of devices in a network, is limiting the capabilities of many applications, creating problems regarding interoperability, latency and response time, connectivity, privacy, and data processing.

Another issue is dependability: centralization creates the risk of data being unavailable to different applications, entails a large cost in energy consumption, and concentrates solutions in the hands of a few cloud providers, which raises concerns related to data security and privacy.

The increasing number of intelligent IoT devices provides new opportunities for enterprise data management. As applications and services move toward the edge, most of the IoT data generated and processed by enterprises could be processed at the edge rather than in the traditional centralized data centre in the cloud.

Edge Computing enhances the features and capabilities (e.g., real-time) of IoT applications and of the embedded and mobile processor landscape by performing data analytics on high-performance circuits using AI/ML techniques and embedded security. Edge computing allows the development of real-time applications, since processing is performed close to the data source. It can also reduce the amount of transmitted data by transforming a large amount of raw data into a small amount of insight data, decreasing communication bandwidth and data storage requirements while also improving security, privacy and data protection, and reducing energy consumption. Moreover, edge computing provides mechanisms for distributing data and computing, making IoT applications more resilient to malicious events. Edge computing can also provide distributed deployment models that offer more efficient connectivity and lower latency, solve bandwidth constraints, and provide higher and more "specialized" processing power and storage embedded at the network's edge. Other benefits are scalability, ubiquity, flexibility, and lower cost.
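To make the idea of turning a large amount of raw data into a small amount of insight data more concrete, the following minimal sketch reduces a window of raw sensor samples to three summary features before transmission and computes the resulting data-volume reduction. The 4-byte sample encoding, the one-second window and the choice of features (mean, RMS, peak) are illustrative assumptions, not figures from this Chapter.

```python
import math
import struct

# Illustrative assumption: each raw sample is a 32-bit float (4 bytes).
BYTES_PER_SAMPLE = 4


def summarize_window(samples):
    """Reduce a window of raw sensor samples to a few 'insight' features."""
    n = len(samples)
    mean = sum(samples) / n
    rms = math.sqrt(sum(x * x for x in samples) / n)
    peak = max(abs(x) for x in samples)
    return {"mean": mean, "rms": rms, "peak": peak}


def encode_features(features):
    """Pack the three features as little-endian 32-bit floats for upstream transmission."""
    return struct.pack("<3f", features["mean"], features["rms"], features["peak"])


def reduction_ratio(window_len):
    """Ratio between the raw bytes of one window and the bytes actually sent."""
    raw_bytes = window_len * BYTES_PER_SAMPLE
    sent_bytes = struct.calcsize("<3f")  # 12 bytes
    return raw_bytes / sent_bytes


if __name__ == "__main__":
    # Synthetic 50 Hz sine sampled at 1 kHz for one second (1000 samples).
    window = [math.sin(2 * math.pi * 50 * t / 1000) for t in range(1000)]
    payload = encode_features(summarize_window(window))
    print(len(payload), "bytes sent instead of", len(window) * BYTES_PER_SAMPLE)
    print("reduction ratio:", reduction_ratio(len(window)))
```

Which features count as "insight" is application-specific; the point of the sketch is only that the transmitted volume scales with the number of features rather than with the sampling rate.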

In this Chapter, Edge Computing is described as a paradigm that can be implemented using different architectures built to support a distributed infrastructure for processing data (data, image, voice, etc.) as close as possible to the points of collection (data sources) and utilization. In this context, the distributed edge computing paradigm provides computing capabilities to the nodes and devices at the edge of the network (or edge domain) to improve performance (energy efficiency, latency, etc.), operating cost, and the reliability of applications and services, and to contribute significantly to the sustainability of the digitalization of European society and economy. Edge computing performs data analysis by minimizing the distance between nodes and devices and reducing dependence on the centralized resources that serve them, while minimizing network hops. Edge computing capabilities include a consistent operating approach across diverse infrastructures, the ability to operate in a distributed environment and to deliver computing services to remote locations, application integration, and orchestration. Edge computing also adapts service delivery requirements to hardware performance and develops AI methods to address applications with low-latency and varying data-rate requirements, in systems typically subject to hardware limitations, cost constraints, and limited or intermittent network connections.

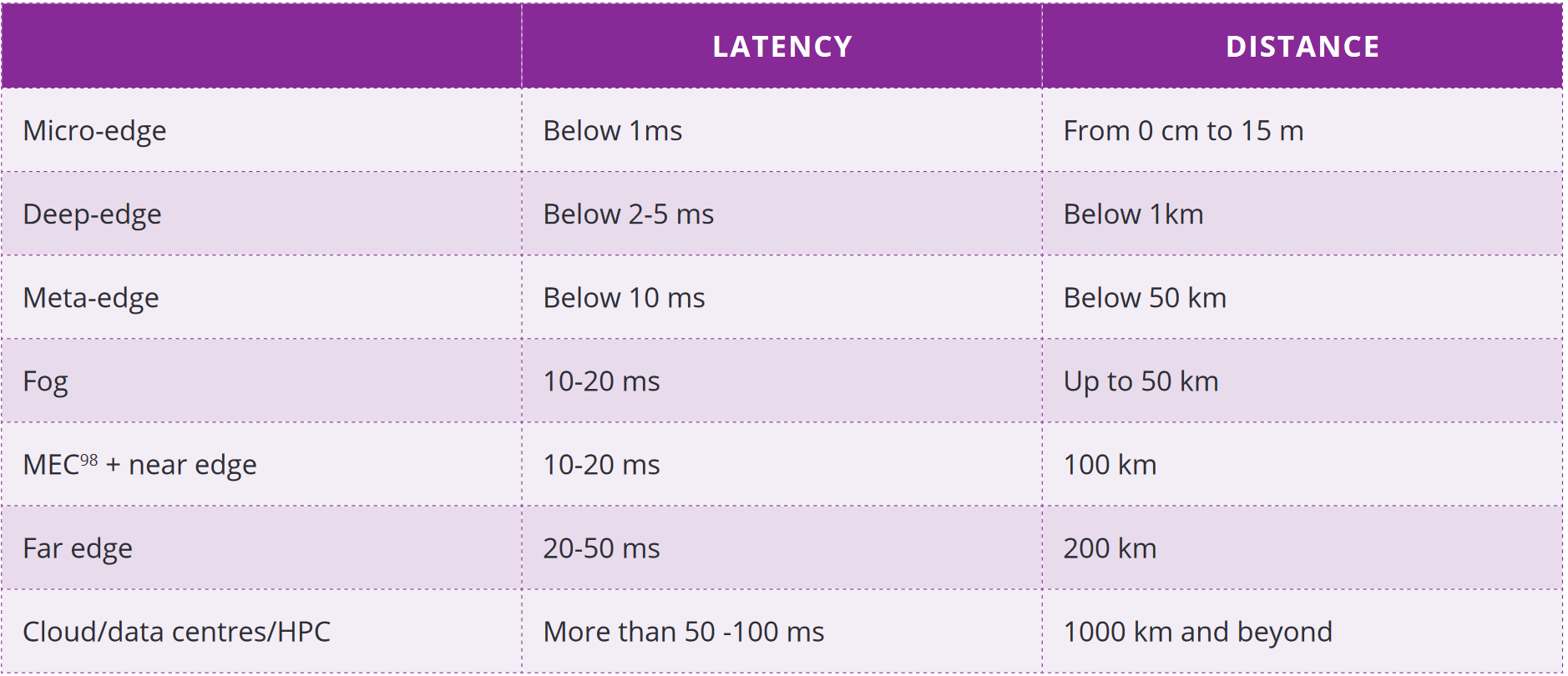

For intelligent embedded systems, the edge computing concept is reflected in the definition of edge computing levels (micro, deep and meta, explained in the next paragraphs) that cover the computing and intelligence continuum from sensors/actuators, processing units, controllers, gateways and on-premises servers to the interface with multi-access, fog, and cloud computing.

A description of the micro, deep and meta edge concepts is provided in the following paragraphs (as proposed by the AIoT community).

The micro-edge describes intelligent sensors, machine vision, and IIoT devices that generate insight data and are implemented using microcontrollers built around processor architectures such as ARM Cortex-M4 or, more recently, RISC-V, which focus on minimizing cost and power consumption. The distance from the data source measured by the sensors is minimized. The compute resources process this raw data in line and produce insight data with minimal latency. The micro-edge hardware devices (physical sensors/actuators) generate insight data from raw data and/or act on physical objects, by integrating AI-based elements into these devices and running AI-based techniques for inference and self-training.

The intelligent micro-edge allows real-time IoT applications to become ubiquitous and merge into the environment, where various IoT devices can sense their surroundings and react quickly and intelligently with excellent energy efficiency. Integrating AI capabilities into IoT devices significantly enhances their functionality, both by introducing entirely new capabilities and, for example, by replacing accurate algorithmic implementations of complex tasks with AI-based approximations that are easier to embed. Overall, this can improve performance and reduce latency and power consumption while increasing the devices' usefulness, especially when the full power of these networked devices is harnessed, a trend called AI on edge.

The deep edge comprises intelligent controllers (PLCs), SCADA elements, connected machine vision embedded systems, networking equipment, gateways and computing units that aggregate data from the sensors/actuators of the IoT devices generating data. Deep edge processing resources are implemented with high-performance processors and microcontrollers such as Intel i-series, Atom, ARM M7+, etc., including CPUs, GPUs, TPUs, and ASICs. The system architecture, including the deep edge, depends on the envisioned functionality and deployment options, considering that the cores of these devices are controllers: PLCs and gateways with cognitive capabilities that can acquire, aggregate, understand and react to data, and exchange and distribute information.

The meta-edge integrates processing units, typically located on-premises, implemented with high-performance embedded computing units, edge machine vision systems, edge servers (e.g., high-performance CPUs, GPUs, FPGAs, etc.) that are designed to handle compute-intensive tasks, such as processing, data analytics, AI-based functions, networking, and data storage.

This classification is closely related to the distance between the data source and the data processing, which impacts overall latency. A rough, high-level estimate of the communication latency and the distance from the data sources is as follows:

- Micro-edge: latency below 1 millisecond (ms), distance from zero to at most 15 metres (m).

- Deep edge: latency below 2-5 ms, distance under 1 km.

- Meta edge: latency under 10 ms, distance under 50 km.

- Beyond 50 km, fog computing and MEC concepts are combined with the near edge (10-20 ms, around 100 km) and the far edge (20-50 ms, around 500 km).

- Cloud and data centres: more than 50 ms and more than 1000 km.
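The rough latency figures above can be read as a simple lookup that maps an application's end-to-end latency budget to the tier farthest from the data source that can still meet it. The minimal sketch below takes the upper bound of each quoted range (5 ms for the deep edge, 20 ms for the near edge, 50 ms for the far edge); treating these rough estimates as hard thresholds, and the function name, are assumptions for illustration only.

```python
# Rough upper latency bounds (ms) and distances (km) per tier, taken from the
# high-level estimates in the text; real deployments will differ.
TIERS = [
    ("micro-edge", 1.0, 0.015),
    ("deep edge", 5.0, 1.0),
    ("meta edge", 10.0, 50.0),
    ("near edge", 20.0, 100.0),
    ("far edge", 50.0, 500.0),
]


def farthest_tier(max_latency_ms):
    """Return the tier farthest from the data source whose latency bound still
    fits the budget. Budgets above 50 ms can be served by the cloud."""
    if max_latency_ms <= 0:
        raise ValueError("latency budget must be positive")
    if max_latency_ms > TIERS[-1][1]:
        return "cloud / data centre"
    # Sub-millisecond budgets can only be approached at the micro-edge.
    chosen = TIERS[0][0]
    for name, bound_ms, _distance_km in TIERS:
        if bound_ms <= max_latency_ms:
            chosen = name
    return chosen


if __name__ == "__main__":
    for budget in (0.5, 7, 30, 100):
        print(budget, "ms ->", farthest_tier(budget))
```

Choosing the farthest feasible tier reflects the usual trade-off: tiers farther from the sensor offer more compute and storage, so the placement moves only as close to the data source as the latency budget requires.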

Thanks to their flexibility to adapt to specific needs, deployments "at the edge" can provide more energy-efficient processing solutions by integrating various types of computing architectures at the edge (e.g., neuromorphic, energy-efficient microcontrollers, AI processing units), and can reduce data traffic, data storage and the carbon footprint. One way to reduce energy consumption is to know which data is collected and why, which targets are to be achieved, to optimize all levels of processing, at both hardware and software level, to achieve those targets, and finally to evaluate the energy consumed to process the data. Furthermore, edge computing reduces latency and relieves bandwidth constraints on the communication network by processing data locally and distributing computing resources, intelligence, and software stacks among the computing network nodes and between the edge and the centralized cloud and data centres.

In general, the edge (at the periphery of a global network such as the Internet) includes compute, storage, and networking resources at the different levels described above, which may be shared by several users and applications using various forms of virtualization and abstraction of the resources, including standard APIs to support interoperability.

More specifically, an edge node covers the edge computing, communication, and data analytics capabilities that make it smart/intelligent. An edge node is built around the computing units (CPUs, GPUs/FPGAs, ASICs platforms, AI accelerators/processing), communication network, storage infrastructure and the applications (workloads) that run on it.

The edge can scale to many nodes distributed in distinct locations, where the location and identity of the access links are essential. In edge computing, all nodes can be dynamic. They are physically separated and connected to each other by wireless/wired links in topologies such as mesh. Edge nodes can function at remote locations and operate semi-autonomously using remote management and administration tools.

Edge nodes are optimized for energy, connectivity, size and cost, and their computing resources are constrained by these parameters. In various application cases, edge computing must be isolated from cloud data centres to limit interference from the cloud domain and its impact on edge services.

Finally, the edge computing concept supports a dynamic pool of distributed nodes, using communication on partially unreliable network connections while distributing the computing tasks to resource-constrained nodes across the network.
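As one possible illustration of distributing computing tasks over a dynamic pool of resource-constrained nodes, the sketch below uses a simple greedy heuristic: larger tasks are placed first, each on the reachable node with the most remaining capacity, and tasks that fit nowhere are deferred (for example, to the cloud). The `EdgeNode` model, the abstract capacity units and the heuristic itself are hypothetical; the Chapter does not prescribe a scheduling algorithm.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeNode:
    """Hypothetical model of an edge node in a dynamic pool."""
    name: str
    capacity: float          # abstract compute units still available
    reachable: bool = True   # links may be intermittent, so a node can drop out
    assigned: list = field(default_factory=list)


def assign_tasks(tasks, nodes):
    """Greedily place (task_name, cost) pairs on reachable nodes.

    Tasks are processed from largest to smallest cost; each goes to the
    reachable node with the most remaining capacity that can still hold it.
    Returns the names of tasks that could not be placed (deferred).
    """
    deferred = []
    for task_name, cost in sorted(tasks, key=lambda t: t[1], reverse=True):
        candidates = [n for n in nodes if n.reachable and n.capacity >= cost]
        if not candidates:
            deferred.append(task_name)
            continue
        best = max(candidates, key=lambda n: n.capacity)
        best.capacity -= cost
        best.assigned.append(task_name)
    return deferred


if __name__ == "__main__":
    pool = [EdgeNode("gateway", 10), EdgeNode("camera", 4), EdgeNode("plc", 8, reachable=False)]
    jobs = [("vision", 6), ("filter", 3), ("train", 20), ("log", 1)]
    print("deferred:", assign_tasks(jobs, pool))
    for node in pool:
        print(node.name, node.assigned)
```

A real orchestrator would also weigh energy, latency and link quality, and would re-run placement when nodes join or leave the pool; the greedy pass only shows the basic shape of the problem.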

2.1.1.3 Positioning Embedded Artificial Intelligence

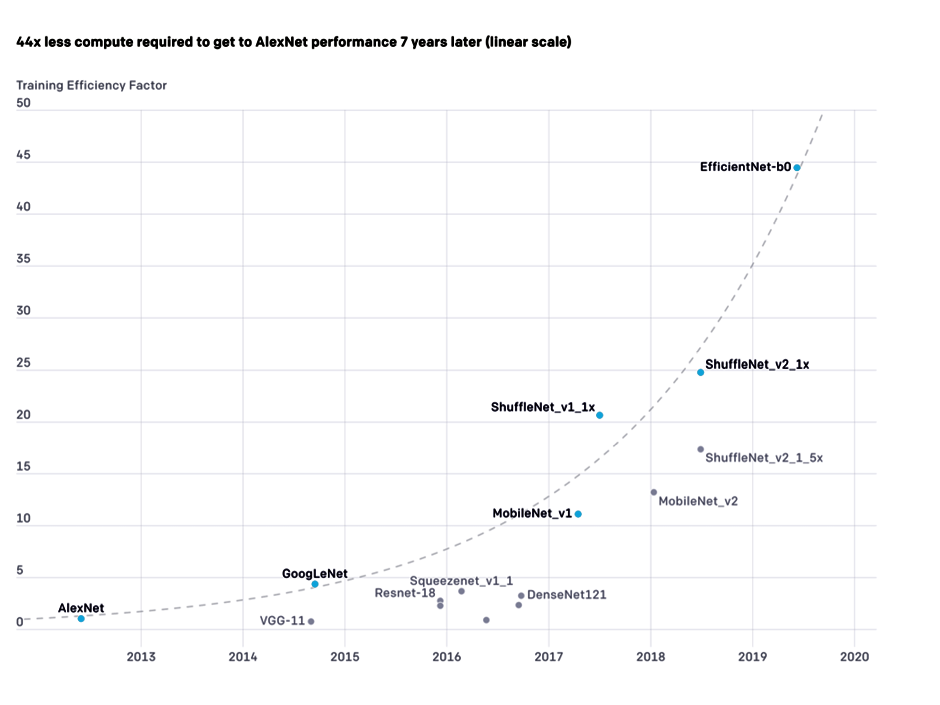

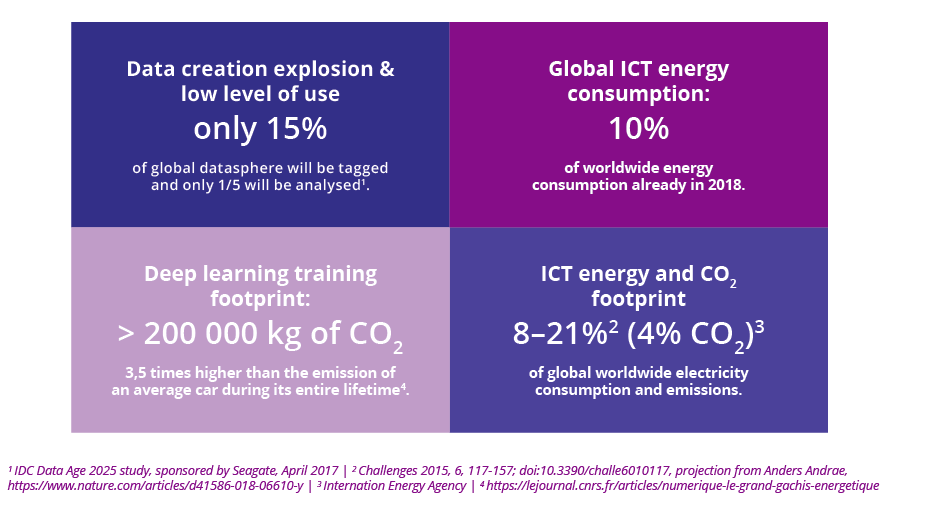

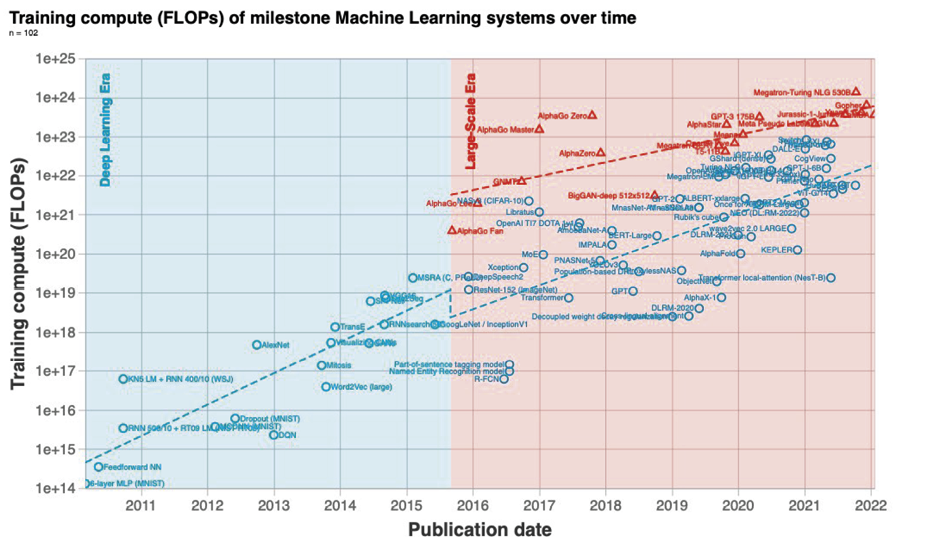

Thanks to the fast development of Machine Learning during the last decade, Artificial Intelligence is nowadays widely used. However, it demands huge quantities of data, especially for supervised learning using Deep Learning techniques, to achieve accurate results. Depending on application complexity, deep neural network architectures are becoming increasingly complex and demanding in terms of computation time. As a result of AI's huge success, its pervasive deployment and its computing costs, worldwide energy consumption will increase dramatically, to levels that will be unsustainable in the near future. However, for similar performance, computing and storage needs tend to decrease over time thanks to more efficient algorithms and various quantization and pruning techniques. Complex tasks such as voice recognition, which required models of 100 GB in the cloud, have now been reduced to less than half a gigabyte and can run on local devices such as smartphones.
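The quantization and pruning techniques mentioned above can be illustrated with a minimal pure-Python sketch: symmetric per-tensor int8 post-training quantization (one float scale per tensor, weights stored as 8-bit integers, i.e. about 4x less memory than 32-bit floats) and magnitude pruning, which zeroes the smallest weights. Production toolchains use more elaborate schemes (per-channel scales, calibration data, fine-tuning after pruning); the functions below are illustrative only.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 values and the tensor scale."""
    return [scale * v for v in q]


def magnitude_prune(weights, sparsity):
    """Set the fraction `sparsity` of weights with the smallest magnitude to zero."""
    k = int(len(weights) * sparsity)
    pruned = list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    w = [0.5, -1.27, 0.01, 0.9]
    q, s = quantize_int8(w)
    print("int8 values:", q, "scale:", s)
    print("max reconstruction error:", max(abs(a - b) for a, b in zip(w, dequantize(q, s))))
    print("50% pruned:", magnitude_prune(w, 0.5))
```

Rounding to the nearest integer bounds the per-weight reconstruction error by half the scale, which is why a single well-chosen scale often preserves accuracy for inference at the edge.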

Artificial Intelligence is a very efficient tool for several applications (e.g., image recognition and classification, natural language understanding, complex manufacturing optimization, supply chain improvements, etc.) where pattern detection and process optimization can be applied.

As a side effect, data collection is exploding, with highly heterogeneous data coming from numerous and very diverse sensors. Moreover, the bandwidth connecting data centres is limited, and not all data needs to be processed in the Cloud.

Naturally, systems are evolving from a centralized to a distributed architecture. Artificial Intelligence is then a crucial element that allows smooth and optimized operation of distributed systems. It is therefore increasingly embedded in the various network nodes, even down to the very edge.

Such a powerful tool allows Edge Computing to process data locally more efficiently, while also minimizing the data that must be transmitted to upper network nodes. Another advantage of Embedded Artificial Intelligence is its capacity to self-learn and adapt to the environment through the data collected. Today's learning techniques are still mostly based on supervised learning, but semi-supervised, self-supervised, unsupervised, and federated learning techniques are being developed.
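Federated learning keeps raw data on the devices and exchanges only model parameters. The minimal sketch below shows the aggregation step of Federated Averaging (FedAvg), in which a coordinator averages client parameter vectors weighted by each client's number of local samples. Local training is omitted, and representing a model as a flat list of floats is a simplifying assumption.

```python
def federated_average(client_updates):
    """Aggregate client models as in FedAvg.

    client_updates: list of (parameters, num_samples), where parameters is a
    flat list of floats of the same length for every client.
    Returns the sample-weighted average of the parameter vectors.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    global_params = [0.0] * dim
    for params, n in client_updates:
        if len(params) != dim:
            raise ValueError("all clients must share the same model shape")
        weight = n / total
        for i, p in enumerate(params):
            global_params[i] += weight * p
    return global_params


if __name__ == "__main__":
    # Two hypothetical edge devices with 100 and 300 local samples.
    print(federated_average([([1.0, 2.0], 100), ([3.0, 6.0], 300)]))
```

Weighting by sample count means devices that saw more data have proportionally more influence on the global model, while no raw sample ever leaves its device.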

At the same time, semiconductor technologies, hardware architectures, algorithms and software are being developed and industrialized to reduce memory size, data processing time and energy consumption, making Embedded AI an important pillar of Edge Computing. Tools for Embedded AI are also evolving rapidly, leading to faster and easier implementation at all levels of the network.

2.1.1.4 Scope of the Chapter

The scope of this Chapter is to cover hardware architectures and their realizations (Systems-on-Chip, embedded architectures), mainly for edge and “near the user” devices such as IoT devices, cars, ICT for factories, and local processing and servers. Data centres and electronic components for data centres are not the focus of the Chapter, except when the components can be used in local processing units or local servers (local clouds, swarm, fog computing, etc.). We therefore also cover this “edge” side of the “continuum of computing” and its synergies with the cloud. Hardware for HPC centres is also not the focus, even though the technologies developed for HPC systems are often found in high-end embedded systems a few years (or even decades) later. Each Section of this Chapter is split into two sub-Sections, from the generic to the more specific:

- Generic technologies for compute, storage, and communication (generic Embedded architectures technologies) and technologies that are more focused towards edge computing.

- Technologies focused on devices using Artificial Intelligence techniques (at the edge).

The technological aspects at system level (PCB, assembly, system architecture, etc.), as well as embedded and application software, are not part of this Chapter, as they are covered in other Chapters.

Therefore, this Chapter mainly covers the elements foreseen to be used to compose AI or Edge systems:

- Processors with high energy efficiency,

- Accelerators (for AI and for other tasks, such as security),

- DPUs (Data Processing Units, e.g., logging and collecting information for automotive and other systems) that process data early (decreasing the load on processors/accelerators),

- Memories and associated controllers, specialized for low power and/or for processing data locally (e.g., using non-volatile memories such as PCRAM, CBRAM, MRAM for synaptic functions, and In/Near Memory Computing), etc.,

- Power management.

Of course, all the elements to build a SoC are also necessary, but not specifically in the scope of this Chapter:

- Security infrastructure (e.g., Secure Enclave) with a placeholder for customer-specific secure elements (PUF, cryptographic IPs, etc.). Security requirements are dealt with in detail in the corresponding Chapter.

- Field connectivity IPs of all kinds (wired, wireless, optical), ensuring interoperability (see the connectivity Chapter; the focus here is on field connectivity).

- Integration using chiplets and interposer interfacing units, which will be detailed in the technology Chapter.

- And all other elements such as coherent cache infrastructure for many-cores, scratchpad memories, smart DMA, NoC with on-chip interfaces at router level to connect cores (coherent), memory (cache or not) and IOs (IO coherent or not), SerDes, high speed peripherals (PCIe controllers and switches, etc.), trace and debug hardware and low/medium speed peripherals (I2C, UART, SPI, etc.).

However, the Chapter will not detail the challenges for each of these elements, but only the generic challenges, grouped into 1) Edge Computing and 2) Embedded Artificial Intelligence domains. In a nutshell, the main recommendation is a paradigm shift towards distributed low-power architectures/topologies:

- Distributed computing,

- and AI using distributed computing, leading to distributed intelligence.

2.1.1.5 State of the Art

This paragraph gives an overview of the role that AI and embedded intelligence play in sustainable development, of the market perspectives for AI components, and of some semiconductor companies providing components and key IPs.

Impact of AI and embedded intelligence in sustainable development

AI, and particularly embedded intelligence, with its ubiquity and a level of integration high enough for it “to disappear” in the environment (ambient intelligence), is significantly influencing many aspects of our daily life, our society, the environment, the organizations in which we work, etc. AI is already affecting several heterogeneous and disparate sectors, such as companies’ productivity99, environmental areas like natural resources and biodiversity preservation100, society in terms of gender discrimination and inclusion101, 102, and smarter transportation systems103, just to mention a few examples. The adoption of AI in these sectors is expected to generate both positive and negative effects on the sustainability of AI itself, of the solutions based on AI and of their users104 105. These effects are difficult to assess extensively, and to date there is no comprehensive analysis of their impact on sustainability. A recent study106 has tried to fill this gap, analyzing AI from the perspective of the 17 Sustainable Development Goals (SDGs) and 169 targets internationally agreed in the 2030 Agenda for Sustainable Development107. The study finds that AI can enable the accomplishment of 134 targets, but may also inhibit 59 targets in the areas of society, education, health care, green energy production, sustainable cities, and communities.

From a technological perspective, AI sustainability depends in the first instance on the availability of new hardware108 and software technologies. From the application perspective, automotive, computing and healthcare are driving the large demand for AI semiconductor components and, depending on the application domain, for components for embedded intelligence and edge AI. This is well illustrated by car factories put on hold because of the current shortage of electronic components. Research and industry organizations are trying to provide new technologies that lead to sustainable solutions by redefining traditional processor architectures and memory structures. As seen earlier, near-memory or in-memory computing can lead to highly parallel and efficient processing that supports sustainability.

The second important component of AI that impacts sustainability concerns software and involves the engineering tools adopted to design and develop AI algorithms, frameworks, and applications. The majority of AI software and engineering tools adopt an open-source approach to ensure performance, lower development costs, shorter time to market, more innovative solutions, higher design quality and software engineering sustainability. However, the entire European community should contribute to and share the engineering effort aimed at reducing costs, improving the quality and variety of the results, increasing the security and robustness of the designs, supporting certification, etc.

The report on “Recommendations and roadmap for European sovereignty on open-source hardware, software and RISC-V Technologies109” covers these aspects in more detail.

Sustainability through open technologies also extends to open data, rules engines110 and libraries. The publication of open data and datasets is facilitating the work of researchers and developers in ML and DL, with numerous image, audio and text databases used to train models and serving as benchmarks111. Reusable open-source libraries112 help solve recurrent development problems, hiding technical details and simplifying access to AI technologies for developers and SMEs, while maintaining high-quality results and reducing time to market and costs.

Ultimately, open-source initiatives (being numerous, heterogeneous, and based on different technologies) provide a rich set of potential solutions, allowing the most sustainable one to be selected depending on the vertical application. At the same time, open source is a strong attractor for application developers, as it gathers their efforts around the same kind of solutions for given use cases, democratizes those solutions and speeds up their development. However, some initiatives should be developed at European level to create a common framework for easily developing different types of AI architectures (CNN, ANN, SNN, etc.). Such an initiative should follow the example of GAMAM (Google, Amazon, Meta, Apple, Microsoft), which have clearly understood the value of open source and elaborated business models in line with it, representing a sustainable development approach to support their frameworks113. It should be noted that open-source hardware should cover not only processors and accelerators, but also all the infrastructure IPs required to create embedded architectures, and should ensure that all IPs are interoperable and well documented, are delivered with a verification suite, and are constantly maintained to keep up with errata from the field and to incorporate newer requirements. The availability of automated SoC composition solutions, which build embedded architecture designs from IP libraries in a turnkey fashion, is also a desired feature to quickly turn innovation into PoCs (Proofs of Concept) and to bring productivity gains and shorter time-to-market for industrial projects.

The extended GAMAM and the BAITX also have the large in-house databases required for training, as well as the necessary computing facilities. In addition, almost all of them are developing their own chips for DL (e.g., Google with its line of TPUs) or have announced that they will. The US and Chinese governments have also started initiatives in this field to ensure that they remain prominent players, making it a highly competitive domain.

It will be a challenge for Europe to excel in this race, but the emergence of AI at the edge and Europe's know-how in embedded systems might be winning factors. However, competition is fierce, the major players have large budgets, and Europe must act quickly, because US and Chinese companies are also already moving in this "intelligence at the edge" direction (e.g., with the Intel Compute Stick, Google's Edge TPU, Nvidia's Jetson Nano and Xavier, and multiple start-ups in both the US and China).

Recently, attention to identifying sustainable computing solutions in modern digitalization processes has increased significantly. Climate change and initiatives like the European Green Deal114 are raising awareness of sustainability topics, highlighting the need to always consider the impact of technology on our planet, which has a delicate equilibrium with limited natural resources115. Today's computing approaches, such as cloud computing, are among the technologies that could potentially lead to unsustainable impacts. A recent study116 has clearly confirmed the important role that edge computing plays for sustainability but, at the same time, highlighted the need for greater emphasis on sustainability, remarking that “research and development should include sustainability concerns in their work routine” and that “sustainable developments generally receive too little attention within the framework of edge computing”. The study identifies three sustainability dimensions (societal, ecological, and economical) and proposes a roadmap for sustainable edge computing development in which the three dimensions are addressed in terms of security/privacy, real-time aspects, embedded intelligence and management capabilities.

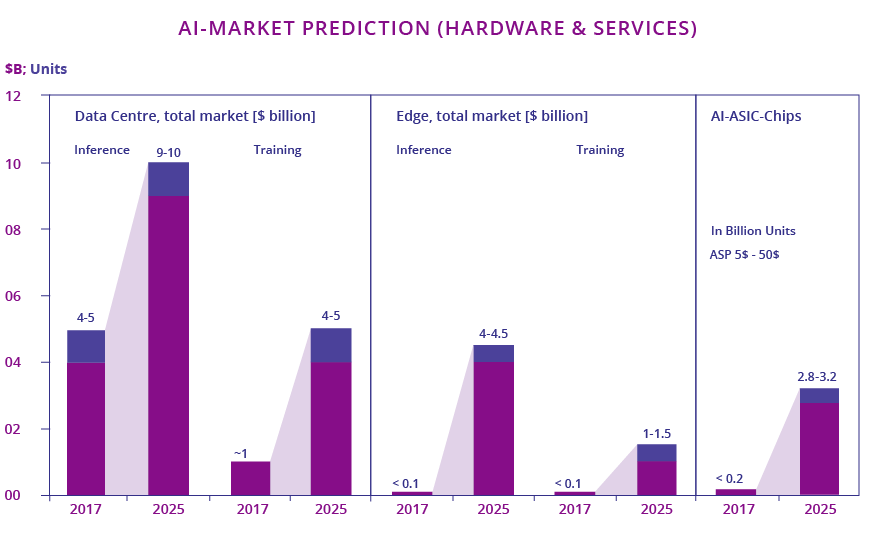

Market perspectives

Several market studies, although they do not give the same values, show huge market prospects for AI in the coming years.

According to ABI Research, 1.2 billion devices capable of on-device AI inference are expected to be shipped in 2023, 70% of them mobile devices and wearables. The market for ASICs responsible for edge inference is expected to reach US$4.3 billion by 2024, including embedded architectures with integrated AI chipsets, discrete ASICs, and hardware accelerators.

PwC, for its part, expects the market for AI-related semiconductors to grow to more than US$30 billion by 2022. The market for semiconductors powering inference systems will likely remain fragmented because potential use cases (e.g., facial recognition, robotics, factory automation, autonomous driving, and surveillance) will require tailored solutions. By comparison, training systems will be based primarily on traditional CPU, GPU and FPGA infrastructures and ASICs.

According to McKinsey, AI-related semiconductors could account for almost 20 percent of all semiconductor demand by 2025, which would translate into about US$65 billion in revenue, with opportunities emerging both in data centres and at the edge.

According to a recent study, the global AI chip market was estimated at USD 9.29 billion in 2019 and is expected to grow to USD 253.30 billion by 2030, with a CAGR of 35.0% over 2020-2030.
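As a quick consistency check of these figures, and assuming that growth compounds over the 11 years from the 2019 base value to 2030, the implied compound annual growth rate is:

```latex
\mathrm{CAGR} = \left(\frac{V_{2030}}{V_{2019}}\right)^{1/11} - 1
             = \left(\frac{253.30}{9.29}\right)^{1/11} - 1
             \approx 27.27^{1/11} - 1 \approx 0.351
```

This agrees with the quoted CAGR of about 35%.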

AI components vendors

In the next few years, hardware will serve as a differentiator in AI, and AI-related components will constitute a significant portion of future demand across different applications.

Qualcomm has launched the fifth-generation Qualcomm AI Engine, composed of the Qualcomm Kryo Central Processing Unit (CPU), the Adreno Graphics Processing Unit (GPU), and the Hexagon Tensor Accelerator (HTA). Developers can use the CPU, GPU, or HTA in the AI Engine to run their AI workloads. Qualcomm also launched the Qualcomm Neural Processing Software Development Kit (SDK) and Hexagon NN Direct to facilitate the quantization and deployment of AI models directly on the Hexagon 698 Processor.

Huawei and MediaTek incorporate their embedded architectures into IoT gateways and home entertainment, while Xilinx has found its niche in machine vision through its Versal ACAP SoC. NVIDIA has advanced developments based on its GPU architecture, for example with the NVIDIA Jetson AGX platform, a high-performance SoC that features a GPU, an ARM-based CPU, DL accelerators and image signal processors. NXP and STMicroelectronics have begun adding AI HW accelerators and enablement SW to several of their microprocessors and microcontrollers.

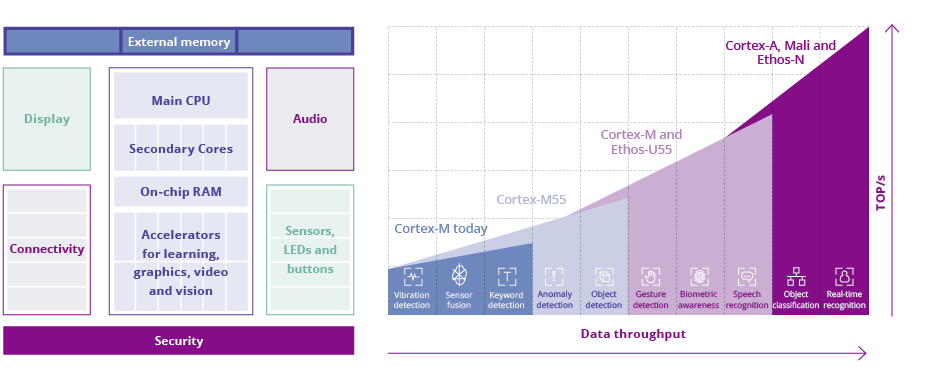

ARM is developing the new Cortex-M55 core for machine learning applications, to be used in combination with the Ethos-U55 AI accelerator. Both are designed for resource-constrained environments. The new ARM cores are designed for custom extensions and for ultra-low-power machine learning.

Open-source hardware, championed by RISC-V, will bring forth a new generation of open-source chipsets designed for specific ML and DL applications at the edge. French start-up GreenWaves is one of the European companies using RISC-V cores to target the ultra-low-power machine learning space. Its GAP8 and GAP9 devices use 8- and 9-core compute clusters, and their custom extensions give the cores a 3.6x improvement in energy consumption compared with unmodified RISC-V cores.

Driven by Moore's Law over the last 40 years117, computing and communication have brought important benefits to society. Putting complex computation in the hands of users, together with hyper-connectivity, has been the source of significant innovations and productivity improvements, with a significant cost reduction for consumer products at a global level, including products with high electronic content, traditional products (e.g., medical and machinery products) and value-added services.

Computing is at the heart of a wide range of fields, controlling most of the systems with which humans interact. It enables transformational science (climate, combustion, biology, astrophysics, etc.), scientific discovery and data analytics. But the advent of Edge Computing and of AI at the edge, enabling fully or partially autonomous cyber-physical systems, requires tremendous improvements in the understanding of semantics and use-case knowledge, and new computing solutions to manage it. Even if deeply hidden, these computing solutions directly or indirectly affect our way of life: consider, for example, their key role in solving the societal challenges listed in the application Chapters, in optimizing industrial process costs, and in enabling the creation of cheaper products (e.g., delocalized healthcare).

They will also enable synergies between domains: for example, self-driving vehicles with higher reliability and predictability will directly benefit medical systems; consumer smart bracelets or smart watches for lifestyle monitoring reduce the impact of health problems118, with a positive impact on healthcare system costs; and first-aid and insurance services become simpler and more effective thanks to car location and remote-control functionalities.

These computing solutions bring both security improvements and new threats. Edge Computing allows better protection of personal data, since the data is stored and processed only locally, which helps ensure the privacy rights required by the GDPR. At the same time, easy access to the devices and new techniques such as AI give hackers a unique opportunity to develop new attacks. It is therefore paramount to find interdisciplinary trusted computing solutions and to develop appropriate countermeasures to protect them against attacks. For example, Industry 4.0 and the forthcoming Industry 5.0119 require more decentralized architectures, new infrastructures and new computational models that support a high level of synchronization and cooperation between manufacturing processes. They demand resource optimization and determinism that cannot be provided by solutions relying on “distant” cloud platforms or data centres120, and they must ensure low-latency data analysis, which is extremely important for industrial applications121.

These computing solutions must also consider the human in the loop: especially with AI, solutions ensuring a seamless connection between humans and machines will be a key factor. Finally, a key challenge is to keep the environmental impact of these computing solutions under control, to ensure the sustainability and competitiveness of European industry.

The following figure illustrates a selection of the challenges and the expected market trends of Edge Computing and AI at the edge:

AI radically improves the intelligence that microelectronics brings to products and could unlock a completely new spectrum of applications and business models. Technological progress in microelectronics has increased the complexity of microelectronic circuits by a factor of 1000 over the last 10 years alone, with billions of transistors integrated on a single microchip. AI is therefore a logical step forward from current microelectronic control units, and its introduction will significantly shape and transform all vertical applications in the next decade.

AI and Edge Computing have become core technologies for the digital transformation and for driving a sustainable economy. AI will make it possible to analyse data at the level of cognitive reasoning and to take decisions locally at the edge (embedded artificial intelligence), transforming the Internet of Things (IoT) into the Artificial Intelligence of Things (AIoT). Likewise, control and automation tasks that are traditionally carried out on centralized computer platforms will shift to distributed computing devices, making use of, e.g., decentralized control algorithms. Edge computing and embedded intelligence will significantly reduce the energy consumed by data transmission, save resources in key domains of Europe’s industrial systems, improve the efficient use of natural resources, and contribute to the sustainability of companies.

Technologies enabling low-power solutions are almost here. What is now key is to integrate these solutions as close as possible to the sensors, where the data is produced.

The key issues for the digital world are the availability of affordable computing resources and the transfer of data to the computing node within an acceptable power budget. Computing systems are morphing from classical computers with a screen and a keyboard to smartphones and to systems deeply embedded in the fabric of things. This revolution in how we interact with machines is mainly due to advances in AI, more precisely in machine learning (ML), which allows machines to comprehend the world not only through the analysis of various signals but also at the level of cognitive sensing (vision and audio). Each computing device should be as efficient as possible and minimize the energy it uses.

Low-power neural network accelerators will enable sensors to perform online, continuous learning and to build complex information models of the world they perceive. Neuromorphic technologies such as spiking neural networks and compute-in-memory architectures are compelling choices for efficiently processing and fusing streaming sensory data, especially when combined with event-based sensors. Event-based sensors, such as the so-called retinomorphic cameras, are becoming extremely important for edge computing, where energy can be a very limited resource. Major issues for edge systems, and even more for AI-embedded systems, are energy efficiency and energy management. Intelligent power/energy management policies are key for systems in which AI techniques process sensor data, and they are needed to extend the battery life of the entire system.
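To illustrate why spiking networks pair well with event-based sensors, the sketch below simulates a single leaky integrate-and-fire (LIF) neuron, a common textbook neuron model, in an event-driven way: the membrane potential is only updated when an input event arrives, using the analytic exponential decay since the previous event, so no work is done between events. The time constant, threshold and event weights are illustrative assumptions, not parameters of any particular neuromorphic chip.

```python
import math
from typing import List, Tuple

def lif_event_driven(events: List[Tuple[float, float]],
                     tau: float = 20.0,
                     threshold: float = 1.0) -> List[float]:
    """Event-driven leaky integrate-and-fire neuron.

    events: (time_ms, weight) pairs sorted by time, e.g. produced by an
    event-based sensor. Between events the membrane potential decays as
    v * exp(-dt / tau), so the state is only touched when an event arrives.
    Returns the times at which the neuron emits an output spike.
    """
    v = 0.0        # membrane potential
    last_t = 0.0   # time of the last state update
    spikes = []
    for t, w in events:
        v *= math.exp(-(t - last_t) / tau)  # leak accumulated since the last event
        v += w                              # integrate the incoming event
        last_t = t
        if v >= threshold:                  # fire, then reset
            spikes.append(t)
            v = 0.0
    return spikes

# A burst of closely spaced events triggers a spike; sparse events leak away.
print(lif_event_driven([(0, 0.4), (1, 0.4), (2, 0.4), (100, 0.4), (200, 0.4)]))
```

The design choice to update state only on events, rather than on a fixed clock, is what lets neuromorphic hardware stay idle (and consume little energy) when the sensor reports nothing new.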

As useful information should be extracted on the (extreme) edge device, personal data protection must be achieved by design, and data traffic towards the cloud and the edge-cloud can be reduced to a minimum. Such intelligent sensors will not only recognize low-level features but will also be able to form higher-level concepts, while requiring very little (or no) training. For example, whereas digital twins currently need to be hand-crafted and built bit by bit, so to speak, tomorrow’s smart sensor systems will build digital twins autonomously by aggregating the sensory input that flows into them.

To achieve intelligent sensors with online learning capabilities, semiconductor technologies alone will not suffice. Neuroscience and information theory will continue to discover new ways122 of transforming sensory data into knowledge. These theoretical frameworks help model the cortical code and will play an important role towards achieving real intelligence at the extreme edge.

AI systems rely on training and inference to provide their functions, and these two phases place significantly different demands on the computing resources provided by AI chips. Training is based on past data: datasets are analysed, and the resulting findings/patterns are built into the AI algorithm. Current training hardware needs to provide computational accuracy, sufficient representation accuracy (e.g. floating-point or fixed-point with a long word length), large memory bandwidth, memory management and synchronization techniques to achieve high computational efficiency, and fast write and access times to large amounts of data123. However, recent research points to an increasing potential for training complex CNN models even on constrained edge devices.124

Reinforcement learning (RL) is a booming area of machine learning that studies how agents should take actions in an environment in order to maximize a cumulative reward. Recent work125 has developed systems that were able to discover their own reward function from scratch. Similarly, AutoML makes it possible to determine a “good” structure for a DL system to be efficient at a task. But all these approaches are also very compute-demanding.
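The "cumulative reward" an RL agent maximizes is usually formalized as a discounted return, G_t = r_t + γ·G_{t+1}, with a discount factor γ between 0 and 1. The text above does not specify a formulation, so the sketch below assumes this common one and simply computes the return of every step of a single episode.

```python
from typing import List

def discounted_returns(rewards: List[float], gamma: float = 0.9) -> List[float]:
    """Return G_t = r_t + gamma * G_{t+1} for every step t of one episode.

    gamma trades off immediate against future reward; an RL agent seeks a
    policy that maximizes the expected value of G_0.
    """
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):  # accumulate from the last step backwards
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns

# A reward that only arrives at the end is propagated back, discounted per step.
print(discounted_returns([0.0, 0.0, 1.0], gamma=0.9))
```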

New deep learning models are introduced at an increasing rate, and one of the most recent, with a large application potential, is the transformer. Based on the attention model126, it is a “sequence-to-sequence architecture” that transforms a given sequence of elements into another sequence. Initially used for NLP (Natural Language Processing), where it can translate a sequence in one language into another language or complete the beginning of a text with a plausible continuation, it is now being extended to other domains such as video processing. It is also a self-supervised approach: learning does not need labelled examples, only part of a sequence, with the remaining part serving as the “ground truth”. The biggest models, such as GPT-3, are based on this architecture. GPT-3 was in the spotlight in May 2020 because of its potential use in many different applications (the context being given by the beginning of the sequence), such as generating new text, summarizing text, translating language, answering questions and even generating code from specifications. Even if transformers are today mainly used for cloud applications, this kind of architecture will certainly ripple down to embedded systems in the future. For example, Meta's OPT, released in May 2022, has 1/7 of the CO2 footprint of GPT-3 with similar performance. Nvidia's new GPUs support 8-bit floating point (FP8) in order to implement transformers efficiently.
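The central operation of a transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)·V, as introduced by Vaswani et al. (2017). The pure-Python sketch below shows a single attention head on tiny hand-written matrices; it is only meant to make the computation visible. Production implementations use batched tensor kernels, which is exactly the workload that embedded accelerators and reduced-precision formats such as FP8 aim to speed up.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax(row: List[float]) -> List[float]:
    m = max(row)                          # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    q: n_q x d_k queries, k: n_k x d_k keys, v: n_k x d_v values.
    Each output row is a weighted average of the value rows, weighted by
    how strongly the query matches each key.
    """
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        w = softmax(scores)
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return out

# The query matches the first key more closely, so the first value dominates.
print(attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]))
```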

Inference is the application of the learned algorithm on real devices to solve specific problems based on present data. AI hardware used for inference needs to provide high speed, energy efficiency, low cost, fixed-point representation, efficient memory read access and efficient network interfaces for the whole hardware architecture. The development of AI-based devices with increased performance and energy efficiency enables AI inference "at the edge" (embedded intelligence) and accelerates the development of middleware, allowing a broader range of applications to run seamlessly on a wider variety of AI-based circuits. Companies like Google, Gyrfalcon, Mythic, NXP, STMicroelectronics and Syntiant are developing custom silicon for the edge. As an example, Google has released the Edge TPU, a custom processor that runs TensorFlow Lite models on edge devices. NVidia is releasing the Jetson Orin Nano range of products, which can run sparse neural networks at up to 40 TOPS within a 15 W power envelope127.
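Fixed-point inference is usually obtained by quantizing a trained floating-point model. The text does not prescribe a scheme, so the sketch below assumes the simple symmetric per-tensor int8 variant: each tensor is mapped to integers in [-127, 127] with one scale factor, the dot product is accumulated with integer multiply-accumulates, and the result is rescaled once at the end. Real toolchains add refinements (per-channel scales, zero points, calibration) that are omitted here.

```python
from typing import List, Tuple

def quantize_int8(x: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: x is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(v) for v in x) or 1.0   # avoid division by zero for an all-zero tensor
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(v / scale))) for v in x]
    return q, scale

def int8_dot(a: List[float], b: List[float]) -> float:
    """Dot product computed with integer multiply-accumulate, rescaled once."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer accumulator (int32 on real hardware)
    return acc * sa * sb

a, b = [0.5, -1.0, 0.25], [1.0, 0.5, -0.75]
exact = sum(x * y for x, y in zip(a, b))
print(f"float: {exact:.5f}  int8: {int8_dot(a, b):.5f}")
```

The small difference between the two results is the quantization error that inference hardware trades for cheaper integer arithmetic and 4x smaller weights than 32-bit floating point.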

The tinyML community (https://www.tinyml.org/) is bringing deep learning to microcontrollers with limited resources and ultra-low energy budgets. The MLPerf Tiny benchmark (https://github.com/mlcommons/tiny) allows devices to be compared on the same applications, which matters because it is nearly impossible to compare performance using the figures given by chip providers.

In summary we see the following disruptions on the horizon, once embedded AI enters the application space broadly:

- Various processing tasks, especially AI functions such as voice and environment recognition, will move to local devices, enabling privacy-preserving functionalities.

- The latent intelligence of things will be enabled by AI.

- Federated functionalities will emerge (increasing the functionality of a device by using the capabilities or resources of neighbouring devices).

- Connected functionalities will also show up: this will extend the control and automation of a single system (e.g., a truck, a car) to a network of systems (e.g., a truck platoon), resulting in networked control of a cyber-physical system. The benefit of this is generally better performance and safety. It will also set the foundation for autonomous machines (including vehicles).

- The detection of events by cameras and other long-range sensors (radar, lidar, etc.) is becoming operational. Retina-inspired sensors will ensure low-power operation of the system. Portable devices for blind people will be developed.

- The possibility for disabled people to move their arms and legs is coming within reach, as AI-conditioned sensors will be connected directly to the brain.

- The use of conversational interfaces will increase drastically, improving the human-machine interface through reliable understanding of natural language.

Edge computing and Embedded Artificial Intelligence are key enablers for the future, and Europe should act quickly to play a global role and have a certain level of control of the assets we use in Europe. Further development of AI can be a strategic advantage for Europe, but we are not in a leading position.

AI is already being used as a strategic competitive advantage. Tesla was the first car company to market a driver-assistance system as “Autopilot”. Although it is not qualified to operate without human intervention, it is a significant step towards autonomous driving. Behind this feature is one of the most powerful AI processors found in driver assistance systems. However, these chips are not freely available on the market: they are exclusive to Tesla, which now develops its chips internally, including to train its self-learning capabilities. This example clearly shows the importance of system ownership in AI, which must be secured for Europe if its companies want to be able to sell competitive products once AI becomes pervasive.

In this context, Europe must secure the knowledge to build AI-systems, design AI-chips, procure the AI-software ecosystem, and master the integration task into its products, and particularly into those products where Europe has a lead today.

Adapted to the structure of European industry, which is marked by a vibrant and versatile ecosystem of SMEs together with larger firms, we need to build and enhance an AI ecosystem tailored to Europe's particular strengths, but also to its weaknesses.

A potential approach could be to:

- Rely on existing application domains where Europe is strong (e.g. automotive, machinery, chemistry, energy, etc.).

- Retain, catch up on and develop in Europe all the expertise required to build competitive Edge Computing systems and Embedded Intelligence, allowing us to develop solutions adapted to the European market and beyond. The knowledge is already present in Europe, but it is neither structured nor focused, and it is often targeted by non-European companies. The European ecosystem is rich and composed of many SMEs, but with little focus on common goals and cooperation.

- Open-source Hardware can be an enabler or facilitator of this evolution, allowing this swarm of SMEs to develop solutions more adapted to the diversity of the market.

- Data-based and knowledge-based modelling combined into hybrid modelling is an important enabler.

- A particular advantage will come from cross-domain and cross-technology cooperation between European vendors, combining the best hardware and software know-how and technologies.

- Cooperation along and across value chains for both hardware and software experts will be crucial in the field of smart systems and the AI and IoT community.

While Europe is recognized for its know-how in embedded systems architecture and software, it should continue to invest in this domain to remain at the state of the art, despite fierce competition from countries such as the USA, China and India. From this perspective, the convergence between AI and Edge Computing, what we call

European companies also lead in embedded microcontrollers. Automotive, IoT, medical applications and embedded systems in general use many low-cost microcontrollers, which integrate a complete system (computing, memory, and various peripherals) on a single die. Here, proactive innovation is necessary to upgrade existing systems with the new possibilities offered by AI, Cyber-Physical Systems and Edge Computing, with a focus on local AI. New applications will require more processing power to remain competitive, and existing applications will also need AI components to remain competitive. But power dissipation must not increase accordingly; in fact, a reduction is required. Europe has lost some ground in the processor domain, but AI is also an opportunity to regain part of its sovereignty in computing, as completely new applications emerge. Mastering the key technologies of the future is mandatory to strengthen Europe, for example to attract young talent and to enable innovation in applications.

Europe no longer has a presence in "classical" computing such as processors for laptops and desktops, servers (cloud) and HPC, but the drive towards Edge Computing, as part of a computing continuum, might be an opportunity to use its solid know-how in embedded systems and extend it with high-performance technology to create Embedded (or Edge) High Performance Computers (eHPC) that can be used in European meta-edge devices. The European Commission initiative "for the design and development of European low-power processors and related technologies for extreme-scale, high-performance big-data and emerging applications, in the automotive sector" could reactivate Europe's presence in that field and has led to the launch of the European Processor Initiative (EPI). New initiatives around RISC-V and open-source hardware are also key ingredients to keep Europe in the race.

AI-optimized hardware components such as CPUs, GPUs, DPUs, FPGAs, ASIC accelerators and neuromorphic processors are becoming more and more important. European solutions exist, and the knowledge of how to build AI systems is available, mainly in academia. However, more EU action is needed to bring this knowledge into real products, with a view to enhancing European industry and its strong incumbent products. Focused action is required to extend technological capabilities and to secure Europe’s industrial competitiveness. A promising approach to avoiding dependence on closed processing technologies relies on Open Hardware initiatives (Open Compute Project, RISC-V, OpenCores, OpenCAPI, etc.). Adopting an open-ecosystem approach, with know-how built globally and incrementally by multiple actors, prevents any single entity from monopolizing the market or from ceasing to exist for other reasons. The very low up-front cost of open hardware/silicon IP lowers the barrier to innovation for small players, who can create, customize, integrate, or improve open IP for their specific needs. Thanks to freely shared Open Hardware and to the manufacturing capabilities, prototyping facilities and related know-how that still exist in Europe, a new wave of European start-ups could come into existence, building on existing designs and creating significant value by adding the customization needed for industries such as automotive, energy, manufacturing or health/medical. Another advantage of open-source hardware is that its source code is auditable and can therefore be inspected to ensure quality (making it less prone to attack if it is correctly analysed and corrected).

In a world in which some countries are becoming more and more protectionist, not having high-end processing capabilities (i.e., relying on buying them from countries outside Europe) might become a weakness (leaving, for example, the learning/training capabilities of AI systems to foreign companies/countries). China, Japan, India, and Russia are starting to develop their own processing capabilities in order to prevent potential shortages or political embargoes.

It is also very important for Europe to master the key technologies of the future, such as AI and the drive towards more local computing, not only because this will sustain its industry, but also to master the complete ecosystem of education, job creation and attraction of young talent into this field, while rapidly implementing the new measures presented in Major Challenge 4.

2.1.5.1 For Edge Computing

Four Major Challenges have been identified for the further development of computing systems, especially in the field of embedded architectures and Edge Computing:

- Increasing the energy efficiency of computing systems:

- Managing the increasing complexity of systems:

- Supporting the increasing lifespan of devices and systems:

- HW supporting software upgradability.

- Improving interoperability within the same class of applications and between classes, as well as modularity and complementarity between generations of devices.

- Developing the concept of 2nd life for components.

- Implementation on the smallest devices, high-quality data, meta-learning, neuromorphic computing, and other novel hardware architectures.

- Ensuring European sustainability in Embedded architectures design:

2.1.5.2 For Embedded Intelligence

The world is more and more connected, and data collection is exploding. The heterogeneity of data and solutions, the need for flexible computation ranging from basic sensors to multiple sensors with data fusion, the protection of data and systems, and the extreme variety of use cases with different data formats, connectivity, bandwidth, real-time requirements or not, etc. all increase the complexity of systems and their interactions. This leads to systems-of-systems solutions, distributed from the deep edge to the cloud and possibly forming a continuum in this connected world.

Ultimately, energy efficiency becomes the key criterion, as the digital world accounts for an ever-increasing share of the electricity produced.

Embedded Intelligence is therefore seen as a crucial element for the smooth and optimized operation of distributed systems. It is a powerful tool for achieving goals such as:

- Energy efficiency, by processing data locally and minimizing the data sent to the upper node of the network.

- Securing the data (including privacy) by keeping it local.

- Allowing different systems to communicate with each other and to adapt over time (increasing their lifetime).

- Increasing resilience by learning and becoming more secure and more reliable.

- Keeping systems always on and accessible towards a network continuum.

In addition, Embedded Intelligence can be deployed at all levels of the chain. However, many challenges must be solved to achieve these goals.

The first priority is energy efficiency. The balance between the energy consumed by embedded AI and the overall energy savings must be carefully reviewed. New innovative architectures and technologies (near-memory computing, in-memory computing, neuromorphic, etc.) need to be developed, as well as sparse coding and sparse algorithm topologies (e.g., for deep neural networks). It also means carefully choosing which data is collected and for what purpose. Avoiding data transfers is also key to low power: in neural networks, storage (the synaptic weights) and computing (the neurons) are closely coupled, which leads to architectures that may differ from the von Neumann model, where storage and computation are clearly separated. In-memory and near-memory computing are potentially efficient architectures for some AI algorithms.
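Sparsity saves energy because operations with a zero operand can simply be skipped. The sketch below compares a dense matrix-vector product with one that skips zero inputs (as produced, for instance, by ReLU activations) and counts the multiply-accumulate (MAC) operations of each. Using the MAC count as a proxy for energy is an assumption made for illustration; real savings also depend on memory accesses and control overhead.

```python
from typing import List, Tuple

def matvec_dense(w: List[List[float]], x: List[float]) -> Tuple[List[float], int]:
    """Dense product: every weight is multiplied, whatever the input value."""
    y = [sum(wij * xj for wij, xj in zip(row, x)) for row in w]
    return y, len(w) * len(x)

def matvec_sparse(w: List[List[float]], x: List[float]) -> Tuple[List[float], int]:
    """Skip zero inputs first, so their weight columns are never read or multiplied."""
    nz = [(j, xj) for j, xj in enumerate(x) if xj != 0.0]
    y = [sum(row[j] * xj for j, xj in nz) for row in w]
    return y, len(w) * len(nz)

w = [[1, 2, 3, 4], [5, 6, 7, 8]]
x = [0, 1, 0, 2]   # half of the activations are zero
print(matvec_dense(w, x), matvec_sparse(w, x))
```

Both versions produce the same result, but the zero-skipping one performs half the MACs here and never fetches the weights of the zero inputs, which is where the data-movement savings come from.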

Second, Embedded AI must be scalable and modular all along the distributed chain, increasing flexibility, resilience, and compatibility. Stability between systems must be achieved and tested. Thus, benchmark and validation tools for Embedded AI and related techniques have to be developed.

Third, self-learning techniques (federated learning, unsupervised learning, etc.) will be necessary for fast and automatic adaptation.

Finally, trust in AI is key to societal acceptance. Explainability and interpretability of AI decisions in critical systems are important factors for AI adoption, together with certification processes.

Algorithms for Artificial Intelligence can be implemented as stand-alone, distributed (federated, swarm, etc.) or centralized solutions (of course, not all algorithms can be implemented efficiently in all three forms). For energy, privacy and all the reasons explained above, stand-alone or distributed solutions are preferable (hence the name “Intelligence at the edge”). In the short term, the focus might be more on stand-alone AI (e.g., self-driving cars), followed by distributed (or connected) AI, such as car2car or car2infrastructure.
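Federated learning is one way to distribute training while keeping raw data on the devices. The sketch below shows only the server-side aggregation step of federated averaging (FedAvg, McMahan et al.), in which locally trained parameters are averaged with weights proportional to each client's number of training samples. Local training, communication and security are omitted, and representing a model as a flat list of floats is a simplification.

```python
from typing import List

def fed_avg(client_weights: List[List[float]], client_sizes: List[int]) -> List[float]:
    """Sample-weighted average of client parameter vectors (FedAvg aggregation step).

    Only model parameters leave the devices; the raw training data stays local.
    """
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Two edge devices: the second trained on three times as many samples.
print(fed_avg([[1.0, 0.0], [3.0, 2.0]], [1, 3]))
```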

Summarizing, four Major Challenges have been identified:

- Increasing the energy efficiency:

- Development of innovative (and heterogeneous) hardware architectures: e.g. Neuromorphic.

- Avoiding moving large quantities of data: processing at the source of data, sparse data coding, etc. Only processing when it is required (sparse topology, algorithms, etc.).

- Interoperability within the same class of applications and between classes.

- Scalable and Modular AI.

- Managing the increasing complexity of systems:

- Development of trustable AI.

- Easy adaptation of models.

- Standardized APIs for hardware and software tool chains, and common descriptions of hardware capabilities.

- Supporting the increasing lifespan of devices and systems:

- Realizing self-X (unsupervised learning, transfer learning, etc.).

- Update mechanisms (adaptation, learning, etc.).

- Ensuring European sustainability in AI:

- Developing solutions that correspond to European needs and ethical principles.

- Transforming European innovations into commercial successes.

- Cultivating diverse skillsets and expertise to address all parts of the European embedded AI ecosystem.

Of course, as seen above, all the generic challenges of embedded architectures are also important for embedded-AI-based systems, but we will describe more precisely what is specific to each subsection (Embedded architectures/Edge computing and Embedded Intelligence).

2.1.5.3 Major Challenge 1: Increasing the energy efficiency of computing systems

State of the art

The advantages of using digital systems should not be hampered by their energy cost. For HPC or data centres, the main challenge is clearly not only to reach “exaflops”, but to reach “exaflops” at a reasonable energy cost, which affects the cooling infrastructure, the size of the “power plug” and, overall, the total cost of ownership. At the other end of the spectrum, micro-edge devices should work for months on a small battery, or even by scavenging energy from the environment (energy harvesting). Reducing the energy footprint of devices is central to achieving sustainability and the goals of the “European Green Deal”. Multimode energy harvesting (e.g., solar/wind, regenerative braking, dampers/shock absorbers, thermoelectric, etc.) offers huge potential for electric vehicles and other battery- or fuel-cell-operated vehicles, in addition to energy-efficient design, real-time integrity sensing, energy storage and other functions.

Power consumption should be considered not just at the level of the device, but at the level of the aggregation of functions required to fulfil a task.

New semiconductor technology nodes no longer bring real improvements in power per device: Dennard scaling is ending, and moving to a smaller node no longer leads to a large increase in operating frequency or a decrease in operating voltage. Therefore, the dissipated power per unit area (the power density of devices) is increasing rather than decreasing. Transistor architectures such as FinFET, FDSOI, GAA and nanosheets mainly reduce the leakage current (i.e., the energy spent by an inactive device). However, transistors made on FDSOI substrates achieve the same performance as FinFET transistors at a lower operating voltage, reducing dynamic power consumption.
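Why the supply voltage matters so much can be seen from the standard first-order model of CMOS dynamic (switching) power, with activity factor α, switched capacitance C, supply voltage V_DD and clock frequency f. Under classical Dennard scaling, C and V_DD shrank with each node, which kept power density roughly constant while f increased; once V_DD stopped scaling, power density started to climb. Because the dependence on V_DD is quadratic, operating at a lower voltage, as FDSOI allows, saves more than proportionally. The 20% voltage reduction below is an illustrative assumption, at constant α, C and f.

```latex
P_{\text{dyn}} = \alpha \, C \, V_{DD}^{2} \, f
\qquad\Rightarrow\qquad
\frac{P_{\text{dyn}}(0.8\,V_{DD})}{P_{\text{dyn}}(V_{DD})} = 0.8^{2} = 0.64
```

In other words, a 20% lower supply voltage cuts dynamic power by about 36% in this model.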

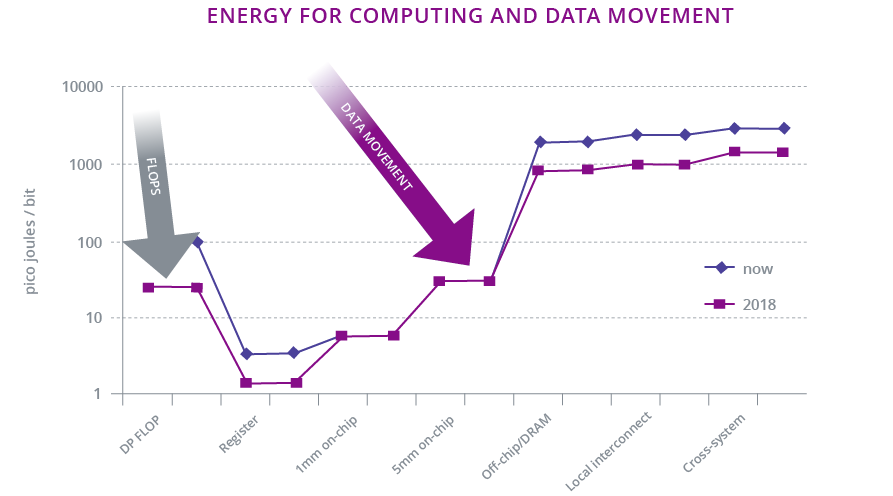

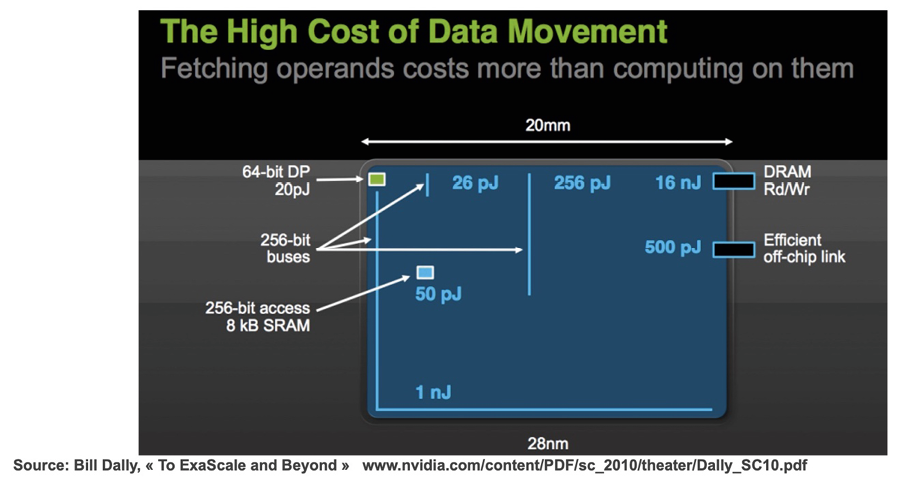

In addition, there is the memory wall. Today's limitation comes not from the raw processing power of systems but from the capacity to bring data to the computing nodes fast enough and within a reasonable power budget.
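The memory wall can be quantified with the roofline model (Williams, Waterman and Patterson): attainable throughput is the minimum of the peak compute rate and the memory bandwidth multiplied by the workload's arithmetic intensity (operations per byte moved). The peak and bandwidth figures below are hypothetical placeholders for an edge accelerator, not data for any real device.

```python
def attainable_gops(peak_gops: float, bandwidth_gb_s: float, ops_per_byte: float) -> float:
    """Roofline model: performance is capped by compute or by data movement."""
    return min(peak_gops, bandwidth_gb_s * ops_per_byte)

# Hypothetical edge accelerator: 1000 GOPS peak, 10 GB/s external memory bandwidth.
PEAK, BW = 1000.0, 10.0
ridge = PEAK / BW  # arithmetic intensity (ops/byte) needed to become compute-bound
for intensity in (1.0, 10.0, 100.0, 1000.0):
    bound = "memory" if intensity < ridge else "compute"
    perf = attainable_gops(PEAK, BW, intensity)
    print(f"{intensity:>6} ops/B -> {perf:>7.1f} GOPS ({bound}-bound)")
```

With these assumed numbers, any kernel performing fewer than 100 operations per byte fetched leaves most of the compute idle, which is why data reuse and in- or near-memory computing matter more than adding raw compute.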

Furthermore, system memory is only part of a broader data-movement challenge, which requires significant progress across the data access/storage hierarchy, from registers and main memory (e.g. progress in NVM technology, such as Intel’s 3D XPoint) to external mass storage devices (e.g. progress in 3D NAND flash, SCM derived from NVM, etc.). In a modern system, a large part of the energy is dissipated in moving data from one place to another. For this reason, new architectures such as in- or near-memory computing, neuromorphic architectures (including those where the physics of NVM technologies such as PCM, CBRAM, MRAM, OXRAM, ReRAM and FeFET can be used to compute) and lower-bit-count processing are of primary importance.

Power consumption can be reduced by processing collected data locally, not only at circuit level, but also at system level, or at least as close as possible to the sensors in the data-transfer chain towards the data centre (for example, in the gateway). Whereas the traditional approach was to have sensors generate as much data as possible and leave interpretation and action to a central unit, future sensors will evolve from mere data-generating devices into devices that generate semantic information at the appropriate conceptual level. This will obviate the need for high bit rates, and thus high power consumption, between the sensors and the central unit. In summary, raw data should be transformed into relevant (useful) information as early as possible in the processing continuum to improve global energy efficiency:

- Only end-point or intermediate equipment is active, potentially using low-power or sleep modes.

- Data transfer through network infrastructures is reduced. Only necessary data is sent to the upper level.

- Usage of computing time in data centres is also minimized.

- The development of benchmarks and standardization for HW/SW and datasets could be an appropriate measure to reduce power consumption, as it would make energy consumption easy to evaluate across the complete chain, from micro-edge to cloud.
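One simple way to send "only necessary data" upstream is send-on-delta (deadband) reporting: a node transmits a reading only when it differs from the last transmitted value by more than a threshold. This is just one illustrative policy among many; the threshold and sensor readings below are assumptions.

```python
from typing import List, Optional, Tuple

def send_on_delta(samples: List[float], delta: float) -> List[Tuple[int, float]]:
    """Return the (index, value) pairs that would be transmitted upstream.

    The first sample is always sent; later samples are sent only if they
    moved by more than `delta` since the last transmitted value.
    """
    sent: List[Tuple[int, float]] = []
    last: Optional[float] = None
    for i, v in enumerate(samples):
        if last is None or abs(v - last) > delta:
            sent.append((i, v))
            last = v
    return sent

readings = [20.0, 20.1, 20.05, 20.2, 21.0, 21.1, 21.05, 25.0]  # e.g. temperature in °C
tx = send_on_delta(readings, delta=0.5)
print(f"sent {len(tx)} of {len(readings)} samples: {tx}")
```

Here only the meaningful changes reach the gateway, so both the radio energy of the node and the downstream processing load shrink with the redundancy of the signal.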

Key focus areas

Increasing the energy efficiency of computing systems, especially systems for AI and Edge Computing, requires the development of innovative hardware architectures at all levels, with their associated software architectures and algorithms:

- At technology level (FinFET, FDSOI, silicon nanowires or nanosheets), technologies are pushing towards ultra-low power. In addition, advanced architectures are moving from near-memory computing to in-memory computing, with potential gains of 10 to 100 times. Advanced integration and packaging technologies (2.5D, chiplets, active interposers, etc.) have also recently emerged, opening innovative design possibilities, particularly for tighter sensor-compute and memory-compute integration.

- At device level, several types of circuit architecture are currently deployed, tested, or under development worldwide. They range from the well-known CPU to increasingly dedicated accelerators integrated in embedded architectures (GPU, DPU, TPU, NPU, etc.) that provide accelerated data processing and management capabilities, implemented in very different ways, from fully digital to mixed-signal or fully analog solutions:

- Fully digital solutions have addressed the needs of emerging application loads such as AI/DL workloads using a combination of parallel computing (e.g., SMP and GPU) and accelerated hardware primitives (such as systolic arrays), often combined in heterogeneous embedded architectures. Low-bit-precision (8-bit integer or less) computation and sparsity-aware acceleration have proven effective at minimizing the energy consumed per elementary operation in regular AI/DL inference workloads; on the other hand, many challenges remain for hardware that can opportunistically exploit the characteristics of more irregular mixed-precision networks. Some applications, including AI/DL, also need more flexibility and precision in numerical representation (32- or 16-bit floating point), which limits the hardware efficiency that can be achieved on the compute side.

- Avoiding moving data: this is crucial because the access energy of any off-chip memory is currently 10-100x more expensive than access to on-chip memory. Emerging non-volatile memory technologies such as MRAM, with asymmetric read/write energy cost, could provide a potential solution to relieve this issue, by means of their greater density at the same technology node. Near-Memory Computing (NMC) and In-Memory Computing (IMC) techniques move part of the computation near or inside memory, respectively, further offsetting this problem. While IMC in particular is extremely promising, careful optimization at the system level is required to really take advantage of the theoretical peak efficiency potential.

- Another approach is to perform invariant perceptual processing and to produce semantic representations from any type of sensory input.

- At system level, micro-edge computing near sensors (i.e., integrating processing inside or very close to the sensors or into local control) will allow embedded architectures to operate in the range of 10 mW to 100 mW, with an estimated energy efficiency of hundreds of GOPS/W up to a few TOPS/W in the next 5 years. This could be negligible compared to the consumption of the sensor itself (for example, a MEMS microphone can draw a few mA). In addition, the device can go into standby or sleep mode when not in use, and connectivity need not be permanent. Devices currently deployed at the edge rarely process data 24/7 as data centres do: to minimize global energy, a key requirement for future edge embedded architectures is to combine high-performance “nominal” operating modes with lower-voltage, high-compute-efficiency modes and, most importantly, with ultra-low-power sleep states, consuming well below 1 mW in fully state-retentive sleep and less than 1-10 µW in deep sleep. The possibility of leaving embedded architectures in an ultra-low-power state most of the time has a significant impact on the global energy consumed. The ability to orchestrate and manage edge devices therefore becomes fundamental and should be supported by design. By contrast, data servers are currently always on, even when they are loaded at only 60% of their computing capability.

- At data level, memory hierarchies will have to be designed around the data-reuse characteristics and access patterns of algorithms, which strongly affect load and store access rates and hence the energy needed to access each memory in the hierarchy. For example, weights and activations in a Deep Neural Network have very different access patterns and can be mapped to entirely separate hierarchies, exploiting different combinations of external Flash, DRAM, on-chip non-volatile memory (MRAM, FRAM, etc.) and SRAM.

- At tools level, HW/SW co-design of systems and their associated algorithms is mandatory to minimize data movement and make optimal use of hardware resources, particularly when accelerators are available, and thus to optimize power consumption.
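The cost of data movement described in the list above can be illustrated with a first-order energy model: total memory energy is the number of accesses to each memory level times that level's energy per access. In the sketch below, the per-access energies (`ON_CHIP_PJ`, `OFF_CHIP_PJ`) and the workload size are hypothetical values, chosen only so that an off-chip access costs 50x an on-chip one, inside the 10-100x range cited; they are not measurements of any device.

```python
# First-order model of memory-access energy for one inference.
# ASSUMPTION: hypothetical per-access energies in picojoules; only their
# ratio (50x, within the 10-100x range cited in the text) matters here.
ON_CHIP_PJ = 5.0
OFF_CHIP_PJ = 250.0


def access_energy_uj(on_chip_accesses: int, off_chip_accesses: int) -> float:
    """Total memory-access energy in microjoules (1 uJ = 1e6 pJ)."""
    total_pj = on_chip_accesses * ON_CHIP_PJ + off_chip_accesses * OFF_CHIP_PJ
    return total_pj / 1e6


total_accesses = 10_000_000  # hypothetical number of accesses per inference
for off_chip_fraction in (0.0, 0.1, 0.5):
    off = int(total_accesses * off_chip_fraction)
    on = total_accesses - off
    print(f"{off_chip_fraction:4.0%} off-chip: {access_energy_uj(on, off):8.1f} uJ")
```

In this model, moving just 10% of the accesses off-chip raises memory energy from 50 µJ to 295 µJ, almost 6x, which is why keeping weights and activations close to the compute units, or computing in or near memory, pays off.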
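The system-level point about sleep states reduces to a duty-cycle average: if a device is active for a fraction d of the time, its average power is d × P_active + (1 - d) × P_sleep. The sketch below evaluates this and converts an energy efficiency in TOPS/W into sustained throughput. The 50 mW active power, 5 µW deep-sleep power and 1% duty cycle are hypothetical figures picked from within the ranges quoted in the list; transition energy and wake-up latency are ignored.

```python
# Average power of a duty-cycled edge device.
# ASSUMPTION: two modes only (active / deep sleep); mode-transition energy
# and wake-up latency are ignored.


def average_power_mw(active_mw: float, sleep_mw: float, duty_cycle: float) -> float:
    """Average power when the device is active a fraction `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in [0, 1]")
    return duty_cycle * active_mw + (1.0 - duty_cycle) * sleep_mw


def throughput_gops(efficiency_tops_per_w: float, power_mw: float) -> float:
    """Sustained throughput in GOPS: TOPS/W x W gives TOPS, x1000 gives GOPS."""
    return efficiency_tops_per_w * (power_mw / 1000.0) * 1000.0


# Hypothetical figures: 50 mW active, 5 uW (0.005 mW) deep sleep, 1% duty cycle.
p_avg = average_power_mw(active_mw=50.0, sleep_mw=0.005, duty_cycle=0.01)
p_always_on = average_power_mw(active_mw=50.0, sleep_mw=0.005, duty_cycle=1.0)
print(f"duty-cycled: {p_avg:.3f} mW, always on: {p_always_on:.1f} mW")
print(f"throughput at 1 TOPS/W and 50 mW: {throughput_gops(1.0, 50.0):.0f} GOPS")
```

At a 1% duty cycle the average power drops roughly 100x compared with always-on operation, so the depth of the sleep state and the fraction of time spent in it weigh as much on the energy budget as peak efficiency does.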

State of the art

Training AI models can be very energy demanding. For example, according to a recent study129, training a model for natural-language processing (NLP, the sub-field of AI focused on teaching machines to handle human language) could end up emitting as much carbon as five cars over their lifetimes130. Moreover, if the trained model is then used for inference billions of times (e.g., on the smartphones of a billion users), the carbon footprint of inference could even outweigh that of training. Another analysis131, published by OpenAI, reveals a worrying trend: "since 2012, the amount of compute used in the largest AI training runs has been increasing exponentially with a 3.5-month doubling time (by comparison, Moore's law had a 2-year doubling period)". These studies show that the computing power (and associated energy consumption) needed to train AI models is growing dramatically. Consequently, AI training processes need to become greener and more energy efficient.
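The gap between the two doubling times quoted above compounds quickly. The short sketch below simply evaluates 2^(t / T_d) for a doubling time T_d and an elapsed time t, using the 3.5-month and 24-month figures from the quotation; it illustrates the arithmetic of the quoted trend and makes no forecast of its own.

```python
# Compound growth implied by a fixed doubling time: factor = 2 ** (t / T_d).
AI_DOUBLING_MONTHS = 3.5      # doubling time of training compute, as quoted
MOORE_DOUBLING_MONTHS = 24.0  # Moore's law doubling period, as quoted


def growth_factor(months: float, doubling_time_months: float) -> float:
    """Multiplicative growth after `months` for the given doubling time."""
    return 2.0 ** (months / doubling_time_months)


for years in (1, 2, 5):
    months = 12 * years
    ai = growth_factor(months, AI_DOUBLING_MONTHS)
    moore = growth_factor(months, MOORE_DOUBLING_MONTHS)
    print(f"{years} yr: training compute x{ai:,.0f} vs Moore's law x{moore:.1f}")
```

Over a single year the quoted trend already corresponds to a roughly tenfold increase, against about 1.4x for Moore's law.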

For a given use case, the search for the optimal solution involves multi-objective trade-offs among the accuracy of the trained model, its latency, safety, security, and the overall energy cost of the associated solution. The latter includes not only the energy consumed per inference but also how frequently the model is used and the energy needed to train it.
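The energy part of this trade-off can be made explicit with a simple lifetime model, E_total = E_train + N × E_inference, where N is the number of inferences over the model's service life. The sketch below keeps only an accuracy floor as a constraint and picks the candidate with the lowest lifetime energy; the `Candidate` class, the model names and all figures are hypothetical, and latency, safety and security would enter a real search as further objectives or constraints.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

J_PER_KWH = 3.6e6  # 1 kWh = 3.6 MJ


@dataclass
class Candidate:
    """A candidate model; every figure is a hypothetical input."""
    name: str
    train_energy_kwh: float    # one-off training energy
    inference_energy_j: float  # energy per inference on the target device
    accuracy: float            # validation accuracy in [0, 1]

    def lifetime_energy_kwh(self, n_inferences: float) -> float:
        """E_total = E_train + N * E_inference, expressed in kWh."""
        return self.train_energy_kwh + n_inferences * self.inference_energy_j / J_PER_KWH


def pick(candidates: Sequence[Candidate], n_inferences: float,
         min_accuracy: float) -> Optional[Candidate]:
    """Lowest lifetime energy among candidates that meet the accuracy floor."""
    feasible = [c for c in candidates if c.accuracy >= min_accuracy]
    if not feasible:
        return None
    return min(feasible, key=lambda c: c.lifetime_energy_kwh(n_inferences))


models = [
    Candidate("large-model", train_energy_kwh=1000.0, inference_energy_j=2.0, accuracy=0.95),
    Candidate("compact-model", train_energy_kwh=1500.0, inference_energy_j=0.1, accuracy=0.93),
]
for n in (1e6, 1e9):
    best = pick(models, n, min_accuracy=0.90)
    print(f"N = {n:.0e}: {best.name if best else 'none'}")
```

With these made-up figures the large model wins at a million inferences, while the compact one, despite its higher training cost, wins at a billion, because per-inference energy dominates once usage is high. Raising the accuracy floor to 0.94 would exclude the compact model altogether.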

In addition, novel learning paradigms such as transfer learning, federated learning, self-supervised learning, online/continual/incremental learning, and local and context adaptation should be preferred, not only to increase the effectiveness of the inference models but also to decrease the energy cost of learning. Indeed, these schemes avoid retraining models from scratch every time, or reduce the number and size of the model parameters exchanged during distributed training.
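As an illustration of how federated learning limits what is exchanged, the sketch below shows the server-side aggregation step of Federated Averaging (FedAvg): each device trains locally and sends only a parameter vector plus its local sample count, and the server computes a sample-weighted average. The function name `federated_average`, the flat-list representation and the example values are assumptions for illustration; local training and communication are omitted.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation: average client parameter vectors,
    weighting each client by its number of local training samples."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must send vectors of the same length")
    total = sum(client_sizes)
    return [sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(dim)]


# Three hypothetical edge devices: only parameters travel, never raw data.
clients = [[0.2, -1.0], [0.4, -0.6], [0.1, -0.8]]
sizes = [100, 300, 600]
print(federated_average(clients, sizes))
```

The amount of data exchanged per round scales with the number of model parameters, not with the size of the local datasets, which is why smaller or partially frozen models (as in transfer learning) also reduce communication energy.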

Although significant effort has been made in the past to enable ANN-based inference on less powerful integrated circuits with less memory, a considerable challenge today is that non-trivial DL-based inference requires significantly more than the 0.5-1 MB of SRAM typically integrated in microcontroller devices. Several approaches exist to reduce the size of a DL model, such as quantizing the weights and biases or pruning network layers. These approaches are also fundamental for reducing the power consumption of inference devices, but they clearly cannot be the definitive long-term solution.
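A quick way to see the SRAM constraint is to compute parameter storage as number of parameters × bits per parameter. The sketch below does this for a hypothetical 1.5-million-parameter model against a 1 MB budget (treated as 1 MiB), the upper end of the range cited. It counts parameters only; activations, code and runtime buffers also need memory, so real headroom is smaller.

```python
# Parameter storage versus a microcontroller SRAM budget.
# ASSUMPTION: parameters only; activations, code and runtime buffers ignored.
SRAM_BYTES = 1 * 1024 * 1024  # upper end of the 0.5-1 MB range, as 1 MiB


def model_size_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store the parameters, rounded up to whole bytes."""
    return (num_params * bits_per_param + 7) // 8


def fits_in_sram(num_params: int, bits_per_param: int, sram_bytes: int) -> bool:
    return model_size_bytes(num_params, bits_per_param) <= sram_bytes


num_params = 1_500_000  # hypothetical model size
for bits in (32, 16, 8, 4):
    size_kib = model_size_bytes(num_params, bits) / 1024
    fits = fits_in_sram(num_params, bits, SRAM_BYTES)
    print(f"{bits:>2}-bit weights: {size_kib:7.0f} KiB, fits: {fits}")
```

For this example even 8-bit weights do not fit and only a 4-bit representation does, which shows both why quantization matters and why it runs out of headroom as models grow.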

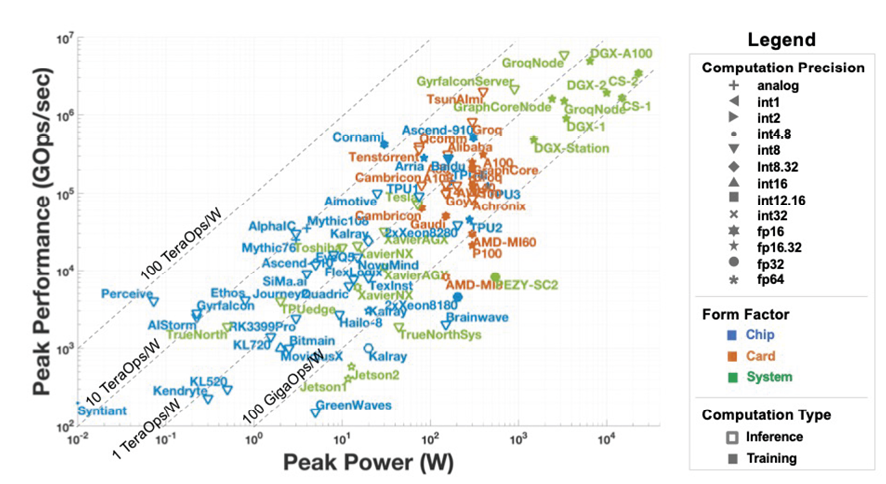

There is intense development activity around computing systems that explicitly support novel AI-oriented use cases, with implementations ranging from chips to modules and systems. As depicted in the figure, this activity also covers wide ranges of performance and power, from high-end servers to ultra-low-power IoT devices.

To efficiently support new AI-related applications, new accelerators need to be developed for both the server side and the client (edge) side. For example, DL does not usually need 32/64/128-bit floating-point arithmetic for its learning phase, but rather variable precision, including dedicated formats such as bfloat16. In addition, a close connection between compute and storage is required (neural networks are ideal candidates for a "compute in memory" approach). Storage also needs to be adapted to AI requirements: specific data access patterns, co-location of compute and storage, memory hierarchy, and local vs. cloud storage.
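bfloat16 keeps the 8-bit exponent of float32, and hence its dynamic range, but only 7 explicit mantissa bits, so a float32 value can be converted by rounding away its lower 16 bits. The sketch below shows that conversion with round-to-nearest-even using only Python's standard `struct` module; the helper names are hypothetical, and NaN and infinity inputs are deliberately not handled.

```python
import struct


def float32_bits(x: float) -> int:
    """Bit pattern of x after rounding it to IEEE-754 single precision."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bfloat16_bits(x: float) -> int:
    """Round a float32 to bfloat16 (round-to-nearest-even on the dropped
    16 bits). NaN/infinity handling is deliberately omitted in this sketch."""
    bits = float32_bits(x)
    lsb = (bits >> 16) & 1          # lowest bit that bfloat16 keeps
    rounded = bits + 0x7FFF + lsb   # exact ties go to the even neighbour
    return (rounded >> 16) & 0xFFFF


def bfloat16_to_float(b: int) -> float:
    """Widen a bfloat16 bit pattern by appending 16 zero bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


for value in (1.0, 3.14159265, 1e38, 1e-30):
    b = to_bfloat16_bits(value)
    print(f"{value!r:>12} -> 0x{b:04X} -> {bfloat16_to_float(b)!r}")
```

Values as large as 1e38, far beyond the float16 maximum of 65504, survive the conversion with under 0.4% relative error, which illustrates why bfloat16 is attractive for training: it trades mantissa precision for float32's range.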

Similarly, on the edge side, AI accelerators will in particular need to deliver real-time inference while reducing power consumption. For DL applications, the arithmetic operations are simple (mainly multiply-accumulate), but they are performed on very large volumes of data, which makes data access challenging. In addition, clever data-processing schemes are required to reuse data, for instance in convolutional neural networks or in systems with shared weights. Computing and storage are deeply intertwined. Finally, all accelerators must integrate efficiently with more conventional systems.
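The data-reuse point can be seen in a plain 1-D convolution: the whole layer reduces to multiply-accumulate (MAC) operations, each weight is used once per output position, and each input sample is used by up to k neighbouring outputs for a kernel of length k. The sketch below (hypothetical input and kernel, "valid" padding, cross-correlation as in most DL frameworks) counts the MACs to make that reuse explicit; an accelerator exploits it by keeping the kernel and a sliding window of inputs in local storage instead of re-fetching them.

```python
from typing import List, Tuple


def conv1d_valid(x: List[float], w: List[float]) -> Tuple[List[float], int]:
    """1-D 'valid' convolution (cross-correlation) built only from MACs.
    Returns the output sequence and the number of MACs performed."""
    k = len(w)
    out: List[float] = []
    macs = 0
    for i in range(len(x) - k + 1):
        acc = 0.0
        for j in range(k):
            acc += x[i + j] * w[j]  # one multiply-accumulate
            macs += 1
        out.append(acc)
    return out, macs


x = [float(v) for v in range(10)]  # hypothetical input signal
w = [1.0, 0.0, -1.0]               # hypothetical 3-tap kernel
y, macs = conv1d_valid(x, w)
print(f"outputs={len(y)}, MACs={macs}")
# Every weight is reused once per output position.
print(f"uses of each weight: {macs // len(w)}")
```

Here 10 input samples and 3 weights feed 24 MACs, so fetching operands from memory for every MAC would multiply memory traffic several times over compared with fetching each value once and reusing it locally.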

Reducing the size of neural networks and the precision of computation is key to allowing complex deep neural networks to run on embedded devices. This can be achieved by pruning the network topology and/or by reducing the number of bits used to store weights and neuron values. These processes can be applied during training, just after a full-precision training phase, or (with lower accuracy) independently of training, as in post-training quantization. The principle of pruning is to eliminate nodes that contribute little to the final result. Quantization consists either of decreasing the precision of the floating-point representation (from float32 to float16 or even float8, as supported by NVIDIA GPUs mainly for Transformer networks) or of changing the representation from floating point to integers. For the inference phase, current techniques allow 8-bit representations to be used with minimal loss of accuracy, and sometimes allow the number of bits to be reduced further at the cost of an acceptable drop in accuracy or a small increase in network size. Most major development environments (TensorFlow Lite133, N2D2134, etc.) support post-training quantization, and the TinyML community is actively using it. Better tools and algorithms to reduce the size and computational complexity of Deep Neural Networks are of paramount importance for executing efficient AI applications at the edge.
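A minimal sketch of the two techniques named above, applied to a flat list of weights: unstructured magnitude pruning (one common pruning criterion, chosen here as an assumption) and symmetric per-tensor int8 post-training quantization, where each weight is approximated as scale × q with q in [-127, 127]. The function names and weights are hypothetical; production toolchains add steps such as activation calibration and per-channel scales that are not shown.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Unstructured magnitude pruning: zero the int(len * sparsity) weights
    with the smallest absolute value."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [scale * v for v in q]


w = [0.5, -0.02, 0.9, -1.27, 0.01, 0.3]       # hypothetical weights
w_pruned = magnitude_prune(w, sparsity=0.34)  # zeroes the 2 smallest magnitudes
q, scale = quantize_int8(w_pruned)
w_restored = dequantize(q, scale)
print(q, scale)
# Rounding error per weight is bounded by about scale / 2.
print(max(abs(a - b) for a, b in zip(w_pruned, w_restored)))
```

Storing `q` as 8-bit integers plus one float scale cuts weight storage by about 4x relative to float32, and the zeros introduced by pruning can be skipped or compressed further by hardware or software that supports sparsity.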

Finally, new approaches can be used to compute neural networks, such as analogue computing or exploiting the properties of specific materials to perform the computations. These approaches suffer from low precision and high dispersion, but neural networks are able to cope with these limitations.

Besides DL, the "Human Brain Project", an H2020 FET Flagship Project targeting the fields of neuroscience, computing and brain-related medicine, includes in its SP9 the Neuromorphic Computing Platform, comprising SpiNNaker and BrainScaleS. This platform enables experiments with configurable neuromorphic computing systems.

Key focus areas

The focus areas rely on Europe maintaining a leadership role in embedded systems, CPS, components for the edge (e.g., sensors, actuators, embedded microcontrollers), and applications in automotive, electric, connected, autonomous, and shared (ECAS) vehicles, railway, avionics, and production systems. Leveraging AI in these sectors will improve the efficient use of energy resources and increase productivity.

However, running computation-intensive ML/DL models locally on edge devices can be very resource-intensive, requiring, in the worst case, high-end processing units in the end devices. Such a stringent requirement not only increases the cost of edge intelligence but can also make it impractical for, or incompatible with, legacy non-upgradeable devices that have limited computing and memory capabilities. Fortunately, running inference at the edge with the most accurate DL model is not always required. Depending on the use case, different trade-offs among inference accuracy, power consumption, efficiency, security, safety and privacy can be accepted. This awareness can potentially create a permanently accessible AI continuum. Indeed, the real game-changer is to shift from a local view (the device) to the "continuum" (the whole technology stack) and to find the right balance between edge computation (preferable whenever possible, because it does not require data transfer) and data transmission to cloud servers (more expensive in terms of energy). The problem is complex and multi-objective, meaning that the optimal solution may change over time as cost variables and constraints change. Interoperability and compatibility among devices and platforms are essential to enable efficient strategies for exploring this search space.
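A first-order version of this edge-versus-cloud balance compares, on the device side, the energy to run the model locally (operations divided by energy efficiency) with the radio energy to ship the raw data (bits times energy per bit). The sketch below is a single-objective illustration: the `EdgeScenario` class and all figures are hypothetical, cloud-side energy is ignored (which biases the comparison towards offloading), and a real decision would also weigh latency, accuracy, privacy and link availability.

```python
from dataclasses import dataclass


@dataclass
class EdgeScenario:
    """Device-side energy comparison; all fields are hypothetical inputs."""
    ops_per_inference: float          # operations for one local inference
    edge_efficiency_ops_per_j: float  # e.g. 1 TOPS/W == 1e12 operations per joule
    payload_bits: float               # raw data to transmit if offloading
    radio_energy_j_per_bit: float     # transmission energy of the link

    def local_energy_j(self) -> float:
        return self.ops_per_inference / self.edge_efficiency_ops_per_j

    def offload_energy_j(self) -> float:
        # Radio cost only; cloud compute energy is ignored in this sketch.
        return self.payload_bits * self.radio_energy_j_per_bit

    def prefer_local(self) -> bool:
        return self.local_energy_j() <= self.offload_energy_j()


# Small model, bulky payload (e.g. a 100 kB image): local wins.
camera = EdgeScenario(1e8, 1e12, 8e5, 1e-7)
# Heavy model on an inefficient device, tiny payload: offloading wins.
sensor = EdgeScenario(1e11, 1e11, 1e3, 1e-7)
for name, s in (("camera", camera), ("sensor", sensor)):
    print(f"{name}: local {s.local_energy_j():.1e} J, "
          f"offload {s.offload_energy_j():.1e} J, local preferred: {s.prefer_local()}")
```

The answer flips with the workload, the device efficiency and the link, which is why the text frames the optimum as time-varying and multi-objective rather than fixed.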

AI accelerators are crucial for improving the efficiency and performance of existing systems (at the cost of greater software complexity, as described in the next challenge, although one goal will be to automate this process). For the training phase, the large number of variable-precision computations requires accelerators with efficient memory access and large multi-engine compute structures. In this phase, large storage areas containing the training instances must be accessed. The inference phase, by contrast, requires low-power, efficient implementations with closely interconnected computation and memory. In this phase, efficient communication between storage (i.e., the synapses in a neuromorphic architecture) and computing elements (the neurons) is paramount to ensure good performance. Again, it will be essential to balance the need against the cost of the associated solution. For edge and power-efficient devices, ultra-dense technologies may not be required: cost and power efficiency may matter more than raw computational performance. It is also important to develop better tools and algorithms to reduce the size and computational complexity of Deep Neural Networks so that efficient AI applications can be executed at the edge.

Other architectures, such as neuromorphic ones, need further investigation to find their use-case sweet spot. One key element is the need to save the neural network state after the training phase, since reinitializing after switch-off would increase overall energy consumption. The human brain never stops.

Co-optimization of software and hardware is also crucial to explore more advanced trade-offs. Indeed, AI, and especially DL, requires optimized hardware support to be realized efficiently. Emerging computing paradigms that mimic synapses using unsupervised learning rules such as STDP (spike-timing-dependent plasticity) might change the game by offering learning capabilities at relatively low hardware cost and without needing access to large databases. Instead of being realized with ALUs and digital operators, STDP can be realized by the physics of some materials, such as those used in non-volatile memories. These novel approaches need to be supported by appropriate SW tools to become viable alternatives to existing approaches.
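To show why STDP needs no large database, the sketch below implements the classic pair-based STDP rule in software: a synapse is strengthened when the presynaptic spike precedes the postsynaptic one and weakened otherwise, with an exponential dependence on the spike-time difference, so each update uses only locally available spike times. The amplitudes, time constants, all-to-all spike pairing and weight bounds are illustrative assumptions, not values from the text; in the material-based implementations mentioned above, device physics would play the role of this arithmetic.

```python
import math
from typing import Sequence

# ASSUMPTION: illustrative pair-based STDP parameters.
A_PLUS, A_MINUS = 0.01, 0.012     # potentiation / depression amplitudes
TAU_PLUS, TAU_MINUS = 20.0, 20.0  # time constants in milliseconds


def stdp_dw(t_pre_ms: float, t_post_ms: float) -> float:
    """Weight change for one pre/post spike pair.
    Pre before post (causal) -> potentiation; post before pre -> depression."""
    dt = t_post_ms - t_pre_ms
    if dt > 0:
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0


def apply_stdp(w: float, pre_spikes: Sequence[float], post_spikes: Sequence[float],
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Sum the updates over all pre/post pairs, then clip the weight."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_dw(t_pre, t_post)
    return min(w_max, max(w_min, w))


# Presynaptic spikes repeatedly lead postsynaptic ones by 5 ms.
print(apply_stdp(0.5, pre_spikes=[10.0, 50.0], post_spikes=[15.0, 55.0]))
```

Consistently causal spike timing strengthens the synapse, as in the usage example, while the reverse ordering would weaken it; no labelled dataset or global error signal is involved.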

Developing solutions for AI at the edge (e.g., for self-driving vehicles, personal assistants, and robots) is more in line with European requirements (privacy, safety) and know-how (embedded systems). Solutions at the extreme edge (small sensors, etc.) will require even more efficient computing systems because of their low cost and ultra-low power requirements.

2.1.5.4 Major Challenge 2: Managing the increasing complexity of systems

State of the art

The increasing complexity of electronic embedded systems, hardware and software algorithms has a significant impact on the design of applications, engineering lifecycle and the ecosystems involved in the product and service development value chain.

This complexity results from incorporating hardware, software and connectivity into systems, and from designing these systems to process and exchange data and information without addressing architectural aspects. As a result, architectural aspects such as optimizing resource use, distributing tasks, dynamically allocating functions, and providing interoperability, common interfaces and modular concepts that allow for scalability are typically not sufficiently considered.

The complexity of achieving higher automation levels in vehicles and industrial systems is best illustrated by the challenges that must be addressed when increasing the number of sensors and actuators, which offer a variety of modalities and higher resolutions. These sensors and actuators are complemented by ever more complex processing algorithms to handle the large volume of rich sensor data. The trend is reflected in the semiconductor value across different vehicle types. While a conventional automobile contains roughly $330 of semiconductor content, a hybrid electric vehicle with a full sensor platform can contain up to $1,000 of semiconductor content and 3,500 semiconductor devices. Over the past decade, the contribution of electronics to vehicle cost has increased from 18-20% to about 40-45%, according to Lam Research. These numbers will increase further with the introduction of autonomous, connected, and electric vehicles that use AI-based HW/SW components.