5 APPENDIX A: RECOMMENDATIONS AND ROADMAP FOR EUROPEAN SOVEREIGNTY IN OPEN SOURCE HARDWARE, SOFTWARE, AND RISC-V TECHNOLOGIES

The use of open source hardware and software drastically lowers the barrier to designing innovative Systems-on-Chip (SoCs), an area in which Europe excels today. Open source is a disruptive approach, and strong competences in this field are being developed worldwide, for example in China, the US and India. For Europe to become a global player in the field and ensure sovereignty, it is important to put in place a roadmap and initiatives that consolidate ongoing European activities and develop new technology solutions for key markets such as automotive, industrial automation, communications, health and aeronautics/defence. Notably, open source can be used as a sovereignty tool, providing Europe with an alternative to licensing IPs from non-EU third parties. A key success criterion is for Europe to develop a fully fledged open source ecosystem so that a European fork (i.e., a fully equivalent variant of a given technology) is possible if necessary.

The realization of such an ecosystem requires a radical change in working across the board with leadership and contribution from major European industrial and research players and other value chain actors. An approach similar to the European Processor Initiative which brings together key technology providers and users in the supply chain is needed, but with the goal of producing open source IP. This report suggests the following way forward to foster the development of an open source ecosystem in Europe:

- Build a critical mass of European open-source hardware/software that will drive competitiveness, enable greater and more agile innovation, and deliver greater economic efficiency. At the same time, it will remove reliance on non-EU developed technologies where there are increasing concerns over security and safety. In building this critical mass, it is important to proceed strategically by encouraging the design of scalable and re-usable technology.

- Develop both open source hardware and software as they are interdependent. An open-source approach to software such as EDA and CAD [2] tools can serve as a catalyst for innovation in open-source hardware. To ensure a thriving ecosystem, it is necessary to have accessible software.

- Address cross-cutting issues. In order to enable verticalisation, it is important to address a number of cross-cutting issues such as scalability, certification for safety in different application domains, and security. This requires consideration at both the component level and system level.

- Cultivate innovation facilitated through funding from the public sector that is conditional on an open-source approach. The public sector can also have a role in aiding the dissemination of open-source hardware through the deployment of design platforms that would share available IP especially those that were supported through public funds.

- Engage with the open-source community. Links with initiatives such as OpenHW Group, CHIPS Alliance, RISC-V International etc. should be strengthened to get and maintain industrial-grade solutions whilst encouraging standardisation where appropriate and keeping links with global open source communities.

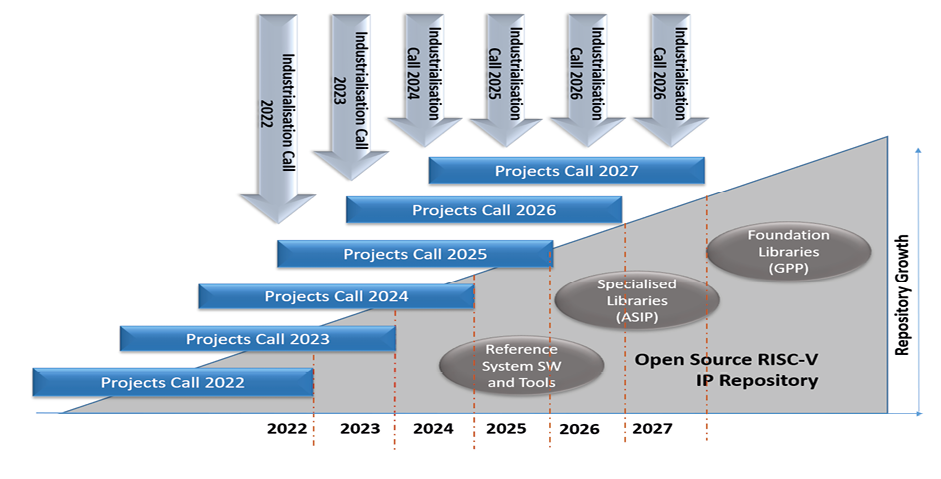

To this end, the Working Group that is at the origin of this report has defined a strategic roadmap considering short (2-5 years), medium (5-10 years) and long term (>10 years) goals. The success of the roadmap depends on European actors working closely together to create a critical mass of activities that enhance and expand the European open source community and its influence on the world stage. The Working Group advocates that this roadmap of activities is supported via coordinated European level actions to avoid fragmentation and ensure that Europe retains technological sovereignty in key sectors.

Europe has a core competence in designing innovative Systems-on-Chip (SoCs) for key application sectors such as automotive, industrial automation, communications, health and defense. The increasing adoption of open source, however, is potentially disruptive as it drastically lowers the barrier to design and to innovation. Nations such as China, the US and India are already investing heavily in open source HW and SW to remain competitive and maintain sovereignty in key sectors where there are increasing concerns over security and safety.

Europe needs to respond by creating a critical mass in open source. The development of a strong European open source ecosystem will also drive competitiveness as it enables greater and more agile innovation at much lower cost. However, to achieve this there is a need to align and coordinate activities to bring key European actors together.

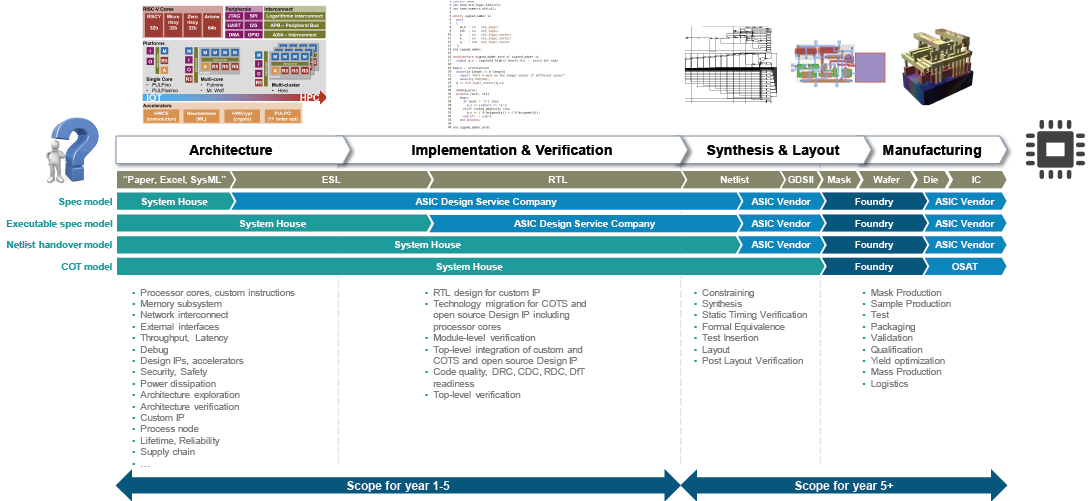

This document proposes a high-level roadmap for Open Source HW/SW and RISC-V based Intellectual Property (IP) blocks which are of common interest to many European companies and organizations, considering design IP and supporting EDA tools. The aim of the roadmap is to consolidate ongoing European activities through the presentation of a number of concrete ideas, topics and priority areas for IP-blocks and future research in order to build sovereignty and competitive advantage in Europe towards future energy-efficient processor design and usage.

Chapter 2 presents an initial approach to open source, shows how sovereignty can be addressed via open source, and what Europe needs to do to create a strong and competitive critical mass in this area. This is followed by a roadmap for open-source hardware in Europe in Chapter 3, which also highlights the necessary toolbox of open-source IP for non-proprietary hardware to thrive. Chapter 4 presents the supporting software required for the ecosystem to flourish. Chapter 5 discusses some cross-cutting issues – such as scalability, safety and security – for a potential RISC-V processor in common use. Chapter 6 identifies gaps in the current European ecosystem for both hardware and software. In Chapter 7, some important elements of the proposed roadmap are discussed. In turn, Chapter 8 lists the short-, mid- and long-term needs for the different elements of the proposed roadmap. Chapter 9 presents a series of recommendations of both a global and specific nature. Chapter 10 lists a series of horizontal and vertical activities to address the needs of open-source development within Europe. Some concluding remarks and two annexes complete the report.

5.3.1 Introduction

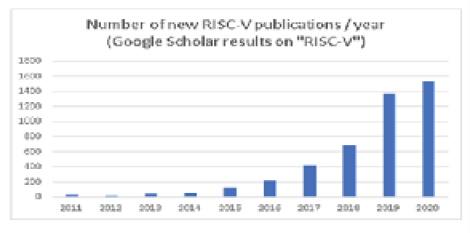

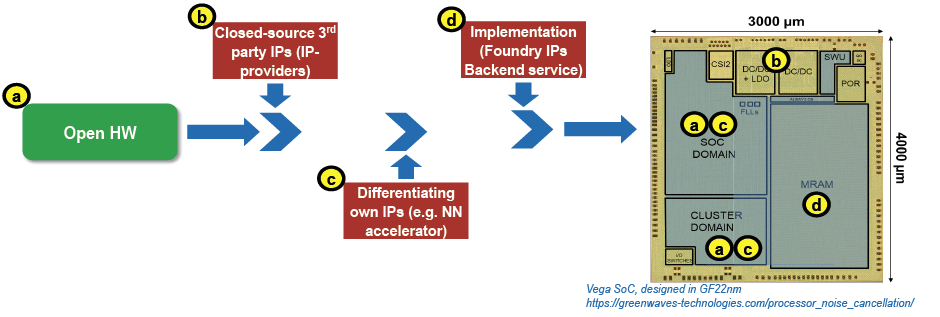

The interest in open source is rapidly rising as shown in Figure 5.1 with the number of published academic papers exploding in the area of RISC-V. This presents a major discontinuity for SoC design. The use of open source drastically lowers the barrier to design innovative SoCs which is an area that Europe excels in and strongly depends on in key application sectors. Use of open source also allows a research center or company to focus its R&D effort on innovation, leveraging an ecosystem of pre-validated IP that can be freely assembled and modified. This is disruptive in a market where traditionally a set of IPs is designed in house at a cost that is only accessible to a few companies, or where alternatively 3rd party IPs are licensed by companies with constraints on innovation due to architecture. A key advantage of open source is that it provides a framework that allows academia and companies to cooperate seamlessly, leading to much faster industrialization of research work.

There are many parallels between open source hardware and open source software. There have been key exemplars in the software domain such as GNU and Linux. The latter started as an educational project in 1991 and has now become dominant in several verticals (High Performance Computing (HPC), embedded computing, servers, etc.) in synergy with open source infrastructure such as TCP/IP network stacks.

While there are many commonalities in terms of benefits, pitfalls and the potential to transform the industry, there are also significant differences between open source hardware and open source software, which stem from the physical embodiment of any Integrated Circuit (IC) design via a costly and time-consuming process.

The ecosystem is wide-ranging and diverse, including the semiconductor industry, verticals and system integrators, SMEs, service providers, CAD tools providers, open source communities, academics and research. It is an area with many opportunities to create innovative start-ups and service offers. The benefits and attraction of adopting open source depend on the type of actor and their role within the value chain, and can include creating innovative products with lower costs and access barriers, and providing a faster path to innovation.

Annex A gives more details on open source, including potential benefits, the open source ecosystem, key players, business strategies, licensing models, and licensing approaches.

5.3.2 Current and growing market for RISC-V

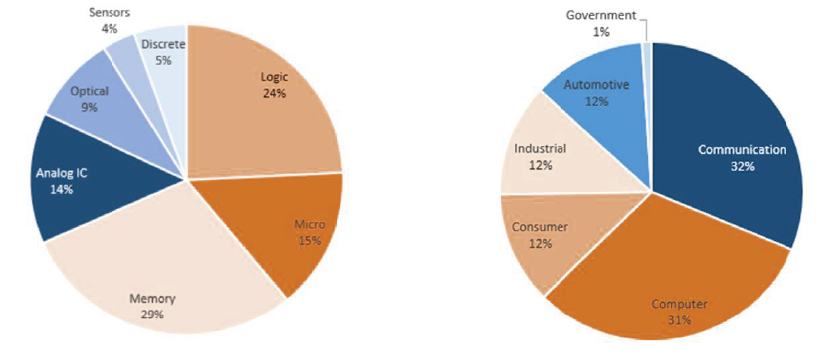

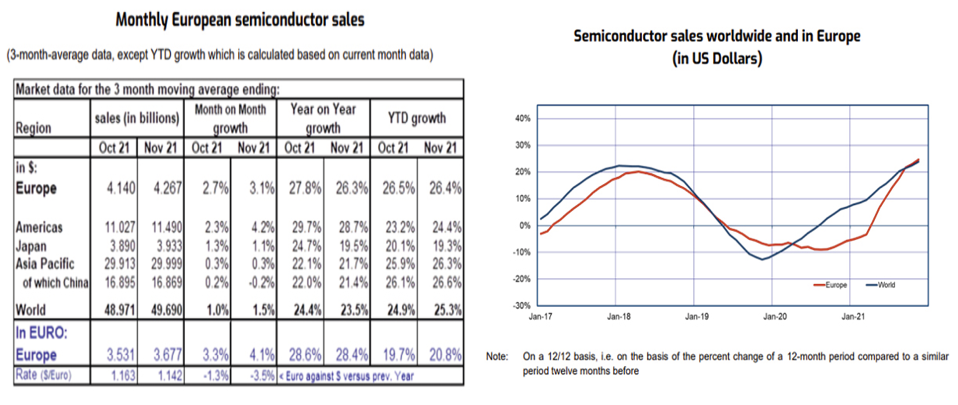

Annex B presents an overview of the market trends in the global chips market. The value of this market in 2021 was around USD 550 billion and is expected to grow to USD 1 trillion in 2030.
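The two market values quoted above imply an average yearly growth rate, which can be made explicit with the standard compound annual growth rate (CAGR) formula. The sketch below is only an illustration derived from those two figures: the resulting rate is not a number taken from Annex B, and the function name `cagr` is a hypothetical helper introduced here.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values that are `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the text, in billions of USD: 550 (2021) and 1000 (2030).
implied_rate = cagr(550.0, 1000.0, 2030 - 2021)
print(f"Implied CAGR 2021-2030: {implied_rate:.1%}")
```

Under these assumptions the implied growth is a little under 7% per year over the nine-year period.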

RISC-based solutions are being used in a growing range of applications and the uptake of RISC-V is expected to grow rapidly.

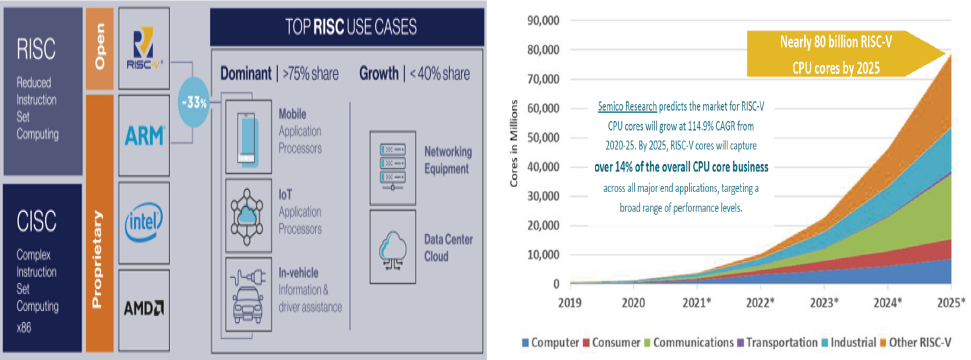

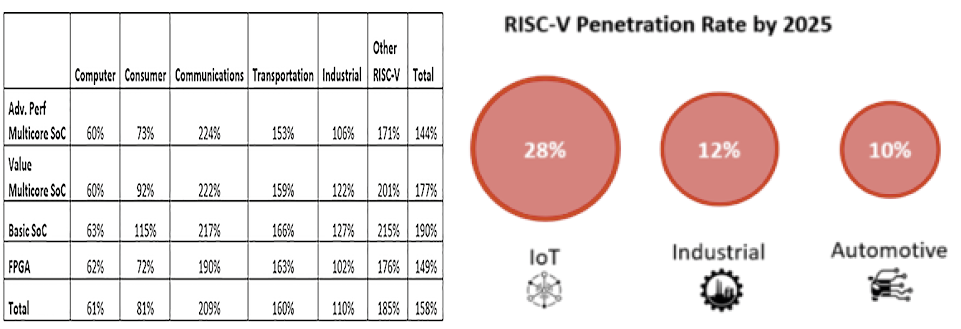

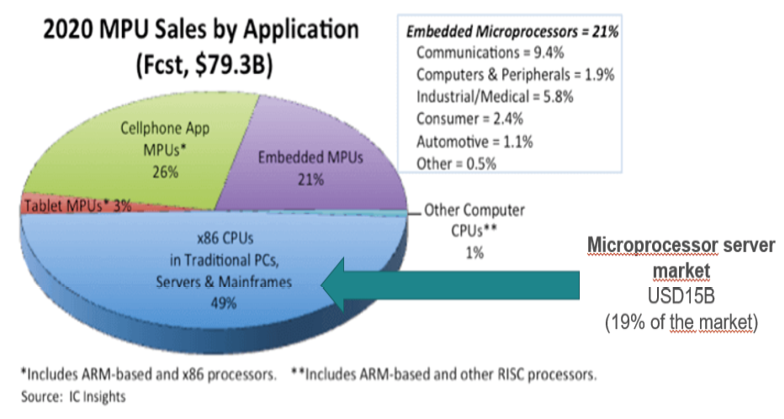

Within these expanding markets, RISC-based architectures have become important. RISC platforms have long enabled mobile phone and small-device consumer electronics vendors to deliver attractive price, performance and energy use compared to complex instruction set computing (CISC)-based solutions such as those of Intel and AMD. Increasingly, RISC-based solutions are also being used to power servers, moving beyond their small-device origins [3], as shown in Figure 5.2, with a rapid growth in the uptake of RISC-V [4].

The compound annual growth rate for RISC-V from 2018 to 2025 is given in Figure 5.2 [5]. In key European markets, the RISC-V penetration rate is expected to grow rapidly [6]. According to the 2020 “Wilson Research Group Functional Verification” study [7], 23% of projects in both the ASIC and FPGA spaces incorporated at least one RISC-V processor.

The current RISC-V instruction set is very popular with research and technology organisations (RTOs) and academics, and is also attracting vertical companies that want to develop their own in-house semiconductors (Western Digital [8], Alibaba [9], …). This has led to a number of start-up companies adopting RISC-V to develop specific processors or processor families to sell to the market. Some European success stories are:

- Greenwaves Technologies (founded in 2014): ultra-low power and AI-enabled GAP processor family for energy-constrained products such as hearables, wearables, IoT and medical monitoring products.

- Codasip (founded in 2014): their processor core portfolio combines two complementary processor families, Codasip RISC-V Processors and SweRV Cores, to cover a wide range of design needs. Their business consists of selling the standard cores they have developed and customizing those cores to the needs of the customer.

- Cobham Gaisler (founded in 2001): NOEL-V processor family based on 32-bit and 64-bit RISC-V including fault-tolerant implementation and Hypervisor extension, focusing on application of processors in harsh environments.

5.3.3 European Strategy

Open source shows lots of promise to boost the economy and welfare in our societies, but this is a non-trivial task. As illustrated with the RISC-V example, a generally agreed mechanism is to use open source to create or enlarge markets and to use proximity, prime-mover and other effects to benefit preferentially from those new markets, combining mostly publicly funded open source and privately funded proprietary offerings. This is well known already for software, and the aim is to replicate this for hardware, allowing the research and industry community to focus on new innovative points, rather than on re-developing a complex framework such as a processor and its ecosystem (compiler, interfaces, ...). There is no way to "protect IP" while still building real open source other than by providing adequate financing for Europe-based entities who work with open source as well as support for regional initiatives and leadership in identifying practical problems that can be solved. There is also a need to help connect open source vendors with customers in need of the innovation. Speed from concept to product is also key to stay ahead of non-European competitors. This requires foundries and EU financing to build an ecosystem that can get from concept to products quickly in order to maintain European sovereignty.

Europe is starting initiatives in terms of public adoption of Linux or Matrix in governmental institutions and other areas, and hardware presents a unique opportunity where the ecosystem is immature enough that it could really make a difference. Education is a key factor which could help. Talented and educated Europeans tend to stay in Europe, so significantly boosting open source hardware education could be a way to seed a strong ecosystem. RISC-V is already part of the curriculum in many universities and Europe could further fund open source hardware education at an even earlier level and generally strongly encourage universities to produce open source.

The success of an open source project requires efforts going beyond putting some source code in a public repository. The project needs to be attractive and give confidence to its potential users. It is said that the first 15 minutes are the most important. If a potential user cannot easily install the software (in the case of open source software), or does not understand the structure, the code and the parameters, it is unlikely that the project will be used. Having good documentation and well-written code is important. This requires time and effort to clean the code before it is made accessible. It is also necessary to support the code during its lifetime, which requires a team to quickly answer comments or bug reports from users and provide updates and new releases. All modifications should be thoroughly checked and verified, preferably via automated tools. Without this level of support, potential users will have little trust in the project. There is thus a need for support personnel and computing resources. This is a challenge for European open source projects, as the code needs to be maintained after the official end of the project. Only if a project is attractive, provides innovation, is useful and is trusted will it attract more and more users and backers who can contribute, correct bugs, etc. At this point it can be self-supported by the community with less effort from its initial contributors. The efforts and resources required to support an open source project should not be under-estimated. The project also needs to be easily accessible and hosted in a reliable industrial environment such as the Eclipse Foundation, where the OpenHW Group can provide support for ensuring the success of an open source project.

Success of open source depends not only on the IP blocks but also on the documentation, support and maintenance provided. This requires significant resources and personnel.

It is important to help steer Europe towards open source hardware and make sure that knowledge is shared, accessible, maintained and supported on a long-term basis. Barriers to collaboration need to be eliminated. Priorities should be discussed considering what is happening elsewhere in the world and to maximize the value generated within Europe there is a need to build value chains, develop a good implementation plan and provide long-term maintenance and support.

A more detailed economic view on the impact of Open Source HW and SW on technological independence, competitiveness and innovation in Europe has been documented in a report edited by Fraunhofer ISI and OpenForum Europe [17].

5.3.4 Maintaining Europe’s Sovereignty and Competitiveness

A key market challenge is that most of the current open source hardware technology resources are located outside of Europe, especially in the United States, in China (which has strongly endorsed RISC-V based open source cores) and India which has a national project. This raises the question: how can Europe maintain sovereignty and stay competitive in a rapidly developing open source market? The traditional value of the European landscape lies in its diversity and collaborative nature which reflects the nature of open source very well. EU funding looks favorably on open source solutions and there are well-established educational institutions which are of a less litigious / patent-heavy nature as compared to US private universities. The diversified industry within Europe is also a strength that can be leveraged to push the EU’s competitiveness in open hardware.

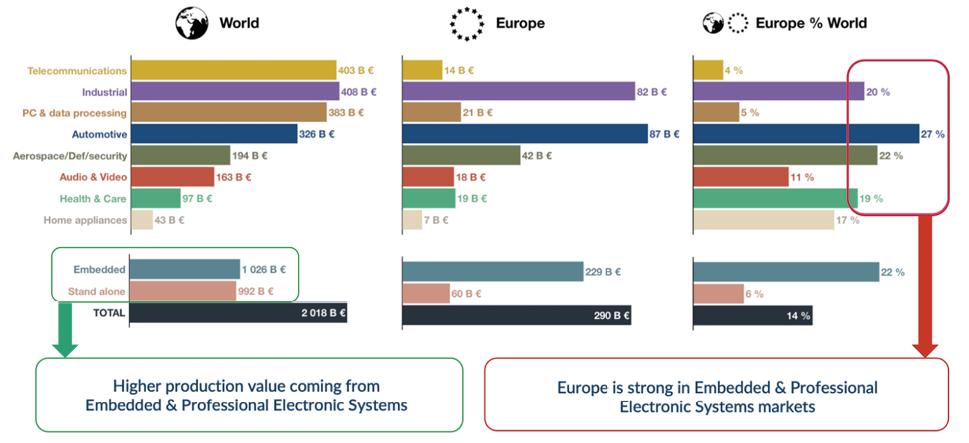

To get sufficient return on investment for Europe, a focus should be given to application domains where there is stronger impact: automotive, industrial automation, communications, health, defense, critical systems, IoT, cybersecurity, etc. These application domains convey specific requirements towards open source technologies: safety, security, reliability, power and communication efficiency.

A key to sovereignty in the context of open source is the involvement of sufficient European actors in the governance of the various projects (CHIPS Alliance, OpenHW Group, etc.) and the achievement of a critical mass of EU contributors to these projects, so that a fork could be pursued if one is forced onto EU contributing members.

Competitiveness in the context of open source should be looked at in a reversed manner, that is, how much competitiveness European players would lose by not adopting open source. Open source is becoming a major contributor to innovation and economic efficiency of the players who broadly adopt the approach. The massive on-going adoption of open source in China, from leading companies to start-ups and public research centers, with strong support from both the central and regional authorities, is a very interesting trend in China’s strategy to catch up in semiconductors.

Recommendation – Calls by the Key Digital Technologies Joint Undertaking (KDT JU) should bring benefits to open hardware. Use of this hardware by proprietary demonstrations (including software, other hardware, mechanical systems, in key sectors automotive, industrial automation, etc.) would be beneficial for pushing acceptance of open hardware. Calls could formulate a maximum rate (e.g. 30% of volume) for such demonstrations. It should be required that proposals do not merely implement open interfaces (e.g. RISC-V) but also release implementations (e.g. at least 50% of volume). It is reasonable to demand that the open source licenses of such released implementations allow combination with proprietary blocks.

While this document focuses on open source IC design IPs, the major control point from a sovereignty standpoint is EDA (Electronic Design Automation) tools, which are strictly controlled by the US, even for process nodes for which Europe has sovereign foundries. Europe has significant research capabilities in this area, which it regularly translates into start-ups for whom the only exit path currently is to be acquired by US-controlled firms. Open source EDA suites exist but are limited to process nodes for which the PDK is considered a commodity (90nm and above). A possible compromise approach, complementary to the development of open source EDA, is to emphasize compatibility of open source IP design with proprietary, closed source design tools and flows. This objective can be achieved with efforts on two fronts: (I) promote licensing agreement templates for commercial EDA tools that explicitly allow the development of open hardware, which is especially important when open hardware is developed by academic partners in the context of EU-funded R&D actions, as current academic licenses (e.g., as negotiated by EUROPRACTICE) often cannot be used for commercial industrial activities; and (II) even more importantly, promote open standards for data exchange of the inputs and outputs of commercial EDA tools (e.g., gate-level netlists, technology libraries). Proprietary specifications and file exchange formats can hinder the use of industry-strength tools for the development of open source hardware. This matter requires a specific in-depth analysis to propose an actionable solution.

5.3.5 Develop European Capability to Fork if Needed

Open source also brings more protection against export control restrictions and trade wars, as was illustrated in 2019 when the US Administration banned Huawei from integrating Google proprietary apps in their Android devices. However, Huawei was not banned from integrating Android itself, as it is released as open source. Regarding US export rules, a thorough analysis is provided by the Linux Foundation [18].

Even for technologies developed in other parts of the world that have been open sourced and adopted in Europe at a later stage, the ability to potentially “fork” i.e., create a fully equivalent variant of a given technology is a critical capability from a digital sovereignty perspective. Should another geopolitical block decide to disrupt open source sharing by preventing the use of future open source IPs by EU players, the EU would need to carry on those developments with EU contributors only, or at least without the contribution of the adversary block. For this there needs to be a critical mass of European contributors available to take on the job if necessary.

The realization of such a critical mass will require an across-the-board change of working, with leadership and contribution from major European players (industrial and research) as well as a myriad other contributors. To achieve this there is a need to build or take part in sustainable open source communities (OpenHW Group, CHIPS Alliance, etc.) to get and maintain industrial-grade solutions. Care needs to be taken to avoid fragmentation (creating too many communities) or purely European communities with a disconnection from global innovation. A challenge is that current communities are young and essentially deliver processor cores and related SW toolchains. They need to extend their offer to richer catalogues including high-end processors, interconnects, peripherals, accelerators, Operating Systems and SW stacks, IDE and SDK, extensive documentation, etc.

Open source hardware targeting ASIC implementations requires software tools for implementation where licensing costs for a single implementation quickly amount to several hundred thousand Euro. There is thus a need for high quality open source EDA tools supporting industrial-grade open source IP cores. Europe also has a low footprint in the world of CAD tools, which are critical assets to design and deliver electronics solutions. Recent open source CAD tool initiatives are opportunities to bridge this gap.

Considering processors, involvement in RISC-V International should be encouraged in order to influence and comply with the “root” specifications. RISC-V standardizes the instruction set, but additional fields will need to be addressed in future for interoperability: SoC interconnect, chiplet interfaces, turnkey trust and cybersecurity, safety primitives, etc. Europe should promote open source initiatives that would help maximize collaborations and reach a significant European critical mass. This is a key issue if Europe is to compete with China and the USA. A significant portion of the intellectual property produced in these European initiatives should be delivered as open source, so that European actors can exploit these results.

5.4.1 Processors (RISC-V, beyond RISC-V, ultra-low power and high-end)

The Working Group has identified the key strategic needs for the development and support of:

1. a range of different domain focused processors,

2. the IP required to build complete SoCs, and

3. the corresponding software ecosystem(s) for both digital design tools and software development.

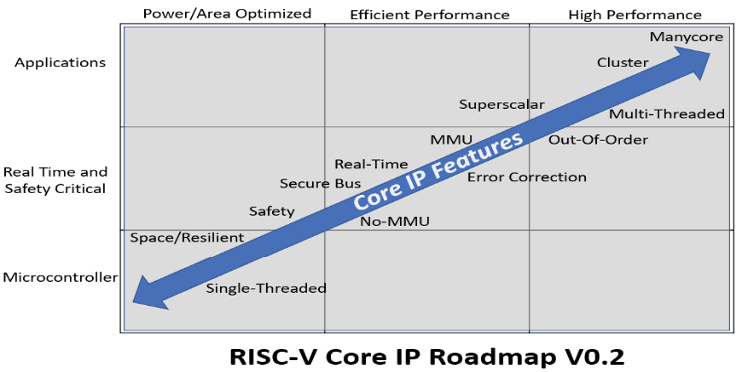

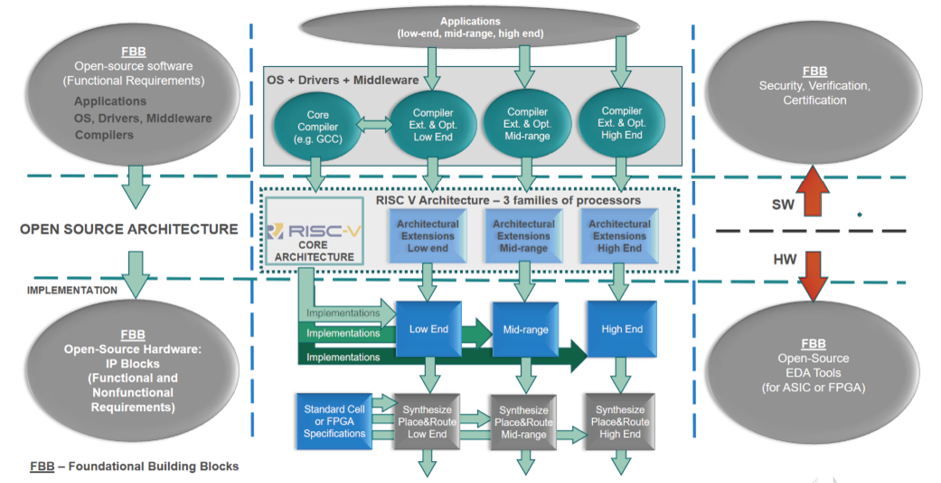

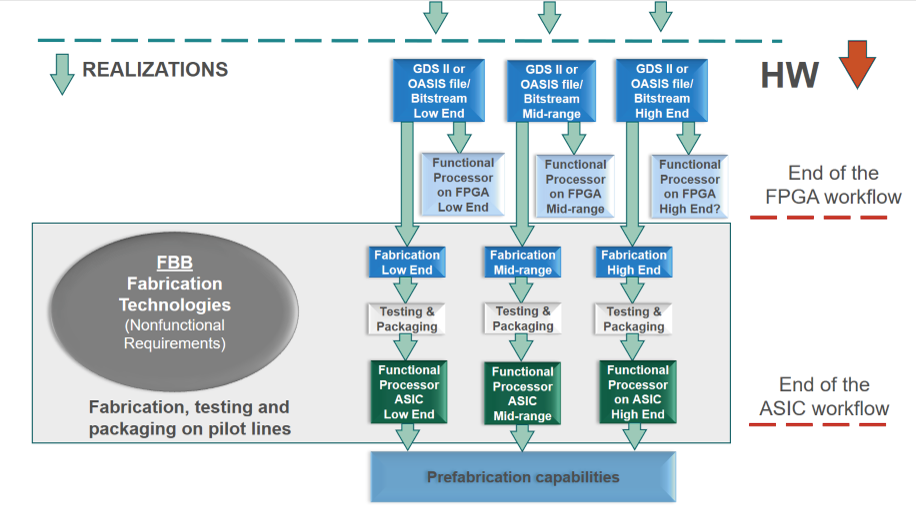

To align the software roadmap to the hardware IP core development roadmap, efforts should be focused on supporting RISC-V implementations that correspond to the RV64GC and RV32GC set of extensions. At the same time the creation/adoption of RISC-V profiles (microprocessor configurations) should be encouraged in the community. To scope this there is a need for an overall roadmap for RISC-V microprocessor core IP for specific market focus areas. A challenge is that microprocessor core IP comes in many shapes and sizes with differing requirements to meet the needs of applications ranging from 'toasters to supercomputers' as shown in Figure 5.4. In order to pursue this the Working Group has concentrated on a subset of this broader landscape whilst leveraging the underlying logic and thought process.
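To make the RV64GC and RV32GC targets concrete, the following minimal sketch expands an ISA string into its individual extensions. It relies on the RISC-V convention that "G" abbreviates the general-purpose combination IMAFD together with Zicsr and Zifencei. The function name `expand_isa` is a hypothetical helper, and the parser is deliberately simplified: it only handles single-letter extensions and does not cover underscore-separated multi-letter extensions or version numbers.

```python
import re
from typing import List, Tuple

# "G" is shorthand for the general-purpose set IMAFD plus Zicsr and Zifencei.
G_EXPANSION = ["I", "M", "A", "F", "D", "Zicsr", "Zifencei"]


def expand_isa(isa: str) -> Tuple[int, List[str]]:
    """Return (register width, extension list) for strings such as 'RV64GC'."""
    match = re.fullmatch(r"RV(32|64)([A-Za-z]+)", isa)
    if match is None:
        raise ValueError(f"unsupported ISA string: {isa!r}")
    xlen = int(match.group(1))
    extensions: List[str] = []
    for letter in match.group(2).upper():
        # Expand the "G" abbreviation, keep every other letter as-is.
        for ext in (G_EXPANSION if letter == "G" else [letter]):
            if ext not in extensions:
                extensions.append(ext)
    return xlen, extensions


print(expand_isa("RV64GC"))
print(expand_isa("RV32IM"))
```

A software toolchain or profile definition would need a check of this kind to decide whether a given core implementation covers the extensions that a binary was compiled for.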

To be successful, the IP roadmap must encompass attributes such as performance, scalability and software compatibility, and deliver high levels of architectural reuse over many years of robust product creation. The focus has been on microprocessor core IP that drives system solutions ranging from edge IoT devices to manycore compute platforms, which are key to many European applications. This needs to meet the challenges of scalability and must be sustainable in years to come. At the same time, software and digital design tooling need to be developed to support the foundational RISC-V IP. A key aim has been to leverage global open source hardware and software projects to maximize European reuse, avoid duplication and strategically direct investment.

5.4.2 Accelerators – Domain Specific Architectures

Accelerators are a key need for many application domains, as meeting non-functional requirements (e.g., performance, energy efficiency, etc.) is often difficult or impossible using general-purpose processor instruction sets such as RV32IM or RV64GC. This is true, for example, in machine learning and cryptography, but it also applies to high-performance storage and communications applications. The use of extended instruction sets to enable more parallel processing, such as RISC-V “P” for Single Instruction Multiple Data (SIMD) processing and RISC-V “V” for full-scale vector processing, can provide significant performance and/or efficiency gains. However, even higher gains are achievable in many cases by adding application domain-specific features to a hardware architecture.

This has led to the concept of Domain-Specific Architectures (DSA), which was highlighted by computer architecture pioneers John Hennessy and David Patterson in their 2018 Turing Award Lecture [19] (the Turing Award is often called the “Nobel Prize” of computer science and computer engineering). Here, Domain-Specific Architectures were noted as one of the major opportunities for further advances in computer architecture to meet future non-functional targets.

Accelerators are one approach to implementing a DSA. They do not provide all of a system’s functionality, but instead assist more general-purpose processors through the improved execution of selected critical functions. In many cases, this “improvement” means “faster” execution, leading to the name “accelerator”. However, it can also mean “more energy efficient” or “more secure”, depending on the requirements. In order for the accelerator to exceed the capabilities of a general-purpose system on a similar semiconductor process node, the micro-architecture of the accelerator is highly specialized and very specific to the actual algorithm it implements. For example, machine-learning accelerators dealing with efficient inference for Artificial Neural Networks will have very different micro-architectures depending on whether they aim to operate on dense or sparse networks. They, in turn, will have very different architectures from accelerators dealing, e.g., with 3D stereo vision computations in computer vision for autonomous driving, or quantum-computing-resistant cryptography for secure communications.

In most cases, accelerators rely on conventional digital logic and are designed, implemented and verified as is usual for the target technology (e.g., FPGA, ASIC). They would thus profit immediately from all open source advances in these areas, e.g., open source EDA tools. Open sourcing the accelerator designs themselves will benefit the ecosystem by encouraging reuse and the standardization of testing and verification procedures, and by inducing more academic interest and research. Some open sourced accelerators have seen widespread use. Successful cases include NVIDIA’s NVDLA [20] Machine Learning inference accelerator and the 100G hardware-accelerated network stack from ETHZ [21].

The opportunity for much higher impact when using open source for accelerators lies not so much in the accelerators themselves, but in the hardware and software interfaces and infrastructures enabling their use.

Accelerators are integrated into the surrounding computing system typically in one of three approaches (although others exist, e.g., network-attached accelerator appliance).

Custom Instructions: At the lowest level, the accelerator functions can be tightly integrated with an existing processor pipeline and are accessed by issuing special instructions, sometimes called custom instructions or ISA eXtensions (ISAX). This model is suitable for accelerator functions that require or produce only a limited amount of data when operating and can thus easily source their operands from, and sink their results to, one of the processor’s existing register files (e.g., general-purpose, floating-point or SIMD). The key benefit of this approach is the generally very low overhead when interacting with the accelerator from software. This approach has been exploited for decades in processors such as the proprietary Tensilica and ARC cores and is also used, for example, in Intel’s AES-NI instructions for accelerating Advanced Encryption Standard (AES) cryptography. In the RISC-V domain, companies such as Andes Technology (Taiwan) emphasize the easy extensibility of their cores with custom functionality. In the open source area, ETHZ has implemented their Xpulp [22] instructions, showing significant performance gains and code-size reduction over the base RISC-V ISA.

Custom instructions generally require deep integration into a processor’s base microarchitecture, which is often difficult with many proprietary offerings, e.g., from Arm. In the open source RISC-V hardware ecosystem, though, custom instructions have become a very effective means towards Hennessy & Patterson’s Domain-Specific Architectures. Examples of custom functionality added in this manner include Finite-Field Arithmetic for Post-Quantum Cryptography [23], digital signal processing [24], and machine learning [25].
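As an illustration of what such a custom instruction looks like at the encoding level, the sketch below packs and unpacks a 32-bit R-type instruction word on the "custom-0" major opcode, which the RISC-V base specification sets aside for custom extensions. The helper names and the example operand registers are hypothetical, and the meaning attached to funct7/funct3 would be defined by the accelerator designer, not by the ISA.

```python
from typing import Dict

CUSTOM0_OPCODE = 0b0001011  # "custom-0" major opcode, set aside for custom extensions


def encode_r_type(funct7: int, rs2: int, rs1: int, funct3: int, rd: int,
                  opcode: int = CUSTOM0_OPCODE) -> int:
    """Pack the R-type fields into a 32-bit instruction word."""
    fields = (("funct7", funct7, 7), ("rs2", rs2, 5), ("rs1", rs1, 5),
              ("funct3", funct3, 3), ("rd", rd, 5), ("opcode", opcode, 7))
    for name, value, bits in fields:
        if not 0 <= value < (1 << bits):
            raise ValueError(f"{name}={value} does not fit in {bits} bits")
    return ((funct7 << 25) | (rs2 << 20) | (rs1 << 15)
            | (funct3 << 12) | (rd << 7) | opcode)


def decode_r_type(word: int) -> Dict[str, int]:
    """Split a 32-bit instruction word back into its R-type fields."""
    return {
        "funct7": (word >> 25) & 0x7F,
        "rs2": (word >> 20) & 0x1F,
        "rs1": (word >> 15) & 0x1F,
        "funct3": (word >> 12) & 0x7,
        "rd": (word >> 7) & 0x1F,
        "opcode": word & 0x7F,
    }


# Hypothetical custom operation: rd = x10, rs1 = x11, rs2 = x12.
word = encode_r_type(funct7=0, rs2=12, rs1=11, funct3=0, rd=10)
print(hex(word))
print(decode_r_type(word))
```

Because the operands and the result travel through the ordinary register fields, software can invoke the accelerator with a single instruction, which is the low-overhead property described above.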

However, due to their need for deep integration into the base processor, such custom instructions are generally not portable between cores. What would be desirable is a lightweight, flexible interface for the interactions between the base core and the accelerator logic realizing the custom functionality. This interface would need to be bidirectional: for example, instruction decoding would still be performed by the base core, and only selected fields of the instruction would be communicated to the accelerator. The accelerator, in turn, would pass a result to the base core for write-back into the general-purpose register file. Additional functionality required includes access to the program counter computations, to realize custom control flow instructions (this is missing from UCB’s RoCC interface), and to the load/store unit(s) for custom memory instructions. A key aspect of an efficient custom instruction interface will be that the unavoidable overhead (hardware area, delay, energy) is only paid for those features that are actually used. For example, a simple Arithmetic Logic Unit (ALU) compute operation should not require interaction with the program counter or memory access logic. With a standard (de-facto or formal) interface in place, R&D work on tightly integrated accelerators could be ported across different base processors.
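The interface described above can be pictured with a small behavioural model. The sketch below is purely illustrative and does not correspond to any existing standard or to the RoCC interface: all class, field and port names are assumptions made for this example. The base core keeps the decoding and the register file, forwards only selected fields and operand values to the accelerator, writes the returned result back, and wires up optional ports (program counter, memory) only if an attached unit declares that it needs them.

```python
from dataclasses import dataclass
from enum import Flag
from typing import Callable, Dict


class Port(Flag):
    """Optional interface features; names are hypothetical."""
    NONE = 0
    PC = 1       # program-counter access for custom control flow
    MEMORY = 2   # load/store-unit access for custom memory instructions


@dataclass
class Request:
    funct7: int      # selected instruction field forwarded by the base core
    rs1_value: int   # operand values already read from the register file
    rs2_value: int


@dataclass
class Response:
    rd_value: int    # result handed back for write-back


@dataclass
class Accelerator:
    name: str
    ports: Port
    execute: Callable[[Request], Response]


class BaseCore:
    def __init__(self) -> None:
        self.regs = [0] * 32
        self.units: Dict[int, Accelerator] = {}

    def attach(self, funct3: int, unit: Accelerator) -> None:
        self.units[funct3] = unit

    def wired_ports(self) -> Port:
        """Only ports requested by some attached unit cost hardware."""
        wired = Port.NONE
        for unit in self.units.values():
            wired |= unit.ports
        return wired

    def issue(self, funct7: int, funct3: int, rd: int, rs1: int, rs2: int) -> None:
        unit = self.units[funct3]  # decoding stays in the base core
        response = unit.execute(Request(funct7, self.regs[rs1], self.regs[rs2]))
        if rd != 0:                # x0 is hard-wired to zero in RISC-V
            self.regs[rd] = response.rd_value & 0xFFFFFFFF


def abs_diff(request: Request) -> Response:
    """A simple ALU-style operation that needs neither PC nor memory access."""
    return Response(abs(request.rs1_value - request.rs2_value))


core = BaseCore()
core.attach(funct3=0, unit=Accelerator("absdiff", Port.NONE, abs_diff))
core.regs[11], core.regs[12] = 7, 10
core.issue(funct7=0, funct3=0, rd=10, rs1=11, rs2=12)
print(core.regs[10], core.wired_ports())
```

In this model the same `Accelerator` object could be attached to a different `BaseCore` implementation without modification, which is the portability benefit a standard interface aims for.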

Loosely Coupled Accelerators: More complex accelerators that require or produce more data than can easily be provided/accepted by a base processor core are generally not integrated into the core pipeline itself but are coupled more loosely using industry-standard protocols such as Arm AMBA. The communication requirements of the accelerator determine the specific flavour of interface used, which can range from Advanced Peripheral Bus (APB) for low-bandwidth interaction to Advanced High-Performance Bus (AHB)/Advanced eXtensible Interface (AXI) for higher-performance accelerators. These loosely coupled accelerators accept commands from one or more base cores using memory-mapped interactions and are then capable of accessing their input and output data independently using Direct Memory Access (DMA) operations. Since these accelerators are integrated into a system using standard protocols, they are very portable. For example, the open source NVIDIA machine-learning accelerator has been successfully integrated into a number of open source and proprietary Systems-on-Chips.
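The interaction pattern of a loosely coupled accelerator (memory-mapped commands followed by independent DMA transfers) can be sketched as a behavioural model. The register map, the constant names and the trivial "add one to every byte" kernel below are assumptions made purely for illustration; they do not describe any existing accelerator or bus implementation.

```python
# Hypothetical register offsets of the accelerator's memory-mapped interface.
REG_SRC, REG_DST, REG_LEN, REG_CTRL, REG_STATUS = 0x00, 0x04, 0x08, 0x0C, 0x10
CTRL_START = 0x1
STATUS_DONE = 0x1


class DmaAccelerator:
    """Behavioural model: configured through registers, moves data itself."""

    def __init__(self, memory: bytearray):
        self.memory = memory  # system memory reachable through DMA
        self.regs = {REG_SRC: 0, REG_DST: 0, REG_LEN: 0,
                     REG_CTRL: 0, REG_STATUS: 0}

    def write_reg(self, offset: int, value: int) -> None:
        """Memory-mapped write issued by software running on a base core."""
        self.regs[offset] = value
        if offset == REG_CTRL and value & CTRL_START:
            self._run()

    def read_reg(self, offset: int) -> int:
        """Memory-mapped read, e.g. to poll the status register."""
        return self.regs[offset]

    def _run(self) -> None:
        """Fetch input, compute and store output without CPU involvement."""
        src, dst, n = (self.regs[r] for r in (REG_SRC, REG_DST, REG_LEN))
        data = self.memory[src:src + n]                      # DMA read
        result = bytes((b + 1) & 0xFF for b in data)         # placeholder kernel
        self.memory[dst:dst + len(result)] = result          # DMA write
        self.regs[REG_STATUS] = STATUS_DONE
```

Software only writes a handful of registers and polls a status flag; the bulk data never passes through the processor's register file, which is what makes this style suitable for data-heavy accelerators.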

However, for more complex accelerators, the open source ecosystem is much sparser when advanced capabilities are required. Two examples of this are shared-virtual memory and/or cache-coherent interactions between the accelerators and the software-programmable base cores. Both mechanisms can considerably simplify the interaction between software on the base cores and accelerators, especially when more complex data structures (e.g., as used in databases) need to be operated on. To achieve shared-virtual memory, the accelerator and the base core(s) need to share address translations, invalidations, and fault-handling capabilities. The UCB RoCC interface achieves this by leveraging the capabilities of the underlying base core (Rocket, in most cases). However, for better scalability across multiple accelerators, it would be beneficial to have a dedicated Input-Output Memory Management Unit (IOMMU) serving just the accelerators, possibly supported by multiple accelerator-specific Translation-Lookaside Buffers (TLBs) to reduce contention for shared resources even further. No such infrastructure currently exists in an open source fashion. Designers following Hennessy & Patterson’s ideas towards accelerator-intensive systems therefore do not just have to design the (potentially complex) accelerators themselves, but often also have to start “from scratch” when implementing the supporting system-on-chip architecture that allows these accelerators to actually operate. Portable, scalable and easily accessible open source solutions are sorely needed to lower this barrier of entry.
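To make the three shared capabilities (translation, invalidation and fault handling) more concrete, the following minimal sketch models a dedicated IOMMU with one private TLB per accelerator. All class and method names are hypothetical, the page table is a flat dictionary rather than a multi-level structure, and a fault is simply raised as an exception where a real system would notify the operating system.

```python
PAGE_SIZE = 4096


class TranslationFault(Exception):
    """Raised when no mapping exists for the requested virtual page."""


class Iommu:
    """Shared translation service for all accelerators (simplified)."""

    def __init__(self):
        self.page_table = {}  # virtual page number -> physical page number
        self.tlbs = []        # registered accelerator TLBs, for invalidation

    def map(self, vpn: int, ppn: int) -> None:
        self.page_table[vpn] = ppn

    def unmap(self, vpn: int) -> None:
        """Remove a mapping and invalidate it in every accelerator TLB."""
        self.page_table.pop(vpn, None)
        for tlb in self.tlbs:
            tlb.entries.pop(vpn, None)

    def walk(self, vpn: int) -> int:
        """Page-table walk performed on a TLB miss."""
        if vpn not in self.page_table:
            raise TranslationFault(hex(vpn))
        return self.page_table[vpn]


class AcceleratorTlb:
    """Private TLB of one accelerator, reducing contention on the IOMMU."""

    def __init__(self, iommu: Iommu):
        self.iommu = iommu
        self.entries = {}
        self.misses = 0
        iommu.tlbs.append(self)

    def translate(self, vaddr: int) -> int:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn not in self.entries:
            self.misses += 1
            self.entries[vpn] = self.iommu.walk(vpn)
        return self.entries[vpn] * PAGE_SIZE + offset
```

Repeated accesses to the same page are served from the accelerator's own TLB, so only misses and invalidations reach the shared IOMMU.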

Recommendation - Low-level generic and higher-level domain-specific frameworks should be made available in an easily accessible open source manner. For the lower-level frameworks, the goal should be portable and scalable solutions that support the selected model(s) of computation in a lightweight modular manner.

High-Speed DRAM Interfaces: Accelerators are often employed for highly data-intensive problems (e.g., graphics, vision, machine-learning, cryptography) that need to store significant amounts of data themselves, and/or require high-performance access to data via a network. Thus, the availability of high-speed interfaces to Dynamic Random Access Memory (DRAM), ideally even in the form of forward-looking 2.5D/3D memory technologies such as High Bandwidth Memory (HBM), and to a fast network or peripheral busses such as PCI Express, are absolutely crucial for the use of and research into accelerators. In most cases, it does not make sense to design an accelerator if it cannot interact with sufficient amounts of data. Moving up further in the layers of systems architecture, accelerators are not just used today as IP blocks in a system-on-chip, but also as discrete expansion boards added to conventional servers, e.g., for datacenter settings.

Recommendation - To enable easier design and use of novel accelerator designs, open source system-on-chip templates should be developed that provide all the required external (e.g., PCIe, network, memory) and internal interfaces (e.g., Arm AMBA AXI, CHI, DRAM and interrupt controllers, etc.), and into which innovative new accelerator architectures can be easily integrated. These template SoCs could then be fitted to template PCBs providing a suitable mix of IO and memory (as inspired by FPGA development boards) to the custom SoC. Ideally, all of this would be offered in a “one-stop-shop”-like approach, similar to the way academic/SME ASIC tape-outs are submitted to EUROPRACTICE for fabrication, but in this case delivering functional PCIe expansion boards.

This not only applies to Graphics Processing Units (GPUs), as the most common discrete accelerator today, but also to many machine learning accelerators such as Google’s TPU series of devices. This usage mode will become even more common, now that there is progress on the required peripheral interfaces for attaching such boards to a server, specifically: Peripheral Component Interconnect Express (PCIe) has finally picked up again with PCIe Gen4 and Gen5. Not only do these newer versions have higher transfer speeds, they also support the shared-virtual memory and cache-coherent operations between host and the accelerator board, using protocol variants such as Cache Coherent Interconnect for Accelerators (CCIX) or Compute Express Link™ (CXL). Designing and implementing a base board for a PCIe-based accelerator, though, is an extremely complex endeavor. Not just from a systems architecture perspective, but there are also many practical issues, such as dealing with high-frequency signals (and their associated noise and transmission artifacts), providing cooling in the tight space of a server enclosure, and ensuring reliable multi-rail on-board power-supplies.

These issues are much simplified when using one of the many FPGA based prototyping/development boards which have (mostly) solved these problems for the user. High-speed on-chip interfaces are provided by the FPGA vendor as IP blocks, and all of the board-level hardware comes pre-implemented. While these boards are generally not a perfect match to the needs of a specific accelerator (e.g., in terms of the best mix of network ports and memory banks), a reasonable compromise can generally be made choosing from the wide selection of boards provided by the FPGA manufacturers and third-party vendors. This is not available, however, to academic researchers or SMEs that would like to demonstrate their own ASICs as PCIe-attached accelerators. To lower the barrier from idea to usable system in an open source ecosystem, it would be highly desirable if template Printed Circuit Board (PCB) designs like FPGA development boards were easily available, into which accelerator ASICs could easily be inserted, with all of the electrical and integration issues already being taken care of. Providing these templates with well-documented and verified designs will incentivize designers to release their work in the public domain.

Hardware is one aspect of an accelerator, but it also needs to be supported with good software. This can range from generic frameworks, e.g., wrapping task-based accelerator operations using the TaPaSCo26 system, to domain-specific software stacks like Tensor Virtual Machine (TVM)27 for targeting arbitrary machine-learning (ML) accelerators. Combinations of software frameworks are also possible, e.g., using TaPaSCo to launch and orchestrate inference jobs across multiple TVM-programmed ML accelerators 28.

Recommendation - For higher-level frameworks, future open source efforts should be applied to leveraging existing domain-specific solutions. Here, a focus should be on making the existing systems more accessible for developers of new hardware accelerators. Such an effort should not just include the creation of “cookbook”-like documentation, but also scalable code examples that can be easily customized by accelerator developers, without having to invest many person-years of effort to build up the in-house expertise to work with these frameworks.

To enable broader and easier adoption of accelerator-based computing, a two-pronged approach would be most beneficial. Lower-level frameworks, like TaPaSCo, should provide broad support for accelerators operating in different models of computation, e.g., tasks, streams/dataflow, hybrid, Partitioned Global Address Space (PGAS), etc., drawing from the extensive prior work in both theoretical and practical computing fields. Many ideas originating in the scientific and high-performance computing fields, originally intended for supercomputer-scale architectures, have become increasingly applicable to the parallel system-on-chip domain. Lower-level frameworks can be used to provide abstractions to hide the actual hardware-level interactions with accelerators, such as programming control registers, setting up memory maps or copies, synchronizing accelerator and software execution, etc. Application code can then access the accelerator using a high-level, but still domain-independent model of computation, e.g., launching tasks, or setting up streams of data to be processed by the hardware.

Even higher levels of abstraction, with their associated productivity gains, can be achieved by making the newly designed accelerators available from domain-specific frameworks. Examples include the TVM for machine learning, or Halide29 for high-performance image and array processing applications. These systems use stacks of specialized Intermediate Representations (IR) to translate from domain-specific abstractions down to the actual accelerator hardware operations (e.g., TVM employs Relay30, while Halide can use FROST31). Ideally, to make a new accelerator usable in one of the supported domains would just require development of a framework back-end for mapping from the abstract IR operations to the accelerator operations and the provision of an appropriate cost-model, to allow the automatic optimization passes included in many of these domain-specific frameworks to perform their work.
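The two ingredients named above, a back-end mapping from abstract IR operations to accelerator operations and a cost model that guides automatic optimization, can be sketched in a few lines. The operation names, kernel names and cost formulas below are invented placeholders; the sketch does not reflect the actual APIs or IRs of TVM, Halide or any other framework.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Kernel:
    """One way of executing an IR operation (hypothetical)."""
    name: str
    cost: Callable[[int], float]  # estimated cycles for n elements


# Hypothetical back-end table: IR operation -> candidate implementations.
BACKEND: Dict[str, List[Kernel]] = {
    "matmul": [
        Kernel("cpu_matmul", lambda n: 8.0 * n),
        # Fixed launch overhead, but cheaper per element once running.
        Kernel("accel_matmul", lambda n: 500.0 + 0.5 * n),
    ],
    "relu": [Kernel("cpu_relu", lambda n: 1.0 * n)],
}


def lower(ir_ops: List[Tuple[str, int]]) -> List[str]:
    """Map each (operation, size) pair to the cheapest available kernel."""
    plan = []
    for op, n in ir_ops:
        candidates = BACKEND.get(op)
        if not candidates:
            raise NotImplementedError(f"no back-end mapping for {op!r}")
        plan.append(min(candidates, key=lambda k: k.cost(n)).name)
    return plan
```

With these placeholder costs, a small `matmul` stays on the processor while a large one is offloaded, which illustrates why an accurate cost model is as important as the mapping itself.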

It should be noted that the development of OpenCL 2.x is a cautionary tale of what to avoid when designing/implementing such a lower-level framework. Due to massive overengineering and design-by-committee, it carried so many mandatory features as baggage that most actual OpenCL implementations remained at the far more limited OpenCL 1.x level of capabilities. This unfortunate design direction, which held back the adoption of OpenCL as a portable programming abstraction for accelerators for many years, was only corrected with OpenCL 3.0. This version contains a tightly focused core of mandatory functionality (based on OpenCL 1.2) supported by optional features that allow tailoring to the specific application area and target accelerators.

From a research perspective, it would also be promising to study how automatic tools could help to bridge the gap between the domain-specific frameworks, e.g., at the IR level, and the concepts used at the accelerator hardware architecture levels. Here, technologies such as the Multi-Level IR (MLIR) proposed for integration into the open source Low Level Virtual Machine (LLVM32) compiler framework may be a suitable starting point for automation.

5.4.3 Peripherals and SoC Infrastructure

5.4.3.1 SoC Infrastructure

In addition to processor cores, it is also very important to have a complete infrastructure to make SoCs and be sure that all IPs are interoperable and well documented (industry grade IPs). This requires the necessary views of IPs (IDcard with maturity level, golden model, Register-Transfer Level (RTL), verification suite, integration manual, Design For Test (DFT) guidelines, drivers) which are necessary to convince people to use the IPs. As highlighted above, system-on-chip (SoC) templates are needed that provide all the required external (e.g., PCIe, network, memory) and internal interfaces and infrastructures (e.g., Arm AMBA AXI, Coherent Hub Interface (CHI), DRAM and interrupt controllers, etc.), and into which innovative new IPs could be easily integrated. High-speed lower-level physical interfaces (PHYs) to memories and network ports are designed at the analog level, and are thus tailored to a specific chip fabrication process. The technical details required to design hardware at this level are generally only available under very strict NDAs from the silicon foundries or their Process Design Kit (PDK) partners. Providing access to this information would involve inducing a major shift in the industry. As a compromise solution, though, innovation in open source hardware could profit immensely if these lower-level interface blocks could be made available in a low-cost manner, at least for selected chip fabrication processes supported by facilitators such as EUROPRACTICE for low-barrier prototyping (e.g., the miniASIC and MPW programs).

Recommendation - Blocks do not solely consist of (relatively portable) digital logic, but they also have analog components in their high-speed physical interfaces (PHY layer) that must be tailored to the specific ASIC fabrication process used. Such blocks should be provided for the most relevant of the currently EUROPRACTICE-supported processes for academic/SME prototype ASIC runs. The easy exchange of IP blocks between innovators is essential and should be supported by both the EDA companies and IC foundries. An exemplar is the EUROPRACTICE-enabled R&D structure of CERN, where different academic institutions collaborate under the same framework to exchange IP.

For open source hardware to succeed, standard interfaces such as DRAM controllers, Ethernet Media Access Controllers (MACs) and PCIe controllers need to be either available as open source itself, or at a very low cost at least for academic research and SME use. If they are not, then the innovation potential for Europe in both of these areas will often go to waste, because new ideas simply cannot be realized and evaluated beyond simulation in real demonstrators/prototypes. For a complete SoC infrastructure, it is necessary to have an agreed common interconnect at the:

- processor/cache/accelerator level (similar to the Arm CoreLink Interconnect, or the already existing interconnect specifications for RISC-V such as TileLink33): in the open source area, support for cache-coherency exists in the form of the TileLink protocol, for which an open source implementation is available from UC Berkeley. However, industry standard protocols such as AMBA ACE/CHI do not have open source implementations (even though their licenses permit it). This makes integration of existing IP blocks that use these standard interfaces with open source systems-on-chips difficult.

- memory hierarchy level, for example between cores for many-core architectures (NUMA effects) and between cores and accelerators,

- peripheral level (e.g., Arm’s AMBA set of protocols).

The communication requirements of the accelerators determine the specific flavor of interface used, which can range from APB for low-bandwidth interaction to AHB/AXI for higher-performance accelerators.

5.4.3.2 Networks on a Chip

Networks on a Chip (NoCs) and their associated routers are also important elements for interconnecting IPs in a scalable way. Depending on requirements, they can be synchronous, asynchronous (for better energy management) or even support 3D routing. Continuous innovations are still possible (and required) in these fields.

5.4.3.3 Verification and Metrics

IPs need to be delivered with a verification suite and maintained constantly to keep up with errata from the field. For an end-user of IP the availability of standardized metrics is crucial, as the application scenario may demand certain boundaries on power, performance, or area of the IP. This will require searches across different repositories with standardized metrics. One industry standard benchmark is from the Embedded Microprocessor Benchmark Consortium (EEMBC)34. However, the topic of metrics does not stop at the typical performance indicators. It is also crucial to assess the quality of the verification of the IP with some metrics. In a safety or security context, it is important for the end-user to assess the verification status in a standardized way in order to conclude what is still needed to meet required certifications. Using standardized metrics allows end users to pick the most suitable IP for their application and to get an idea of the additional effort needed for certification.
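The kind of search across repositories that standardized metrics would enable can be sketched as a simple filter over a catalogue of IP records. The record fields, units and function name below are hypothetical; no agreed schema for such metrics exists in this report, and the sketch only illustrates why comparable numbers for power, performance, area and verification quality are needed.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class IpMetrics:
    """Hypothetical standardized metrics record published with an IP block."""
    name: str
    power_mw: float            # power under an agreed reference workload
    perf_score: float          # score on an agreed benchmark
    area_kge: float            # area in kilo gate equivalents
    verif_coverage_pct: float  # one possible verification-quality metric


def select_ip(catalog: Iterable[IpMetrics], max_power_mw: float,
              min_perf_score: float, max_area_kge: float,
              min_coverage_pct: float = 0.0) -> List[IpMetrics]:
    """Return IPs that satisfy all bounds, best-performing first."""
    matches = [ip for ip in catalog
               if ip.power_mw <= max_power_mw
               and ip.perf_score >= min_perf_score
               and ip.area_kge <= max_area_kge
               and ip.verif_coverage_pct >= min_coverage_pct]
    return sorted(matches, key=lambda ip: ip.perf_score, reverse=True)
```

A safety-oriented user would tighten `min_coverage_pct`, while a cost-sensitive one would tighten `max_area_kge`; the same catalogue serves both only if every entry reports the same metrics in the same units.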

Another aspect is in providing trustworthy electronics. This is a continuous effort in R&D, deployment and operations, and along the supply chains. This starts with trustworthy design IPs developed according to auditable and certifiable development processes, which give high verification and certification assurance (safety and/or security) for these IPs. These design IPs including all artefacts (e.g., source code, documentation, verification suites) are made available ensuring integrity, authenticity and traceability using certificate-based technologies. Traceability along the supply chain of R&D processes is a foundation for later traceability of supply chains for components in manufacturing and deployment/operation.
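As a minimal illustration of the integrity part of this requirement, the sketch below records a SHA-256 digest for every artefact of an IP release and later reports any artefact that is missing or has changed. It deliberately covers integrity only: authenticity would additionally require the manifest itself to be signed with a certificate-backed key, which is outside the scope of this sketch. The function names and the example file names are assumptions made for illustration.

```python
import hashlib
from typing import Dict, List


def build_manifest(artifacts: Dict[str, bytes]) -> Dict[str, str]:
    """Record a SHA-256 digest for every artefact of an IP release."""
    return {name: hashlib.sha256(data).hexdigest()
            for name, data in artifacts.items()}


def find_tampered(artifacts: Dict[str, bytes],
                  manifest: Dict[str, str]) -> List[str]:
    """Return names that are missing or no longer match the manifest."""
    return sorted(
        name for name, digest in manifest.items()
        if name not in artifacts
        or hashlib.sha256(artifacts[name]).hexdigest() != digest
    )
```

For example, a release consisting of `core.sv` and `README` yields a two-entry manifest; if `core.sv` is later modified, `find_tampered` returns `["core.sv"]`.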

5.4.3.4 Chiplet and Interposer Approach

Another domain which is emerging and where Europe can differentiate itself is in using the 2.5D approach, or “chiplets + interposers”. This is already enabled by EUROPRACTICE for European academics and SMEs. The idea is to assemble functional circuit blocks (called chiplets, see https://en.wikichip.org/wiki/chiplet) with different functions (processor, accelerator, memories, interfaces, etc.) on an “interposer” to form a more complex System-on-Chip. The interposer technology physically hosts the chiplets and ensures their interconnection in a modular and scalable way, like discrete components on a Printed Circuit Board. This approach can range from low-cost systems (with organic interposers), to high-end silicon-based passive and active interposers, up to photonic interposers. In active interposers, the interposer also includes some active components that can help with interconnection (routers of a NoC), interfacing or powering (e.g., voltage converters).

The industry has started shifting to chiplet-based design whereby a single chip is broken down into multiple smaller chiplets and then “re-assembled” thanks to advanced packaging solutions. TSMC indicates that the use of chiplets will be one of the most important developments in the next 10 to 20 years. Chiplets are now used by Intel, AMD and Nvidia and the economics of this has already been proved by the success of the AMD chiplet-based Zen architecture. As shown by the International Roadmap for Devices and Systems (IRDS), chiplet-based design is considered a complementary route for More Moore scaling.

Chiplet-based design is an opportunity for the semiconductor industry, but it creates new technical challenges along the design value chain: architecture partitioning, chiplet-to-chiplet interconnect, testability, CAD tools and flows and advanced packaging technologies. None of the technical challenges are insurmountable, and most of them have already been overcome through the development and characterization of advanced demonstrators. They pave the way towards the “domain specific chiplet on active interposer” route for 2030 as predicted by the International Roadmap for Devices and Systems.

With chiplet-based design, the business model moves from a soft IP business to a physical IP business, one in which physical IP with new interfaces is delivered to a new actor who integrates it with other outsourced chiplets, tests the integration and sells the resulting system. According to Gartner, the chiplet market size (including the edge) will grow to $45B in 2025 and supporting chiplet-based design tools are available. A challenge is that the chiplet ecosystem has not yet arrived due to a lack of interoperability between chiplets, which makes chiplet reuse difficult. For instance, it is not possible to mix an AMD chiplet with a Xilinx one to build a reconfigurable multi-core SoC. Die-to-Die (D2D) communication is the “missing link” to leverage the chiplet-based design ecosystem, and its development in open source would enable a wide usage and could become a “de-facto” standard.

The Die-to-Die interface targets a high-bandwidth, low-latency, low-energy ultra-short-reach link between two dies. Different types of interfaces exist and the final choice for a system lies in the desire to optimize six often competing, but interrelated factors:

1. Cost of packaging solutions

2. Die area per unit bandwidth (square mm per Gigabits per second)

3. Power per bit

4. Scalability of bandwidth

5. Complexity of integration and use at the system level

6. Realizability in any semiconductor process node
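Two of the factors listed above are simple ratios and can be compared directly between candidate links. The following sketch shows the arithmetic; the function names are hypothetical and any numbers fed into them are the user's own, not measured values for a specific interface.

```python
def energy_per_bit_pj(power_mw: float, bandwidth_gbps: float) -> float:
    """Factor 3: power per bit.

    mW / (Gbit/s) = 1e-3 J/s / 1e9 bit/s = 1e-12 J/bit, i.e. pJ/bit.
    """
    return power_mw / bandwidth_gbps


def area_per_bandwidth(area_mm2: float, bandwidth_gbps: float) -> float:
    """Factor 2: die area per unit bandwidth, in mm^2 per Gbit/s."""
    return area_mm2 / bandwidth_gbps
```

As a worked example with placeholder figures, a link that consumes 500 mW while delivering 1000 Gbit/s spends 0.5 pJ per bit, and if it occupies 2 mm² of die area it costs 0.002 mm² per Gbit/s.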

The ideal solution is an interconnect technology that is infinitely scalable (at fine-grained resolution), low power, area-efficient, totally transparent to the programming model, and buildable in a low-cost silicon and packaging technology. There are two classes of technologies that service this space:

- Parallel interfaces: High-Bandwidth Interface (HBI), Advanced Interface Bus (AIB) and “Bunch of Wires” (BoW) interfaces. Parallel interfaces offer low power, low latency and high bandwidth, but at the cost of requiring many wires between dies. The wiring requirement can only be met using silicon interposer or bridging technology.

- Serial Interfaces: Ultra Short and eXtra Short Reach SerDes. Serial interfaces significantly reduce the total number of IOs required to communicate between semiconductor chips. They allow the organic substrate to provide the interconnection between dies and enable the use of mature System-in-Package technology.

One difficulty of the Die-to-Die approach is that no communication standard currently exists to ensure interoperability. In early 2020, the American Open Compute Project (OCP) initiative addressed the Die-to-Die standardization by launching the Open Domain Specific Architecture (ODSA) project that aims to bring more than 150 companies to collaborate on the definition of different communication standards suitable for inter-die communication.

Recommendation - The chiplet-based approach is a unique opportunity to leverage European technologies and foundries creating European HW accelerators and an interposer that could leverage European More-than-More technology developments. To achieve this, inter-operability brought by open source HW is key for the success, together with supporting tools for integration, test and validation.

The SoC infrastructure for “chiplet-based” components will require PHY and MAC layers of chiplet-to-chiplet interfaces based on standard and open source approaches. These interfaces could be adapted depending on their use: data for computing or control for security, power management, and configuration. This interposer + chiplet approach will leverage European technologies and even foundries, as the interposer does not need to be in the most advanced technology and could embed parts such as power converters or analog interfaces. The chiplets can use the most appropriate technology for each function (memory, advanced processing, support chiplets and interfaces). Interoperability, which could be brought by open source HW, is key for success, together with supporting tools for integration, test and validation.

Regarding the connection of SoCs to external devices, some serial interfaces are quite mature in the microcontroller world and there is little differentiation between vendors in the market. It would make sense to align on standard implementations and a defined set of features that can be used by different parties. Open source standard implementations could contribute to the distribution of standards. However, there are also domain-specific adaptations that require special features, which would make it hard to manage different implementations.

The software infrastructure necessary for a successful hardware ecosystem contains Virtual Prototypes (Instruction Accurate and Clock Accurate simulators), compilers and linkers, debuggers, programmers, integrated development environments, operating systems, software development kits and board support packages, artificial intelligence frameworks and more. Indeed, the idea of open source originates from the software world and there are already established and futureproof software projects targeting embedded systems and hardware development such as LLVM, GDB, OpenOCD, Eclipse and Zephyr. The interoperability and exchangeability between the different parts of the SW infrastructure are important fundamentals of the ecosystem.

5.5.1 Virtual Prototypes

Virtual Prototypes (VP) play a major role in different phases of the IP development and require different types of abstraction. They can range from cycle accurate models which are useful for timing and performance estimations, to instruction accurate models, applicable for software development, design-space exploration, and multi-node simulation. Independent of the VP abstraction level, these platforms should strive towards modelling the IP to be functionally as close to real hardware as possible, allowing users to test the same code they would put on the final product.
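An instruction accurate model in its simplest form is a loop that fetches, decodes and executes one instruction at a time without modelling any timing. The sketch below is a deliberately tiny, assumption-laden example: it supports only the RV32I `ADDI` and `ADD` instructions, has no memory or control flow, and the class name `TinyRv32` is invented for illustration. It nevertheless executes the same binary encodings that real hardware would.

```python
def sign_extend(value: int, bits: int) -> int:
    """Interpret the low `bits` bits of value as a two's complement number."""
    mask = 1 << (bits - 1)
    return (value ^ mask) - mask


class TinyRv32:
    """Instruction accurate model of a two-instruction RV32I subset."""

    def __init__(self, program):
        self.regs = [0] * 32
        self.pc = 0
        self.program = list(program)  # 32-bit instruction words

    def step(self) -> None:
        word = self.program[self.pc // 4]
        opcode = word & 0x7F
        rd = (word >> 7) & 0x1F
        funct3 = (word >> 12) & 0x7
        rs1 = (word >> 15) & 0x1F
        rs2 = (word >> 20) & 0x1F
        funct7 = (word >> 25) & 0x7F
        if opcode == 0x13 and funct3 == 0:                    # ADDI
            result = self.regs[rs1] + sign_extend(word >> 20, 12)
        elif opcode == 0x33 and funct3 == 0 and funct7 == 0:  # ADD
            result = self.regs[rs1] + self.regs[rs2]
        else:
            raise NotImplementedError(f"unsupported instruction {word:#010x}")
        if rd != 0:  # x0 is hard-wired to zero
            self.regs[rd] = result & 0xFFFFFFFF
        self.pc += 4

    def run(self) -> None:
        while self.pc // 4 < len(self.program):
            self.step()
```

Running the three words `0x00500093` (`addi x1, x0, 5`), `0x00700113` (`addi x2, x0, 7`) and `0x002081B3` (`add x3, x1, x2`) leaves the value 12 in register `x3`. A cycle accurate model would add pipeline and memory timing on top of the same functional behaviour.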

As modeling IP is usually a task less complex than taping out new hardware revisions, VPs can be effectively used for pre-silicon development. Modeling can be either done in an abstract way, or using RTL, or by mixing these two approaches in a co-simulated environment. VPs can bring benefits not only to hardware manufacturers that want to provide software support for their customers but also to customers in that they can reuse the same solutions to develop end products. Having models for corresponding open source IP could be beneficial for establishing such IP. With this in mind it would be reasonable to provide a permissively licensed solution, allowing vendors to close their non-public models.

5.5.2 Compilers and Dynamic Analysis Tools

Compilers significantly influence the performance of applications. Important open source compiler projects are LLVM (Low Level Virtual Machine) and GCC (GNU Compiler Collection). The LLVM framework is evolving to become the ‘de facto’ standard for compilers. It provides a modular architecture and is therefore a future-proof solution compared to the more monolithic GCC.

MLIR (Multi-Level Intermediate Representation) is a novel approach from the LLVM framework to building a reusable and extensible compiler infrastructure. MLIR aims to address software fragmentation, improve compilation for heterogeneous hardware, significantly reduce the cost of building domain specific compilers, and aid in connecting existing compilers together. Such flexibility on the compiler side is key to providing proper software support for the novel heterogeneous architectures made possible by the flexibility and openness provided by RISC-V. Note that both LLVM and GCC include extensions such as AddressSanitizer or ThreadSanitizer that help developers to improve code quality.

In addition, there are various separate tools such as Valgrind35 and DynamoRIO36 that strongly depend on the processor’s instruction set architecture. Valgrind is an instrumentation framework for building dynamic analysis tools to detect things like memory management and threading bugs. Similarly, DynamoRIO is a runtime code manipulation system that supports code transformations on any part of a program while it executes. Typical use cases include program analysis and understanding, profiling, instrumentation, optimization, translation, etc. For wide-spread acceptance of RISC-V in embedded systems, it is essential that such tools include support for RISC-V.

Similar to hardware components, for safety-critical applications compilers must be qualified regarding functional safety standards. Here, the same challenges and requirements exist as for hardware components. For this reason, today mainly commercial compilers are used for safety-critical applications. These compilers are mostly closed source.

5.5.3 Debuggers

In order to efficiently analyze and debug code, debuggers are needed that are interoperable with chosen processor architectures as well as with custom extensions. Furthermore, debuggers should use standard open source interface protocols such as GDB (GNU Project Debugger) so that different targets such as silicon, FPGAs or Virtual Prototypes can be seamlessly connected.
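One reason a common protocol allows silicon, FPGAs and Virtual Prototypes to be connected interchangeably is that the wire format is simple enough for any target to implement. In the GDB Remote Serial Protocol a packet has the form `$<payload>#<checksum>`, where the checksum is the modulo-256 sum of the payload bytes written as two hexadecimal digits. The sketch below shows only this basic framing; escaping of special characters, run-length encoding and acknowledgements are omitted, and the function names are hypothetical.

```python
def rsp_checksum(payload: bytes) -> int:
    """Modulo-256 sum of all payload bytes."""
    return sum(payload) % 256


def rsp_frame(payload: str) -> bytes:
    """Wrap a command or reply in $...#xx framing."""
    data = payload.encode("ascii")
    return b"$" + data + b"#" + f"{rsp_checksum(data):02x}".encode("ascii")


def rsp_unframe(packet: bytes) -> str:
    """Check framing and checksum, then return the payload."""
    if len(packet) < 4 or packet[:1] != b"$" or packet[-3:-2] != b"#":
        raise ValueError("malformed packet")
    data = packet[1:-3]
    received = int(packet[-2:].decode("ascii"), 16)
    if rsp_checksum(data) != received:
        raise ValueError("checksum mismatch")
    return data.decode("ascii")
```

For example, the single-character "read general registers" request `g` is framed as `$g#67`, since the ASCII code of `g` is 0x67.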

5.5.4 Operating Systems

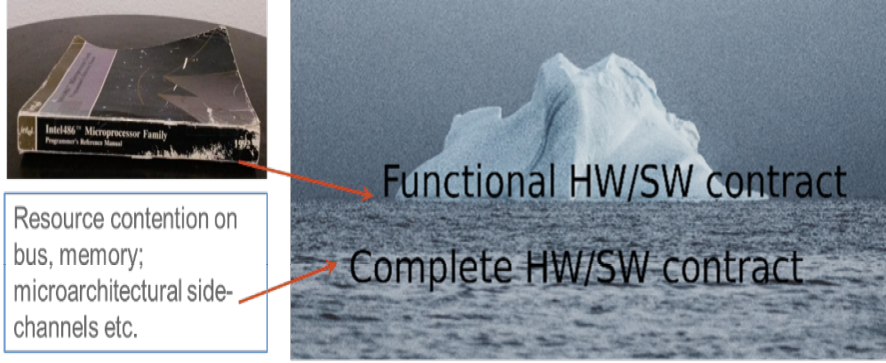

Chip design consists of many trade-offs, and these trade-offs are best validated early by exercising the design with system software. For instance, when providing separated execution environments, it is a good idea to validate early that all relevant shared resources are separated efficiently. While this should be easy in principle, it can be surprisingly difficult, as highlighted by the Spectre/Meltdown vulnerabilities.

5.5.5 Real Time Operating System (RTOS)

Most of the common RTOS have already been ported to RISC-V (https://github.com/riscvarchive/riscv-software-list#real-time-operating-systems), including the most popular open source options such as FreeRTOS and Zephyr RTOS. Even Arm’s mbed has been ported to one RISC-V platform (https://github.com/GreenWaves-Technologies/mbed-gapuino-sensorboard), though mainline/wide support will most likely not happen. One of the key aspects of the OS is application portability. This can be achieved by implementing standard interfaces like POSIX which does not lock the software into a certain OS/Vendor ecosystem. There are many RTOS, but two key examples of relevance are:

Zephyr RTOS - Zephyr has been aligning with RISC-V as a default open source RTOS option. Currently RISC-V International itself is a member of the Zephyr project, along with NXP and open hardware providers Antmicro and SiFive. Zephyr is a modular, scalable RTOS, embracing not only open tooling and libraries, but also an open and transparent style of governance. One of the project’s ambitions is to have parts of the system certified as secure (details of the certification process and scheme are not yet established). It is also easy to use, providing its own SDK based on GCC and POSIX support. The RISC-V port of Zephyr covers a range of CPUs and boards, both from the ASIC and FPGA worlds, along with 32 and 64-bit implementations. The port is supported by many entities including Antmicro in Europe.

Tock - Tock provides support for RISC-V. Implemented in Rust, it is especially interesting as it is designed with security in mind, providing language-based isolation of processes and modularity. One of the notable build targets of Tock is OpenTitan. Tock relies on an LLVM-based Rust toolchain.

5.5.6 Hypervisor

A hypervisor provides virtual platform(s) to one or more guest environments. These can range from bare-bone applications up to full guest operating systems. Hypervisors can be used to ensure strong separation between different guest environments for mixed criticality; such platforms have also been called Multiple Independent Levels of Safety and Security (MILS) or a separation kernel37. When a guest is a full operating system, then that guest already uses different privilege modes (such as user mode and supervisor mode). The hypervisor either modifies the guest operating system (paravirtualization) or provides full virtualization in “hypervisor” mode. RISC-V is working on extensions for hypervisor mode, although these are not yet ratified. Some hypervisors running on RISC-V are listed at https://github.com/riscvarchive/riscv-software-list#hypervisors-and-related-tools.

At the moment, a number of hypervisors for critical embedded systems exist, provided by non-European companies such as Data61/General Dynamics, Green Hills, QNX and Wind River, and in Europe by fentISS, Hensoldt, Kernkonzept, Prove & Run, Siemens and SYSGO. Many of these are being ported or could be ported to RISC-V. A weakness is that these hypervisors usually have to assume hardware correctness. An open RISC-V platform would offer the opportunity to build assurance arguments that cover the entire hardware/software stack. Any new European RISC-V platform should be accompanied by a strong ecosystem of such hypervisors.

Recommendation - If publicly funded research in the open hardware domain makes some core results available, such as the software/hardware primitives used (for example, HDL designs and the assembly sequences using them), under permissive or weakly-reciprocal licenses, then these results can be used by both open source and closed source systems.

In the field of system software such as RTOS/hypervisors, currently there are several products which are closed source and have undergone certifications for safety (e.g., IEC 61508, ISO 26262, DO-178) and security (e.g., Common Criteria), and others which have not undergone certification and are open source. The existence of value chains as closed products on top of an open source ecosystem can be beneficial for the acceptance of the open source ecosystem and is common to many ecosystems. An example is the Linux ecosystem which is used for all kinds of closed source software as well.

5.5.7 NextGen OS for Large Scale Heterogeneous Machines

To address the slowdown of Moore’s law, current large-scale machines (e.g., cloud or HPC) aggregate thousands of clusters of dozens of cores each (scale-out). The openness of the RISC-V architecture provides multiple grades of heterogeneity, from specialized accelerators to dedicated ISA extensions, and several opportunities for scalability (e.g., large-scale cache coherency, chiplets, interconnects, etc.) to continue this trend (scale-in). However, manually managing the hardware-induced heterogeneity in the application software is complex and not maintainable.

Recommendation - There is a need for research that exploits the flexibility of RISC-V to revisit how hardware and operating systems are designed together, in order to better hide the heterogeneity of large machines from users, taking into account potentially disruptive evolutions (non-volatile memory changing the memory hierarchy, direct object addressing instead of file systems, Data Processing Units to offload data management from processors).

5.5.8 Electronic Design Automation (EDA) Tools

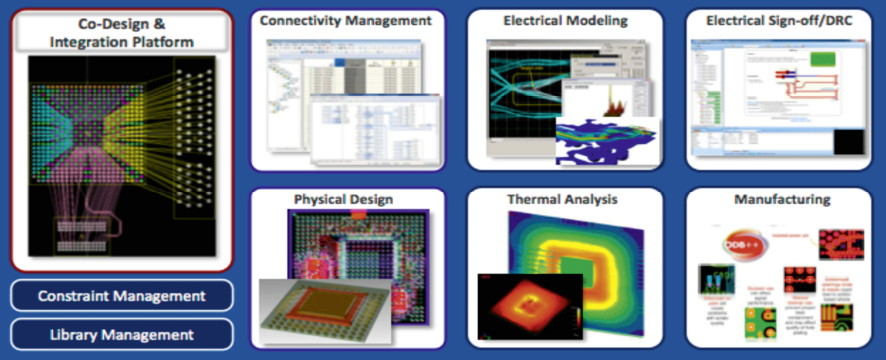

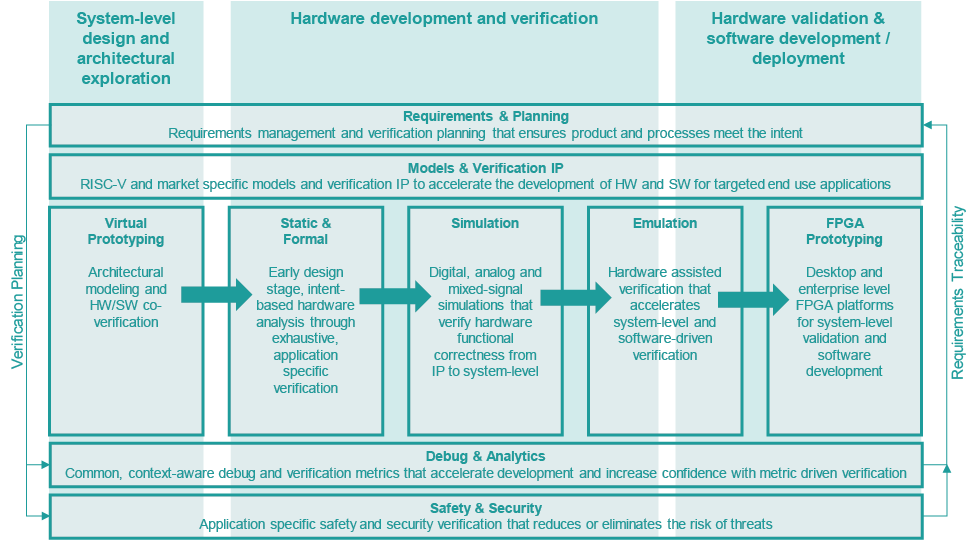

Implementing a modern design flow requires a significant number of EDA tools, as shown in Figure 5.5. See also38, which states: “With billions of transistors in a single chip, state-of-the-art EDA tools are indispensable to design competitive modern semiconductors”.

Traditionally, EDA has been dominated by mostly US-based closed source commercial vendors. With projects like Verilator, open source activities for specific parts of the design flow have also started to gain traction. However, open source support is still far from allowing a competitive, fully open source design flow, especially when targeting digital design in advanced technologies. The existing commercial tools and the upcoming open source ones will therefore need to co-exist for many years to come.

Open source tools are essential for introducing new companies and more developers into the field, especially developers with a software background who can bring innovation to hardware-software co-design. Software developers typically no longer need to license their daily tools and can freely work together across teams and organizations using massive collaboration hubs such as GitHub or GitLab. These benefits and capabilities need to be enabled via open source tooling for the sector to keep up with the demand for talent and innovation. A vital EDA community already exists in Europe, with companies focusing on point solutions within the broader semiconductor flow. Significant investment in open source tooling as well as cross-region collaboration is needed to energize the sector. Contrary to common belief, the current EDA giants stand to benefit significantly from open source tooling investment, as there will be a continued need for large, experienced players, while open source solutions will enable new use cases and provide improvements in existing flows. New business opportunities will be created for the existing EDA players by incorporating new open source developments. The recent acquisition of Mentor (one of the three leading EDA companies) by Siemens means that it joins the European EDA community and can collaborate with the local ecosystem to support European sovereignty. The top EDA companies each spend more than USD 1 billion annually on R&D to continue innovating, so meaningful progress in the open source space requires continued investment from the public sector and cross-border collaboration to bridge the gap. Open source EDA should be a long-term goal in order to further the European sovereignty objectives, but existing European proprietary EDA will need to be used where necessary in the short to mid-term because of the significant investment that would be required to create a competitive, full-flow open source EDA solution.

Recommendation - Current proprietary EDA tools can help open source design IP development get off the ground if they are available at sufficiently low cost and provided the tool licensing does not restrict the permissive open source usage of the developed design IPs, but a sustained and long term investment into open source tooling is needed to build a sustainable ecosystem.

Large semiconductor and system companies use their pool of proprietary EDA licenses to design, verify and get their open source-based designs ready for manufacturing with the expected productivity and yield. However, they may also be interested in introducing software-driven innovations into parts of their flows and can benefit greatly from the economies of scale of open source, enabling large teams to use the same tools free of charge. Smaller companies and research organizations need access to a comparable level of professional EDA tools, which the large EDA vendors will most likely provide through a variety of business models (cloud offerings, specific terms for start-ups, research licenses, Software as a Service (SaaS), etc.). The EC is continuing to invest in building a European open source EDA tooling ecosystem, encouraging open interchange formats and making sure that current tools do not restrict their use on open source hardware designs, regardless of the open source hardware license used.

Considering the safety requirements of some of the IPs under discussion here, open source offers a unique possibility to create transparent, auditable processes for ensuring safety. Much like in the case of open source software, common procedures and the pooling of efforts through oversight bodies (such as the Zephyr RTOS or RISC-V Safety Committees) can be used to provide safety certificates or packages, taking part of the burden of safety compliance off the shoulders of developers.

The key to enabling open source tooling in the EDA space (which will most likely also benefit existing, proprietary vendors) is in enabling specific components of the proprietary flows to be replaced by open source alternatives which can introduce point innovations and savings for the users. This should be encouraged by focusing part of the EU investment on interoperability standards which could allow the mixing of open and closed ASIC tools, much like the FPGA Interchange Format driven by CHIPS Alliance is doing for FPGAs. RISC-V is another example in the hardware space of how closed and open source building blocks can coexist in the same space and reinforce each other as long as there are common standards to adhere to. EDA tools are exploited in various parts of the development chain including lifecycle management, architecture exploration, design and implementation as well as verification and validation.

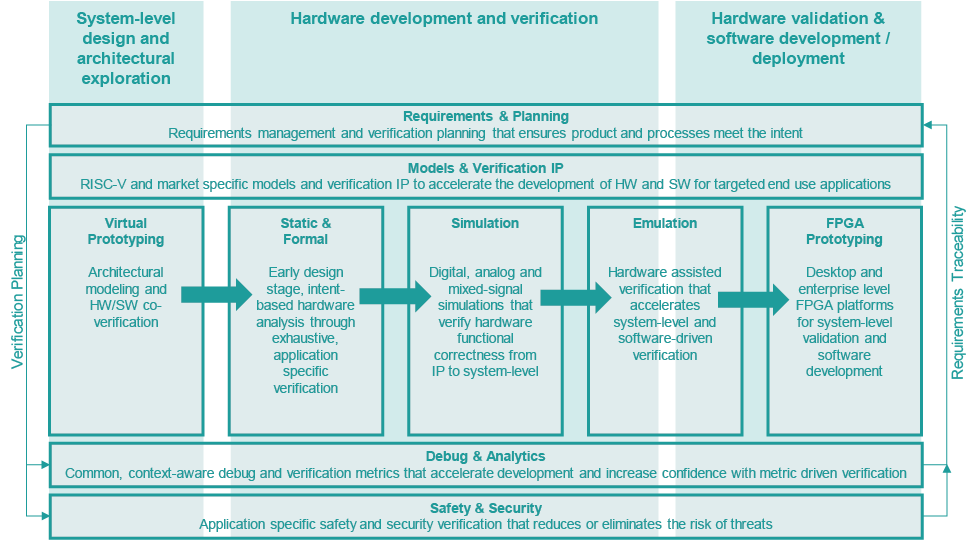

5.5.9 Lifecycle Management

A state-of-the-art development process comprising continuous integration and continuous delivery/deployment is at the core of typical projects. A key aspect is requirements traceability, both at the core level and up to the system level, to ensure that the verification of the requirements can be demonstrated. This is crucial for safety certification (e.g., ISO 26262), where an IP can be used as a safety element by rigorously specifying the requirements and then ensuring that they are satisfied during integration. Another useful data point for users deciding whether to trust a particular configuration of an IP is the "proven in use" argument. This necessitates collecting data on which configurations of the IP have been taped out in given projects, together with the related errata from the field. Here open source hardware provides the opportunity to propose better traceability metrics and methods than proprietary counterparts. Complete designs can be shared, and even manufacturing information on proper processes and good practices, as well as lifecycle management data for open source hardware. This does not preclude the “out of spec” use of open source hardware in other domains such as low-criticality applications and education.

EDA tooling needs to support tracing the standardized verification metrics from IP level to system level. To successfully span the hierarchies from core to system level, contract-based design is crucial, allowing interface contracts to be shared along the supply chain. This needs language and tooling support to make it accessible to architects and designers. Open source toolchains, IPs and verification suites allow continuous integration/continuous deployment systems to be scaled out across server infrastructure, reducing build and test time. Proprietary solutions often require dedicated licensing infrastructure, effectively preventing them from being used in scalable, distributed testing infrastructure. Different licensing and/or pricing of proprietary EDA tools for open source development may help to leverage the existing technology at an early stage of open source development, before open source alternatives are readily available.
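As an illustration of what a shared interface contract could look like, the following is a minimal sketch in Python. It assumes a very simple contract form in which a provider guarantees a numeric range per property and a consumer assumes a range per property. The class `InterfaceContract`, the function `violated_assumptions` and the property names are hypothetical and are not taken from any existing tool or standard.

```python
from dataclasses import dataclass, field


@dataclass
class InterfaceContract:
    """Hypothetical interface contract exchanged along the supply chain.

    Each property maps to an inclusive (minimum, maximum) range.
    """
    component: str
    guarantees: dict = field(default_factory=dict)  # what the component promises
    assumes: dict = field(default_factory=dict)     # what it requires from others


def violated_assumptions(provider, consumer):
    """Return the consumer assumptions that the provider does not satisfy.

    An assumption is satisfied when the provider guarantees the property and
    the guaranteed range lies entirely inside the assumed range.
    """
    violations = []
    for prop, (lo, hi) in sorted(consumer.assumes.items()):
        promised = provider.guarantees.get(prop)
        if promised is None or promised[0] < lo or promised[1] > hi:
            violations.append(prop)
    return violations


# Illustrative values only (assumptions, not measured data).
core = InterfaceContract(
    "core_ip",
    guarantees={"irq_latency_cycles": (0, 20), "bus_width_bits": (64, 64)},
)
slow_core = InterfaceContract(
    "slow_core_ip",
    guarantees={"irq_latency_cycles": (0, 48), "bus_width_bits": (64, 64)},
)
soc = InterfaceContract(
    "soc_integration",
    assumes={"irq_latency_cycles": (0, 32), "bus_width_bits": (64, 64)},
)

print(violated_assumptions(core, soc))
print(violated_assumptions(slow_core, soc))
```

A check of this kind could run in a continuous integration pipeline each time an IP or its contract changes, so that a violated assumption is reported at integration time rather than discovered at system level.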

5.5.10 Architecture Exploration

It is crucial to make the right architecture choices for the concrete application requirements before committing to a particular implementation. This requires tool support to profile the application at a high level of abstraction before software is developed. Usually, hardware is first modeled as a set of parameterized, self-contained abstractions of CPU cores. Additional parameterized hardware components can then be added, and software can be modeled at a very abstract level, e.g., as a task graph and memory that are mapped to hardware resources such as processing units. Simulating executions on the resulting model allows analysis of the required parameters for the hardware components. This can include first estimates of power, performance and area for given parameter sets, as well as first assessments of safety and security. The derived parameter sets of the abstract hardware models can then be used to query existing IP databases with the standardized metrics to identify matching candidates that meet the target system's goals.
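The mapping of an abstract task graph onto parameterized processing units can be sketched in a few lines of Python. This is only an illustrative model under strong assumptions: each task is a fixed number of abstract operations, each processing unit is described by two hypothetical parameters (`ops_per_cycle` and `freq_mhz`), the mapping is static, and memory and communication costs are ignored. All names and numbers are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessingUnit:
    name: str
    ops_per_cycle: float  # hypothetical throughput parameter
    freq_mhz: float       # clock frequency in MHz (cycles per microsecond)


@dataclass(frozen=True)
class Task:
    name: str
    ops: float        # abstract amount of work, in operations
    deps: tuple = ()  # names of tasks that must finish first


def simulate(tasks, units, mapping):
    """Return the finish time (in microseconds) of every task.

    `tasks` must be listed in topological order; `mapping` assigns each
    task name to the name of the unit that executes it.
    """
    unit_by_name = {u.name: u for u in units}
    unit_free_at = {u.name: 0.0 for u in units}
    finish = {}
    for task in tasks:
        unit = unit_by_name[mapping[task.name]]
        ready = max((finish[d] for d in task.deps), default=0.0)
        start = max(ready, unit_free_at[unit.name])
        duration = task.ops / (unit.ops_per_cycle * unit.freq_mhz)
        finish[task.name] = start + duration
        unit_free_at[unit.name] = finish[task.name]
    return finish


def makespan(tasks, units, mapping):
    """Total execution time of the task graph under the given mapping."""
    return max(simulate(tasks, units, mapping).values())


TASKS = [
    Task("sense", 1000),
    Task("filter", 4000, deps=("sense",)),
    Task("classify", 8000, deps=("filter",)),
    Task("log", 2000, deps=("sense",)),
]
UNITS = [ProcessingUnit("cpu0", 1.0, 100.0), ProcessingUnit("acc0", 4.0, 100.0)]

ALL_ON_CPU = {t.name: "cpu0" for t in TASKS}
WITH_ACCELERATOR = {**ALL_ON_CPU, "classify": "acc0"}

print(makespan(TASKS, UNITS, ALL_ON_CPU))        # 150.0 microseconds
print(makespan(TASKS, UNITS, WITH_ACCELERATOR))  # 70.0 microseconds
```

Sweeping parameters such as `ops_per_cycle` over a range and keeping the smallest value that meets a deadline gives the kind of derived parameter set that could then be matched against an IP database.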

The profiling can provide power, performance and area requirements for instructions and suggest potential ways of partitioning functionality between accelerators and ISA instructions, which can then be explored to meet these requirements. Ideally, a differentiated power analysis can already be started at this level, giving a rough split between different components such as compute, storage, and communication. This can then be refined as more detail becomes available.

A widely used methodology is Model-Based System Engineering (MBSE), which focuses on using modeling from requirements through analysis, design, and verification. For the application of processor cores, this would mean the use of Domain Specific Languages (DSLs) down to the ISA level to capture the hardware/software breakdown. The breakdown would then be profiled before starting implementation of any design or custom instructions on top of a core. The modeling should lend itself to use in High-Level Synthesis (HLS) flows.

Recently, thanks to the popularization of open source hardware (which pushes more software engineers into the hardware industry) as well as a fast-moving landscape of modern AI software which in turn requires new hardware approaches, more software-driven architecture exploration methods are being pursued. For example, the Custom Function Unit (CFU) group within RISC-V International is exploring various acceleration methods and tradeoffs between hardware and software. Google and Antmicro are developing a project called CFU Playground39 which allows users to explore various co-simulation strategies tightly integrated with the TensorFlow Lite ML framework to prototype new Machine Learning accelerators using Renode and Verilator.

5.5.11 Design & Implementation

Architecture exploration leads to a generic parameterized model. This requires a modeling language that offers sufficient expressiveness and ease of use for wide acceptance. A challenge is that engineers engaged in the architecture exploration process typically do not come from a hardware background and are unfamiliar with SystemC or SystemVerilog, the established hardware languages. Modern programming languages like Python or Scala are thus gaining traction as they are more widely understood. An architecture exploration process requires the availability of models, an easy way to build a virtual platform, and the ability to replace models with implementations as soon as they become available. This procedure enables early HW/SW co-design in a seamless and consistent manner.

A traditional Register-Transfer Level (RTL) development process manually derives RTL from a specification document. To address the large scope of parameterized IPs targeted in this initiative, more automation is required. The parameterized Instruction Set Architecture (ISA) models for processors lend themselves to a high-level synthesis (HLS) flow in which users do not need detailed pipeline expertise unless they require the highest performance. This is crucial for enabling a wider audience to design and verify processors. The design process thus lends itself to a high degree of automation, namely offering some form of HLS starting from the parameterized models. For example, synthesizing the instruction behavior from an ISA model, and thus generating the RTL, is feasible for a certain class of processors. The design process should also generate system documentation in terms of:

- System memory map

- Register files of each component

- Top level block diagram

- Interconnection description

The documentation needs to be created in both human-readable and machine-readable forms, allowing manual and automated verification.
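As a minimal sketch of this idea, the Python example below generates a system memory map from a single description in two forms: JSON for automated checks and a text table for manual review. The region names, addresses and helper functions (`Region`, `check_no_overlap`, `to_json`, `to_table`) are hypothetical and chosen only for illustration. A real flow would derive the regions from the design database.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Region:
    name: str
    base: int  # first byte address of the region
    size: int  # size in bytes

    @property
    def end(self):
        """First address after the region."""
        return self.base + self.size


def check_no_overlap(regions):
    """Automated verification step: return regions sorted by base address,
    raising ValueError if any two regions overlap."""
    ordered = sorted(regions, key=lambda r: r.base)
    for lower, upper in zip(ordered, ordered[1:]):
        if lower.end > upper.base:
            raise ValueError(f"{lower.name} overlaps {upper.name}")
    return ordered


def to_json(regions):
    """Machine-readable form of the memory map."""
    return json.dumps([asdict(r) for r in check_no_overlap(regions)], indent=2)


def to_table(regions):
    """Human-readable form of the memory map."""
    rows = ["| Region | Base | End | Size (bytes) |", "| --- | --- | --- | --- |"]
    for r in check_no_overlap(regions):
        rows.append(f"| {r.name} | 0x{r.base:08X} | 0x{r.end - 1:08X} | {r.size} |")
    return "\n".join(rows)


# Illustrative memory map (assumed values, not from any real SoC).
REGIONS = [
    Region("rom", 0x0000_0000, 0x1_0000),
    Region("sram", 0x8000_0000, 0x2_0000),
    Region("uart0", 0x1000_0000, 0x1000),
]

print(to_table(REGIONS))
print(to_json(REGIONS))
```

Because both outputs come from the same source description, the table read by reviewers and the file consumed by verification tools cannot drift apart.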