2 CROSS-SECTIONAL TECHNOLOGIES

2.4

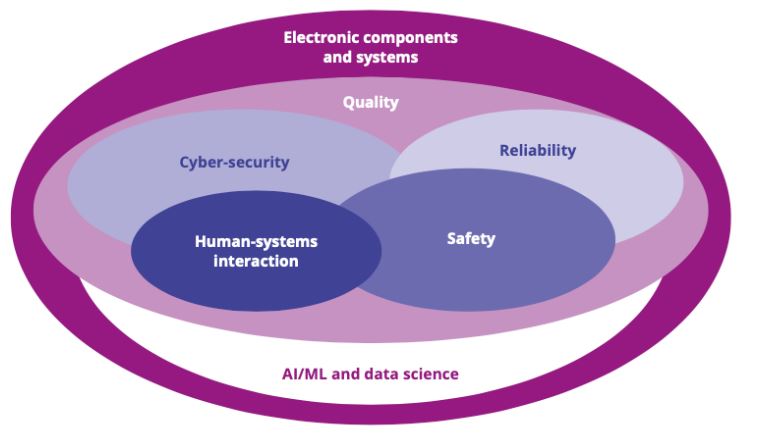

Quality, Reliability, Safety and Cyber-Security

Looking ahead to 2026 and beyond, the concurrent demand for increased functionality, uninterrupted availability of service and continuous miniaturisation of electronic components and systems (ECS) confronts the scientific and industrial communities in Europe with many difficult challenges.

A key issue is how to enable the massive increase in computational power and the reduction in communication latency of ECS, with their hybrid and distributed architectures, without losing crucial properties such as quality, reliability, safety or cybersecurity. We also need to understand what would happen if any of these properties degraded, or if they were not correctly integrated into a critical product. In addition, what role will artificial intelligence (AI) play in addressing these challenges? Are the EU Artificial Intelligence Act (AI Act) and the IEC standards on AI sufficient to ensure the safety and cybersecurity of AI-driven applications?

All these problems form the core background to this chapter, which investigates the quality, reliability, safety, and cybersecurity of ECS by highlighting the required industrial research directions via five major challenges.

Related chapters. Techniques described in Chapter 2.3 “Architecture and Design: Method and Tools” are complementary to the techniques presented here: in that chapter, corresponding challenges are described from the design process viewpoint, whereas here we focus on a detailed description of the challenges concerning reliability, safety, and cybersecurity within the levels of the design hierarchy.

With increasing dependence on different manufacturing nodes, accelerated by advanced packaging, heterogeneous integration and the integration of both AI-generated and human-written software, verifying the authenticity of incoming components (HW and SW) is crucial. Numerous reports show that counterfeits are making their way into production, but even more alarming is the risk of tampered devices that may give foreign powers back-door entry into critical systems. This is especially important for critical applications in defence, automotive, communications, etc. Here, the importance of being able to identify counterfeit or altered semiconductor components and electronics cannot be overestimated, particularly for sub-components manufactured outside the EU. The right tools for accurate verification ensure that components can be used safely in sensitive or critical environments, that devices maintain optimum quality and reliability, and that no tampering has occurred that could open a back door into critical systems.

Five Major Challenges have been identified:

- Major Challenge 1: Ensuring HW quality and reliability

- Major Challenge 2: Ensuring dependability in connected software

- Major Challenge 3: Ensuring cybersecurity and privacy

- Major Challenge 4: Ensuring of safety and resilience

- Major Challenge 5: Human systems integration

2.4.2.1 Major Challenge 1: Ensuring HW quality and reliability

With the ever-increasing complexity of electronics and the demand for higher functionality, combined with the need to cut costs, lower power consumption and further miniaturise integration, hardware development can no longer be decoupled from software development. Specifically, when assuring reliability, separate hardware development and testing according to the second-generation reliability methodology (design for reliability, DfR) is not sufficient to ensure the reliable functioning of ECS. A third-generation reliability methodology must therefore be introduced, particularly for the smart electronic systems used in future highly automated and autonomous systems. This new generation of reliability assessment will introduce in situ monitoring of the state of health at both the local level (e.g. IC packaging) and the system level. Hybrid prognostics and health management (PHM) supported by AI is the key methodology here, and this marks the main difference between the second and third generations. DfR concerns the total lifetime of a full population of systems under anticipated service conditions and its statistical characterisation. PHM, on the other hand, considers the degradation of the individual system under its actual service conditions and estimates its specific remaining useful life (RUL).
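
The distinction between the two generations can be made concrete with a short Python sketch. The first function gives a DfR-style population statistic: the probability that a unit survives to time t under an assumed two-parameter Weibull lifetime distribution. The second gives a PHM-style estimate for one individual unit by fitting a linear trend to its monitored health indicator and extrapolating it to a failure threshold. The Weibull model, the linear degradation trend, the function names and the threshold are illustrative assumptions; practical PHM combines physics-of-failure and data-driven models and reports uncertainty bounds on the RUL.

```python
import math
from typing import Sequence


def weibull_reliability(t: float, beta: float, eta: float) -> float:
    """DfR view: probability that a unit drawn from the population survives to
    time t, assuming a two-parameter Weibull lifetime (shape beta, scale eta)."""
    return math.exp(-((t / eta) ** beta))


def estimate_rul(times: Sequence[float], health: Sequence[float], threshold: float) -> float:
    """PHM view: remaining useful life of ONE monitored unit.

    Fits a least-squares line to the unit's health indicator (assumed to grow
    with degradation, times in ascending order) and extrapolates it to the
    failure threshold.
    """
    n = len(times)
    mean_t = sum(times) / n
    mean_h = sum(health) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("need monitoring points at two or more distinct times")
    sxy = sum((t - mean_t) * (h - mean_h) for t, h in zip(times, health))
    slope = sxy / sxx
    intercept = mean_h - slope * mean_t
    if slope <= 0:
        return math.inf  # no degradation trend observed yet
    t_fail = (threshold - intercept) / slope  # time at which the trend reaches the threshold
    return max(t_fail - times[-1], 0.0)


# Hypothetical monitoring data: drift of a health indicator over operating hours
print(weibull_reliability(1000.0, beta=2.0, eta=1000.0))  # population survival at t = eta
print(estimate_rul([0, 100, 200, 300], [0.0, 0.5, 1.0, 1.5], threshold=2.0))
```

A real PHM system would update the estimate each time new monitoring data arrive, which is precisely the per-unit view that population-level DfR statistics cannot provide.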

Since embedded systems control so many processes, the increased complexity by itself is a reliability challenge. Growing complexity makes it more difficult to foresee all dependencies during design. It is impossible to test all variations, and user interfaces need greater scrutiny since they have to handle such complexity without confusing the user or generating uncertainties.

The trend towards interconnected, highly automated and autonomous systems will change the way we own products: instead of buying commodity products, we will purchase personalised services. Availability will therefore become the major feature of ECS. The vision of Major challenge 1 is to provide the requisite tools and methods for novel ECS solutions to meet ever-increasing product requirements and to ensure the availability of ECS during use in the field. Both the continuous improvement of existing methods (e.g. DfR) and the development of new techniques (PHM) will be the cornerstone of future developments in ECS. Major challenge 1 will focus on the following topics.

2.4.2.1.3.1 Quality: In situ and real-time assessments

- Early defect and process control based on inline time-dependent defect and digital twin monitoring.

- Implementation of inline monitoring technologies to control variability and reduce uncertainty, with special attention to the interplay between process, reliability and functional aspects.

- Automated inline monitoring (e.g. time-dependent defect and digital twin monitoring) and optimisation systems that maintain target performances at the process and product levels for known failure mechanisms. This includes in-line monitoring and fail-detection using model-based and AI-based signal processing systems, along with on-line algorithms to evaluate spatiotemporal trends.

- Construction and smart upscaling of digital twins using surrogate modelling (metamodels), connecting basic digital twins with knowledge of failure mechanisms to achieve in-line monitoring.

- Advanced inline monitoring of advanced packaging solutions (2.5D and 3D) with high density interconnects (such as bump and hybrid bonding).

- Methods to identify process changes, and decision-support and automated control mechanisms able to counteract them.

- Assessment of AI integration into the ECS production chain, including process control and inline statistical process control (SPC) monitoring.
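
Inline statistical process control, mentioned in the last item, is classically implemented with control charts. The Python sketch below shows a minimal Shewhart-style chart under stated assumptions: the control limits are the mean plus or minus three sample standard deviations estimated from an in-control baseline, whereas textbook individuals charts usually estimate sigma from the average moving range, and production lines add run rules and multivariate or AI-based detectors. The function names and data are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def control_limits(baseline: Sequence[float], k: float = 3.0) -> Tuple[float, float, float]:
    """Return (LCL, centre line, UCL) estimated from in-control baseline data."""
    centre = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation (simplified sigma estimate)
    return centre - k * sigma, centre, centre + k * sigma


def out_of_control(samples: Sequence[float], limits: Tuple[float, float, float]) -> List[int]:
    """Return the indices of inline measurements that fall outside the control limits."""
    lcl, _, ucl = limits
    return [i for i, x in enumerate(samples) if x < lcl or x > ucl]


# Hypothetical inline measurements of a layer thickness (nm)
limits = control_limits([10.0, 10.2, 9.8, 10.1, 9.9])
print(limits)
print(out_of_control([10.05, 10.6, 9.4, 10.3], limits))
```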

2.4.2.1.3.2 Digitalisation: A paradigm shift in the fabrication of ECS from supplier/customer to partnership

- Big data and analytics to understand manufacturing drivers of reliability, based on a more extensive collection of manufacturing data coupled with statistical and ML-based analytical tools.

- AI methods that utilise connected worldwide data to uncover mechanisms affecting quality and reliability.

- Toolsets for quick and efficient reliability reporting via automated data analysis and by utilising in-line R&D technology data, online monitoring data gathered during manufacturing and field data after deployment.

2.4.2.1.3.3 Reliability: Tests and modelling

- Extensive reliability risk mapping (risk assessment, severity and occurrence of failure modes, minimisation of unknown reliability risks and previously unobserved failure modes) that addresses the mismatch between the harshness of operational environments and standard qualification cycles by enabling board-, module- and component-level testing aligned with customer use conditions.

- New test methods for automotive qualification targeting new technologies/materials/processes – e.g. copper sintering, hybrid bonding, nano via, gallium nitride (GaN) and silicon carbide (SiC).

- Reliability testing, material and part characterisation at basic, component, module and system levels.

- Adopt sustainable and energy-efficient reliability testing.

- Accelerated testing solutions.

- Focused reliability tests, based on modelling and in situ monitoring of critical parameters (e.g. a specific diffusion process at a critical bond/solder).

- Prediction of time to failure by combining reliability models with in situ signals.

- Development of further advanced methods to automatically assess the reliability of new materials, devices and packages.

- Support small and medium enterprises (SMEs) in the use of accelerated lifetime assessment and in enabling failure analysis.

2.4.2.1.3.4 Design for reliability: Virtual reliability assessment prior to the fabrication of physical HW

- Lifetime prediction methods at device, package and board/system level based on monitored parameters.

- Model-driven and physics-based design for quality and reliability.

- Automated synthesis tools for reliability models of hierarchical systems that capture dataflow dependencies, enabling virtual qualification of ECS.

- Development of infrastructure required for safe and secure information flow.

- Development of compact physics-of-failure (PoF) models at the component and system level that can be executed in situ at the system level, with metamodels as the basis of digital twins.

- Training and validation strategies for digital twins.

- Digital twin-based asset/machine condition prediction.

- Electronic design automation (EDA) tools to bridge the different scales and domains by integrating a virtual design flow.

- Virtual design of experiment as a best practice at the early design stage.

- Realistic material and interface characterisation that accounts for actual dimensions, fabrication process conditions, ageing effects, etc., covers all critical structures and generates statistically distributed interface strength data.

- Mathematical reliability models that also account for the interdependencies between the hierarchy levels (device, component, system).

- Mathematical modelling of competing and/or superimposed failure modes.

- New model-based reliability assessment in the era of automated systems.

- Development of fully harmonised methods and tools for model-based engineering across the supply chain:

  - Material characterisation and modelling, including effects of ageing.

  - Multi-domain physics-of-failure simulations.

  - Reduced modelling (compact models, metamodels, etc.).

  - Failure criteria for dominant failure modes.

  - Verification and validation techniques.

- Standardisation as a tool for model-based development of ECS across the supply chain:

  - Standardisation of material characterisation and modelling, including effects of ageing.

  - Standardisation of simulation-driven design for excellence (DfX).

  - Standardisation of model exchange formats within the supply chain using the functional mock-up interface (FMI) and functional mock-up units (FMUs), also for component models.

  - Simulation data and process management.

  - Initiate and drive the standardisation process for the above-mentioned points.

- Extend common design and process failure mode and effect analysis (FMEA) with reliability risk assessment features (“reliability FMEA”).

- Generic simulation flow for virtual testing under accelerated and operational conditions (virtual “pass/fail” approach).

- Automation of model build-up (databases of components, materials).

- Use of AI in model parametrisation/identification, e.g. extracting material models from measurement.

- Virtual release of ECS through referencing.
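
The modelling of competing failure modes listed above can be illustrated with the simplest textbook case, in which the modes act independently and whichever occurs first fails the part (a competing-risks or series model). Assuming, purely for illustration, that each mode $i = 1, \dots, k$ (e.g. solder fatigue, electromigration, delamination) follows a Weibull distribution with shape $\beta_i$ and scale $\eta_i$, the reliability and hazard rate of the part are:

$$
R_{\mathrm{sys}}(t) = \prod_{i=1}^{k} R_i(t) = \exp\!\left(-\sum_{i=1}^{k}\left(\frac{t}{\eta_i}\right)^{\beta_i}\right),
\qquad
h_{\mathrm{sys}}(t) = \sum_{i=1}^{k} h_i(t) = \sum_{i=1}^{k}\frac{\beta_i}{\eta_i}\left(\frac{t}{\eta_i}\right)^{\beta_i-1}.
$$

Superimposed or interacting mechanisms, where one mode accelerates another, violate the independence assumption, which is why dedicated mathematical models for them remain a research need.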

2.4.2.1.3.5 Prognostics and health management of ECS: Increase in functional safety and system availability

- Self-monitoring, self-assessment and resilience concepts for automated and autonomous systems based on the merger of PoF, data science and ML for safe failure prevention through timely predictive maintenance.

- Self-diagnostic tools and robust control algorithms validated by physical fault-injection techniques (e.g. by using end-of-life (EOL) components).

- Hierarchical and scalable health management architectures and platforms, integrating diagnostic and prognostic capabilities, from components to complete systems.

- Standardised protocols and interfaces for PHM facilitating deployment and exploitation.

- Monitoring test structures and/or monitor procedures on the component and module levels for monitoring temperatures, operating modes, parameter drifts, interconnect degradation, etc.

- Identification of early warning failure indicators and the development of methods for predicting the remaining useful life of the individual system under its actual use conditions.

- Development of schemes and tools using ML techniques and AI for PHM.

- Implementation of resilient procedures for safety-critical applications.

- Big sensor data management (data fusion, correlation discovery, secure communication) and the legal framework for data sharing between companies and countries.

- Distributed data collection, model construction, model update and maintenance.

- Digital twin concepts that provide quality and reliability metrics (e.g. key failure indicators, KFIs).

- Using PHM methodology for accelerated testing methods and techniques.

- Development of AI-supported failure diagnostic and repair processes to improve field data quality.

- AI-based asset/machine/robot life extension method development based on PHM.

- AI-based autonomous testing tool for verification and validation (V&V) of software reliability.

- Implementation of hardware-based security tokens (e.g. physical unclonable functions, true random number generators) with optimised randomness, reliability and uniqueness.

- Lifecycle management, including modelling of lifecycle costs.
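
The randomness, reliability and uniqueness of hardware security tokens such as physical unclonable functions (PUFs) are commonly quantified with Hamming-distance metrics. The Python sketch below computes three widely used figures of merit for a single challenge: uniqueness (mean inter-chip fractional Hamming distance, ideally 0.5), reliability (one minus the mean intra-chip distance between a reference response and re-measurements, ideally 1.0) and uniformity (fraction of ones, ideally 0.5, used here only as a simple proxy for randomness rather than a full statistical test suite). Representing responses as strings of '0' and '1', the function names and the example responses are assumptions for illustration.

```python
from itertools import combinations
from typing import Sequence


def hamming_fraction(a: str, b: str) -> float:
    """Fractional Hamming distance between two equal-length bit strings."""
    if len(a) != len(b):
        raise ValueError("responses must have equal length")
    return sum(x != y for x, y in zip(a, b)) / len(a)


def uniqueness(responses_per_chip: Sequence[str]) -> float:
    """Mean pairwise inter-chip distance for the same challenge (ideal: 0.5)."""
    pairs = list(combinations(responses_per_chip, 2))
    if not pairs:
        raise ValueError("need responses from at least two chips")
    return sum(hamming_fraction(a, b) for a, b in pairs) / len(pairs)


def reliability(reference: str, remeasured: Sequence[str]) -> float:
    """1 - mean intra-chip distance over repeated measurements (ideal: 1.0)."""
    return 1.0 - sum(hamming_fraction(reference, r) for r in remeasured) / len(remeasured)


def uniformity(response: str) -> float:
    """Fraction of '1' bits in a response (ideal: 0.5)."""
    return response.count("1") / len(response)


# Hypothetical 8-bit responses from three chips, and re-measurements of the first chip
chips = ["10110010", "01101001", "11000111"]
print(uniqueness(chips))
print(reliability("10110010", ["10110010", "10110011"]))
print(uniformity("10110010"))
```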

2.4.2.2 Major Challenge 2: Ensuring dependability in connected software

Connected software applications such as those used in the Internet of Things (IoT) differ significantly in their software architecture from the traditional reliable software used in industrial applications. The design of connected IoT software is based on traditional protocols originally designed for data communication between PCs and the internet, such as the transmission control protocol/internet protocol (TCP/IP), and on the re-use of software from the IT world, including protocol stacks, web servers and the like. As a result, the software components employed are not designed with dependability in mind: there is typically no redundancy and little provision for availability. If something does not work, end-users are used to restarting the device. Even if such failures are rare, this degree of availability is not sufficient for critical functions, and despite redundant hardware and back-up plans in ICT infrastructure, network outages still occur. It is therefore of the utmost importance that future connected software is either dependable by design or able to react reliably to infrastructure failures, so as to achieve higher software quality.

The vision is that networked systems will become as dependable and predictable for end-users as traditional industrial applications interconnected via dedicated signal lines. This means that the connected software components, architectures and technologies employed will have to be enriched to handle dependability during operation. Future dependable connected software will also be able to detect in advance if network conditions change, e.g. due to foreseeable transmission bottlenecks or planned maintenance. If outages do happen, the user or end application should receive clear feedback on how long the problem will last so that they can take appropriate measures. In addition, redundancy must be considered in the software architecture of critical applications. The availability of a European ecosystem for reliable software components will also reduce dependence on current ICT technologies from the US and China.

2.4.2.2.3.1 Dependable connected software architectures

In the past, reliable and dependable software was always deployed directly on specialised, reliable hardware. However, with the increased use of IoT, edge and cloud computing, critical software functions will increasingly run completely decoupled from the location of use (e.g. use cases in which the police want to stop self-driving cars remotely). Key topics include:

- Software reliability in the face of infrastructure instability.

- Dependable edge and cloud computing, including dependable and reliable AI/ML methods and algorithms.

- Dependable communication methods, protocols and infrastructure.

- Formal verification of protocols and mechanisms, including those using AI/ML.

- Monitoring, detection and mitigation of security issues on communication protocols.

- Quantum key distribution (“quantum cryptography”).

- Increasing software quality by AI-assisted development and testing methods.

- Infrastructure resilience and adaptability to new threats.

- Secure and reliable over-the-air (OTA) updates.

- Using AI for autonomy, network behaviour and self-adaptivity.

- Dependable integration platforms.

- Dependable cooperation of System of Systems (SoS).

This Major Challenge is tightly interlinked with the cross-sectional technology Chapter 2.2 Connectivity, where the focus is on innovative connectivity technologies. The dependability aspect covered within this challenge is complementary to that chapter, since dependability and reliability approaches can also be used for systems without connectivity.

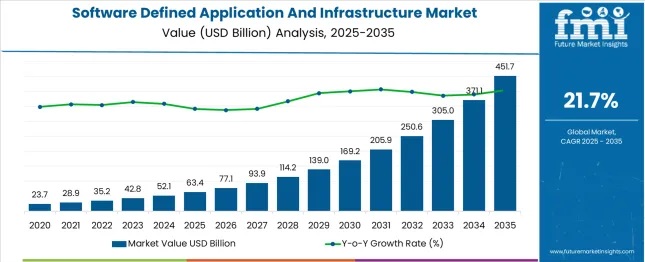

2.4.2.2.3.2 Dependable softwarisation and virtualisation technologies

Changing or updating software while retaining existing hardware is quite common in many industrial domains. However, keeping existing reliable software while changing the underlying hardware is difficult, especially for critical applications. By decoupling software functionality from the underlying hardware, softwarisation and virtualisation are two disruptive paradigms that can bring enormous flexibility and thus promote strong market growth. However, the softwarisation of network functions raises reliability concerns, as these functions will be exposed to faults in commodity hardware and software components:

- Software-defined radio (SDR) technology for highly reliable wireless communications with higher immunity to cyber-attacks.

- Network functions virtualisation infrastructure (NFVI) reliability.

- Reliable containerisation technologies.

- Resilient multi-tenancy environments.

- AI-based autonomous testing for V&V of software reliability, including the software-in-the-loop (SiL) approach.

- Testing tools and frameworks for V&V of AI/ML-based software reliability, including the SiL approach.

2.4.2.2.3.3 Combined SW/HW test strategies

Unlike hardware, software does not degrade over time unless it is modified. The most effective approach for achieving higher software reliability is therefore to reduce the likelihood of latent defects in the released software. Mathematical functions that describe fault detection and removal phenomena in software have begun to emerge. These software reliability growth models (SRGMs), in combination with Bayesian statistics, need further attention within the hardware-oriented reliability community over the coming years:

- Ensure that HW failure modes are considered in the software requirements definition.

- Ensure that design characteristics do not cause the software to overstress the HW, or adversely change failure-severity consequences when a failure occurs.

- Establish techniques that can combine SW reliability metrics with HW reliability metrics.

- Develop efficient (hierarchical) test strategies for combined SW/HW performance of connected products.
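
The SRGMs mentioned above can be illustrated with the Goel-Okumoto model, one well-known non-homogeneous Poisson process (NHPP) SRGM chosen here as an example, whose mean value function is m(t) = a(1 - e^(-bt)). The Python sketch below takes the parameters as given; in practice a and b would be estimated from test-failure data, for instance by maximum likelihood or, as the text suggests, by Bayesian inference with priors on a and b. The last function illustrates combining SW and HW reliability metrics in the simplest possible way, assuming independent failures in series; real combined analyses often need to relax that assumption. Function names and parameter values are hypothetical.

```python
import math


def go_mean_value(t: float, a: float, b: float) -> float:
    """Goel-Okumoto NHPP: expected cumulative number of faults detected by time t.
    a = expected total number of latent faults, b = per-fault detection rate."""
    return a * (1.0 - math.exp(-b * t))


def remaining_faults(t: float, a: float, b: float) -> float:
    """Expected number of faults still latent after testing for time t."""
    return a - go_mean_value(t, a, b)


def software_reliability(x: float, t: float, a: float, b: float) -> float:
    """Probability of no software failure in (t, t + x] after testing up to time t."""
    return math.exp(-(go_mean_value(t + x, a, b) - go_mean_value(t, a, b)))


def combined_reliability(r_sw: float, r_hw: float) -> float:
    """Simplest SW/HW combination: independent failures in series (an assumption)."""
    return r_sw * r_hw


# Hypothetical parameters, e.g. as estimated from test data
a, b = 120.0, 0.02  # faults, 1/test-hour
print(remaining_faults(100.0, a, b))
print(combined_reliability(software_reliability(10.0, 100.0, a, b), 0.99))
```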

Dependability in connected software is strongly connected with other chapters in this document. In particular, additional challenges are examined in the following chapters:

- Chapter 1.3 Embedded Software and Beyond: Major Challenge 1 (MC1) efficient engineering of software; MC2 continuous integration of embedded software; MC3 lifecycle management of embedded software; and MC6 Embedding reliability and trust.

- Chapter 1.4 System of Systems: MC1 SoS architecture; MC4 Systems of embedded and cyber-physical systems engineering; and MC5 Open system of embedded and cyber-physical systems platforms.

- Chapter 2.1 Edge Computing and Embedded Artificial Intelligence: MC1: Increasing the energy efficiency of computing systems.

- Chapter 2.2 Connectivity: MC4: Architectures and reference implementations of interoperable, secure, scalable, smart and evolvable IoT and SoS connectivity.

- Chapter 2.3 Architecture and Design: Method and Tools: MC3: Managing complexity.

2.4.2.3 Major Challenge 3: Ensuring cybersecurity and privacy

We have witnessed a massive increase in pervasive and potentially connected digital products in our personal, social and professional spheres, enhanced by the new features of 5G networks and beyond. Connectivity provides better flexibility and usability of these products in different sectors, with a tremendous growth in sensitive and valuable data. Moreover, the variety of deployments and configuration options, and the growing number of sub-systems with increasing dynamicity and variability, raise the overall complexity. In this scenario, new security and privacy issues must be addressed, and the continuously evolving threat landscape must be taken into account. This should include both software and hardware approaches to data security. New approaches, methodologies and tools are required for risk and vulnerability analysis, threat modelling for security and privacy, and threat information sharing and reasoning. Artificial intelligence (e.g. machine learning, deep learning and ontologies) not only promotes pervasive intelligence supporting daily life, industrial developments, the personalisation of mass products around individual preferences and requirements, and efficient and smart interaction among IoT devices in all types of service, but also fosters automation to mitigate such complexity and avoid human error. This is particularly relevant in critical sectors such as energy, healthcare and digital infrastructures, where the convergence of IT and OT has increased attack surfaces. In these domains, cybersecurity research must address the protection of vital assets from both conventional and hybrid attacks, taking a dual-use perspective that considers threats to civilian and strategic infrastructure alike. This requires strong perimeter defence against external threat actors and advanced intrusion detection mechanisms.

Embedded and distributed AI functionality is growing rapidly in both (connected) devices and services. AI-capable chips will also enable edge applications, allowing decisions to be made locally at the device level. Resilience to cyber-attacks is therefore of utmost importance. AI can act directly on the behaviour of a device, potentially affecting its physical operation and raising safety concerns. AI systems rely on software and hardware that can be embedded in components, but also on the data generated and used to make decisions. Cyber-attacks such as data poisoning or adversarial inputs could cause physical harm and/or violate privacy. To address this issue, adaptable protection mechanisms and robust security measures should be deployed at both the device and network levels, allowing the integration of current and future technologies. The development of AI should therefore go hand in hand with frameworks that assess security and safety, to guarantee that AI systems developed for the EU market are safe to use, trustworthy, reliable and remain under control (cf. Chapter 1.3 “Embedded Software and beyond” for the quality of AI used in embedded software when considered as a technology interacting with other software components). In addition, the tremendous increase in computational power and the reduced communication latency of components and systems, coupled with hybrid and distributed architectures, call for a rethink of many “traditional” approaches to safety and security and of their expected performance, exploiting AI and ML. Data protection should rely not only on complex software encryption algorithms, but also on hardware-level safeguards that leverage emerging materials and techniques to protect information at that level.

Approaches that continuously evaluate the compliance of systems of systems with given security standards (e.g. IEC 62443, which uses technical security controls) would help guarantee a homogeneous level of security across a multi-stakeholder ecosystem, challenging tech giants whose platforms provide an overall level of security but often result in vendor lock-in. Early approaches have resulted in products such as Lynis (https://cisofy.com/lynis/), which continuously evaluate systems against a limited set of product-specific policies. However, the rise of powerful language models and code generation may allow evaluation machinery to be created dynamically, supporting compliance assessment against any given standard.

The combination of composed digital products and AI highlights the importance of trustworthy systems that weave together privacy and cybersecurity with safety and resilience. Automated vehicles, for example, are adopting an ever-expanding combination of Advanced Driver Assistance Systems (ADAS), developed to increase safety and driving comfort by exploiting different types of sensors, devices and on-board computers (e.g. Global Positioning System (GPS) receivers, radar, lidar and cameras). To complement ADAS, Vehicle-to-X (V2X) communication technologies are gaining momentum. Cellular V2X communication enables vehicles to communicate with other vehicles, infrastructure and their surrounding environment, exchanging not only basic safety messages to avoid collisions but also, as the 5G standards evolve, high-throughput sensor sharing, intended-trajectory sharing, coordinated driving and autonomous driving. Connected autonomous vehicle scenarios offer many advantages in terms of safety, fuel consumption and CO2 emission reduction, but the increased connectivity, number of devices and automation expose these systems to several crucial cyber and privacy threats, which must be addressed and mitigated.

Autonomous vehicles represent a truly disruptive innovation for travel and transportation, and should be able to guarantee the confidentiality of driver and vehicle information. These vehicles should also avoid obstacles, identify failures (if any) and mitigate them, and prevent cyber-attacks while staying safely operational (at reduced functionality), whether through human-initiated intervention, automatic onboard action or remote intervention by law enforcement in the case of any failure, security breach, sudden obstacle, crash, etc.

In this scenario, the main cybersecurity and privacy challenges are:

- Interoperable security and privacy management in heterogeneous systems including cyber-physical systems, IoT, virtual technologies, clouds, communication networks, autonomous systems.

- Real-time monitoring and privacy and security risk assessment to manage the dynamicity and variability of systems.

- Developing novel privacy preserving identity management and secure cryptographic solutions.

- Novel approaches to hardware security vulnerabilities and other system weaknesses, such as Spectre and Meltdown, or side-channel attacks.

- Developing new approaches, methodologies and tools empowered by AI in all its forms (e.g. machine learning, deep learning, ontologies).

- Investigating deep verification approaches that also cover open-source hardware, in synergy with the implementation of the security-by-design paradigm.

- Investigating the interworking among safety, cybersecurity, trustworthiness, privacy and legal compliance of systems.

- Evaluating the impact of the adopted solutions in terms of sustainability and the Green Deal.

- Developing advanced data protection techniques that take advantage of unique physical phenomena – such as physical unclonable functions (PUFs) – to address the inherent vulnerabilities of conventional password-based systems to hacking.

The cornerstone of our vision rests on the following four pillars. First, a robust root-of-trust system with unique identification, enabling uninterrupted security from the hardware level right up to the applications, including AI, involved in accomplishing the system’s mission in dynamic, unknown environments. This has a tremendous impact on mission-critical systems, which raise many reliability, quality, safety and security concerns. Second, protection of EU citizens’ privacy and security while maintaining usability and operating in a competitive market where industrial intellectual property protection should also be considered. Third, the proposed technical solutions should contribute to the Green Deal ambition, for example by reducing their environmental impact. Finally, proof-of-concept demonstrators that can represent the growing complexity of modern SoS ecosystems while ensuring the correct level of safety and privacy against cyber-attacks in real-world autonomous systems (e.g. with an emphasis on autonomous and driverless vehicles). The vision is based on the following points:

- Identification of threats based on quantitative severity and probability, with the goal of mitigating these threats through a clear risk management strategy.

- Efficient techniques for the detection of cyber-attacks and anomalies.

- Adoption of resilient techniques on devices, networks and systems to mitigate the effect of cyber-attacks, from the device level (e.g. embedded networks) and high-level data-flow networks (e.g. those associated with SoS or DSoS) to the design of appropriate hardware.

- New certification methods, tools and demonstrators for heterogeneous systems to build trust, reliability and transparency for safety and security assurance, while respecting end-user privacy.

- Tracking of ethical usage of personal data and implicit information – for example, tracking driving styles if vehicles share information to mitigate traffic congestion.

- Tools and techniques for detecting, mitigating and defending against hybrid attacks (e.g. security/safety, security/privacy and vice versa), but also investigating how quality, reliability, safety and security, when considered together, contribute synergistically to the trustworthiness of the system.

- Models, analyses, methods and security frameworks for security-by-design and privacy-by-design approaches that allow measurable properties of the system and thus identify quality metrics.

- Mechanisms to control levels of trust amongst people and systems and to assess the ethical impact of systems created with collaborative AI. When several devices collaborate, they should be able to assess the level of trust in each other before collaborating.

- Preventing malicious AI models and defining criteria for trustworthy AI models, to identify AI models that have been tweaked to respond inaccurately or with bias.

- Interplay between security, privacy and safety, to identify when a security property can become a safety or privacy issue and vice versa. Define methods to assess resilient safety and security systems in which, if required, quality remains acceptable even when security/privacy is degraded.

- Ensuring the trustworthiness of software and hardware components, protocols, AI and data used for safety-critical applications.
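
The first point above, identifying threats by quantitative severity and probability, can be illustrated with a minimal Python sketch that scores each threat as severity multiplied by likelihood, ranks the threats and separates those above an acceptance threshold, which require mitigation, from those that can be accepted. The scales, the multiplicative score, the threshold and the example threats are hypothetical; standards such as ISO/SAE 21434 define their own, more elaborate risk-determination methods.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Threat:
    name: str
    severity: int      # hypothetical scale: 1 (negligible) .. 5 (catastrophic)
    likelihood: float  # estimated probability of occurrence per operating period

    @property
    def risk(self) -> float:
        return self.severity * self.likelihood


def prioritise(threats: Sequence[Threat], acceptable_risk: float) -> Tuple[List[str], List[str]]:
    """Rank threats by risk; return (names to mitigate, names to accept)."""
    ranked = sorted(threats, key=lambda t: t.risk, reverse=True)
    mitigate = [t.name for t in ranked if t.risk > acceptable_risk]
    accept = [t.name for t in ranked if t.risk <= acceptable_risk]
    return mitigate, accept


# Hypothetical threats for a connected vehicle
threats = [
    Threat("OTA update tampering", severity=5, likelihood=0.1),
    Threat("GPS spoofing", severity=4, likelihood=0.2),
    Threat("Infotainment denial of service", severity=1, likelihood=0.3),
]
print(prioritise(threats, acceptable_risk=0.4))
```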

2.4.2.3.3.1 Trustworthiness

- Interoperable security and privacy management over heterogeneous systems (including cyber-physical systems, IoT virtual technologies, clouds, communication networks, autonomous systems).

- Security certification of heterogeneous systems that run different software (e.g. AI), including certification of heterogeneous computing platforms and of hardware with multi-core CPUs.

- Safe fault response in case of cyber-attacks or damage.

- Evaluation of cybersecurity and privacy risks and impacts.

- Design of dependable AI-based systems that also take security threats into account.

- Provision of cooperation models and trust evaluation for AI agents, enabling robust collaboration by accurate assessment of trustworthiness and capabilities of partners.

- Incorporating the safety requirements into the cybersecurity assessment framework.

2.4.2.3.3.2 Security and privacy-by-design

- Security and privacy-by-design methods.

- Data storage security and privacy protection strategies for cloud, edge and fog computing, in particular where AI/ML is deployed.

- Secure lifecycle of system/AI and security certification.

- Security and privacy-aware applications and hardware design.

- Automated monitoring for network anomalies and threats, for example via AI-based network log analysis.

- Development of methods and technologies for resilient memory devices.

- Development of novel solutions for digital product passports that meet anti-counterfeiting requirements.
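Automated monitoring for network anomalies can start from a simple baseline before AI-based log analysis is introduced. The sketch below is such a statistical baseline, not an AI method. It computes a rolling z-score over the number of log events in each interval. The function name `flag_anomalies`, the window length of 10 intervals and the threshold of 3 standard deviations are assumptions made for illustration.

```python
from collections import deque
from statistics import mean, pstdev
from typing import List, Sequence


def flag_anomalies(
    counts: Sequence[float], window: int = 10, z_threshold: float = 3.0
) -> List[int]:
    """Return indices of intervals whose event count is anomalous.

    An interval is flagged when its count deviates from the mean of the
    preceding `window` intervals by more than `z_threshold` population
    standard deviations. Nothing is flagged until a full window is available.
    """
    history: deque = deque(maxlen=window)  # sliding baseline of recent counts
    anomalies: List[int] = []
    for i, value in enumerate(counts):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma == 0:
                # Perfectly flat baseline: any change at all is treated as anomalous.
                if value != mu:
                    anomalies.append(i)
            elif abs(value - mu) / sigma > z_threshold:
                anomalies.append(i)
        # Simplification: every value, flagged or not, joins the baseline.
        history.append(value)
    return anomalies


if __name__ == "__main__":
    # Hypothetical per-minute counts of failed-login log entries.
    per_minute = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 500, 100]
    print(flag_anomalies(per_minute))
```

In this example, the baseline is stable at about 100 events per minute. The burst of 500 events is flagged, while the normal interval that follows it is not. Flagged values are still appended to the history, so a sustained attack gradually becomes part of the baseline. A real monitor would need to handle this, for example by excluding flagged intervals from the baseline.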

2.4.2.3.3.3 Ensuring both safety and security properties

2.4.2.4 Major Challenge 4: Ensuring of safety and resilience

Continually increasing the safety and resilience of ECS calls for continuous industrial research. ECS are at the core of many products used in everyday life, industrial applications, and the overall infrastructure. However, the landscape for safety-critical systems is evolving, with new methodologies and regulations such as the European Union AI Act, ECES R&D (intelligence) and IEC publications for AI in safety critical domains. These frameworks emphasise risk-based classification, transparency, robustness, traceability, and fairness – all key requirements for AI-integrated systems.

At the same time, the ECS domain faces new challenges driven by advances in AI and the growing complexity of interconnected systems. The question is: to what extent can we leverage AI technologies in safety-critical environments, and which hurdles must be resolved?

AI applications are rapidly and naturally making their way into the digitalisation of ubiquitous electronic components and systems (ECS), stretching traditional safety analysis. To govern this, the international community has, over the last couple of years, published and updated a series of standards, technical specifications and regulations related to safety and AI.

In 2024, the European Parliament and the Council of the European Union published the EU AI Act. Although this regulation does not apply to “any research, testing or development activity regarding AI systems or AI models” [art. 2(8)], it indirectly applies to industrial research once the resulting product is placed on the market. Moreover, the AI Act highlights the importance of guidelines and principles for safety, linking safety to the risk-based AI classification system as well as to robustness, resilience, transparency, traceability and explainability [e.g. art. 27].

At the same time, the international standardisation subcommittee ISO/IEC JTC 1/SC 42 has published a set of standards and technical specifications focused on domain-independent applications. Even in the nuclear domain, certainly one of the most restrictive and conservative, the industrial community is investigating, amid lively internal debate, how to use AI-based techniques, identify their limits and study their impact on safety (see, e.g., the activities and works of IEC TC 45/SC 45A and the International Atomic Energy Agency).

The current landscape of regulations and standardisation concerning safety and AI underlines the need for ongoing industrial research into the safety and resilience of ECS, to deliver answers and innovations. This major challenge is therefore devoted to understanding and developing the innovations required to increase the safety and resilience of systems in compliance with the AI Act and other related standards. It does so by tackling key focus areas that involve cross-cutting considerations such as legal concerns and user abilities, and by ensuring safety-related properties under a chiplet-based approach (cf. Introduction).

The vision points to the development of safe and resilient autonomous systems in dynamic environments, with a continuous chain of trust from the hardware level up to the applications involved in accomplishing the system’s mission. Our vision considers physical limitations (battery capacity, quality of the sensors used in the system, hardware processing power needed for autonomous navigation features, etc.) and interoperability (which could be achieved, for example, via open-source hardware). It also considers optimising the energy usage and system resources of safety-related features to support the sustainability of future systems.

Civilian applications of (semi-)autonomous mobile systems are increasing significantly. This trend represents a great opportunity for European economic growth. However, unlike for traditional high-integrity systems, the assumption that only expert operators will handle the final product is incompatible with the large-scale adoption of the new generation of autonomous systems.

Civilian applications therefore inherently raise safety concerns. In the case of an accident or damage (for example, after uploading a piece of software to an AI system), liability should be clearly traceable, and so should the certification/qualification of the AI systems involved.

In addition to the key focus areas below, the challenges cited in Chapter 2.3 on Architecture and Design: Methods and Tools are also highly relevant for this topic, as are those in Chapter 1.3 on Embedded Software and Beyond.

2.4.2.4.3.1 Dynamic adaptation and configuration, self-repair capabilities, (decentralised instrumentation and control for) resilience of complex and heterogeneous systems

The expected outcome is systems that are resilient under physical constraints and able to adapt their behaviour in dynamic environments:

- Responding to uncertain information based on digital twin technology, run-time adaptation and redeployment based on simulations and sensor fusion.

- Automatic, low-latency self-adaptation to dynamic and heterogeneous environments.

- Architectures (including, but not limited to, RISC-V-based ones) that support distribution, modularity and fault containment units to isolate faults, possibly with run-time component verification.

- Use of AI in the design process – e.g. using ML to learn fault injection parameters and test priorities for test execution optimisation.

- Develop explainable AI models for human interaction, systems interaction and certification.

- Resource management of all system components to accomplish the system’s mission in a safe and resilient way, with particular consideration given to minimising the energy usage and system resources of safety-related features to support the sustainability of future cyber-physical systems.

- Identify and address transparency and safety-related issues introduced by AI applications.

- Support for dependable dynamic configuration and adaptation/maintenance (including, for example, patching to adapt to security countermeasures). This should help cope with components that appear and disappear as ECS devices connect and disconnect, and with communication links that are established and released depending on the actual availability of network connectivity.

- Concepts for SoS integration, including legacy system integration.

2.4.2.4.3.2 Modular certification of trustable systems and liability

The expected outcome is clear traceability of liability during integration and in the case of an accident:

- Explicit workflows for automated, continuous and layered certification/qualification, both when designing the system and for checking certification/qualification or dynamic safety contracts at run time, to ensure continued trust in dynamic adaptive systems operating in uncertain and/or dynamic environments.

- Concepts and principles, such as contract-based co-design methodologies, fault identification and failure analysis (FIFA), and consistency management techniques in multi-domain collaborations for trustable integration.

- Certificates of extensive testing, new code coverage metrics (e.g. derived from mutation testing), and formal methods providing guaranteed trustworthiness.

- Workflows for guaranteeing compliance with the AI Act.

- Ensuring trustworthy electronics, including trustworthy design IPs (e.g. source code, documentation, verification suites) developed according to auditable and certifiable development processes that give high verification and certification assurance (safety and/or security) for these IPs.
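The sketch below illustrates how a coverage metric can be derived from mutation testing. It computes a mutation score, i.e. the fraction of deliberately injected faults (mutants) that the test suite detects. The function under test `is_overtemp`, its 85 °C limit and the three hand-written mutants are all hypothetical. Real mutation-testing tools generate mutants automatically and usually exclude equivalent mutants from the denominator.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical function under test: a safety check that reports over-temperature.
def is_overtemp(temp_c: int, limit_c: int = 85) -> bool:
    return temp_c > limit_c


# Hand-written mutants, each a single small change to is_overtemp (limit fixed at 85).
MUTANTS: Dict[str, Callable[[int], bool]] = {
    "gt_to_ge": lambda t: t >= 85,       # relational operator replaced
    "gt_to_lt": lambda t: t < 85,        # relational operator inverted
    "limit_plus_one": lambda t: t > 86,  # constant perturbed
}

Case = Tuple[int, bool]  # (input temperature, expected result)


def mutation_score(
    tests: List[Case], mutants: Dict[str, Callable[[int], bool]] = MUTANTS
) -> float:
    """Fraction of mutants killed, i.e. producing a wrong result on at least one test."""
    # The original implementation must pass every test, otherwise the suite itself is wrong.
    for temp, expected in tests:
        if is_overtemp(temp) != expected:
            raise ValueError(f"test ({temp}, {expected}) fails on the original code")
    killed = sum(
        1
        for mutant in mutants.values()
        if any(mutant(temp) != expected for temp, expected in tests)
    )
    return killed / len(mutants)


if __name__ == "__main__":
    weak = [(90, True), (20, False)]
    with_boundaries = weak + [(85, False), (86, True)]
    print(mutation_score(weak), mutation_score(with_boundaries))
```

The suite `[(90, True), (20, False)]` exercises both outcomes of the comparison but scores only 1/3, because the `>=` and the perturbed-limit mutants survive. Adding the boundary cases `(85, False)` and `(86, True)` raises the score to 1.0. Statement or branch coverage alone does not reveal this kind of weakness in a test suite.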

2.4.2.4.3.3 Safety aspects related to the human/system interaction

The expected outcome is to ensure the safety of humans and the environment during nominal and degraded operation in the working environment (cf. Major Challenge 5 below):

- Understanding the nominal and degraded behaviour of a system, with/without AI functionality.

- Minimising the risk of human or machine failures during the operating phases.

- Ensuring that humans can safely interface with machines in complex systems and SoS, and also that the machine can prevent unsafe operations.

- New self-learning safety methods to ensure safe system operation in complex systems.

- Ensuring safety in machine-to-machine interaction.

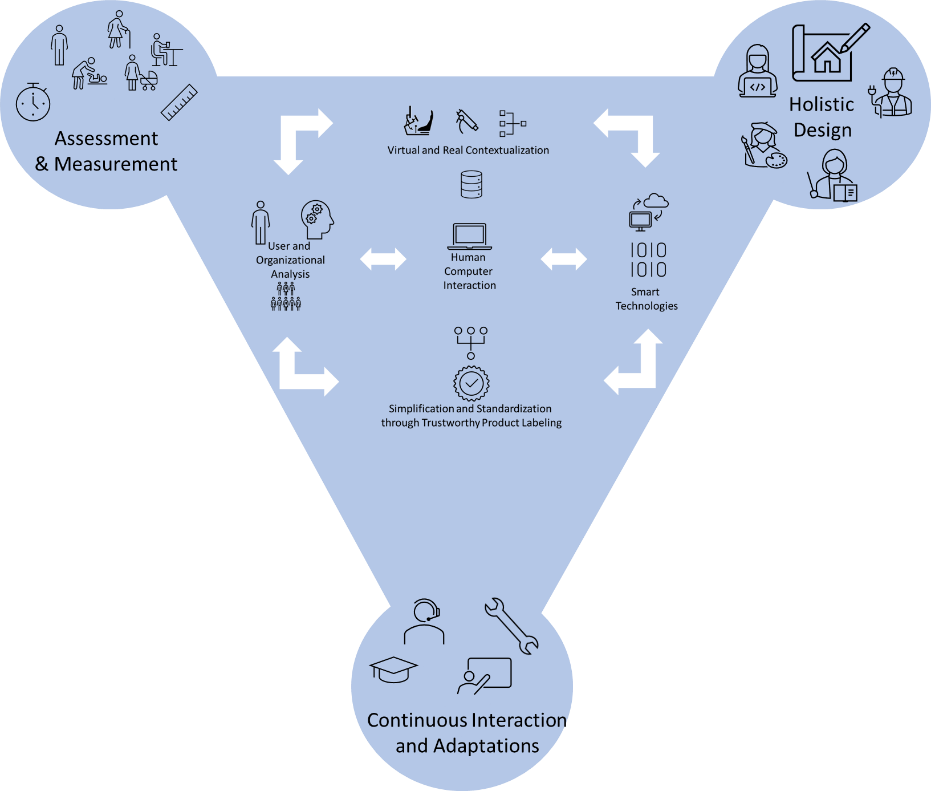

2.4.2.5 Major Challenge 5: Human systems integration

This ECS SRIA roadmap aligns the RD&I for electronic components with societal needs and challenges. These societal benefits motivate the foundational and cross-sectional technologies as well as the concrete applications in the research agenda. However, many technological innovations occur at the subsystem level and are not directly linked to societal benefits until they are assembled and arranged into larger systems. Such larger systems usually require human users and beneficiaries to use them and thereby achieve the intended societal benefits. Consequently, human users and beneficiaries commonly remain largely invisible during subsystem development. Only once subsystems are assembled into an operational system do the interactions with human users become apparent. At this point, however, it is often too late to make substantial changes to the technological subsystems. The result can be partial or complete failure to achieve market acceptance and the intended societal benefits. To avoid such expensive and resource-intensive failures, Human Systems Integration (HSI) efforts aim to accompany technological maturation with the maturation of Human Readiness Levels (HRL). Technological maturation is often measured in Technology Readiness Levels (TRL). Failures to reach high HRLs alongside high TRLs have been observed in various domains, such as the military, space travel, and aviation. HSI efforts to achieve high HRLs therefore need to be appropriately planned, prepared, and coordinated as part of technological innovation cycles. As this is currently rarely done in industrial R&D activities, this chapter describes the HSI challenges and outlines a vision to address them.

HSI aims to align technological innovation with human needs, requirements, and capabilities. Unfortunately, there is no clear-cut general solution for HSI, as its specific requirements depend on the application domain. Consequently, technological innovations need to involve human factors at all stages, from the conceptualisation of an idea to the operation of a deployed system. This aligns with methods from Human-Computer Interaction (HCI) and the Human Factors discipline. HSI thus does not require the invention of entirely new methods, but rather the adaptation of existing methods to the context of research, development, and innovation in (electronic) systems engineering. An operational HSI effort requires solid organisational structures for coordinating the domain-wide and system-wide factors affected by human users and beneficiaries. HSI activities should therefore be planned, resourced, and staffed at the same level as technological advances and, as such, form an integral part of systems engineering. Only then can HSI reach its objective of being part of a holistic system design in which technical, social, ethical, legal, organisational, and economic aspects align with each other.

2.4.2.5.3.1 Conceptual understanding of human user and human beneficiaries

The expected outcome is greater alignment of the expectations of human users and human beneficiaries with the problems addressed by technological systems:

- Understanding stakeholder ecosystems and societal consequences.

- Understanding roles within contexts based on user archetypes and usage scenarios.

- Understanding user and beneficiary abilities and disabilities, including central and peripheral perception and cognition, as well as physical and cognitive abilities and limitations.

2.4.2.5.3.2 A framework for describing and measuring HRL

The expected outcome is reliable modelling and assessment of human readiness levels:

- Development of standardised frameworks that allow detailed and comparable descriptions of human readiness levels.

- Improved methods for modelling and measuring human readiness levels.

- Standardisation and normalisation of HRL.

2.4.2.5.3.3 Human-centred design and evaluation

The expected outcome is robust methods and tools for human-centred design and evaluation:

- Development of frameworks for human factors that can be included during the design.

- Evaluation and improvement of current methods for user-centred design.

- Application-specific standardisation of HSI.

- Improved methods for human factors testing and validation.

2.4.2.5.3.4 Integration of human factors into systems engineering

The expected outcome is enhanced technology adoption by integrating human factors into system engineering:

- Development of methods to integrate human factors from early stages into system engineering.

- Methods for human factors project management on the same level as technical project management.

- Methods to quantify the impact of HRL to systems.

- Method development to convert HRL into TRL.

2.4.2.5.3.5 Technical and organisational tools and infrastructure for HSI

The expected outcome is better technical support and organisational processes for HSI:

- Development of tooling and infrastructure for capturing, storing, and providing human factors data across the R&D lifecycle. This includes gathering real-world human-technology interactions in realistic operational environments for evaluating the operational HRL.

- Embedding HSI into safety/security processes and regulatory compliance tools.

- Methods and tools to ensure longitudinal HSI.

- Development of HSI verification and validation tools and methods.

- Development of system design tools that embed HSI aspects.

- Systematise methods for user, context, and environment assessments, and the sharing of information for user-requirement generation. Such methods are necessary to allow user-centred methods to achieve an impact on overall product design.

- Develop simulation and modelling methods for the early integration of humans and technologies. The virtual methods link early assessments, holistic design activities, and lifelong product updates, and facilitate convergence among researchers, developers and stakeholders.

- Establish multi-disciplinary research and development centres and sandboxes. Interdisciplinary research and development centres allow for the intermingling of experts and stakeholders for cross-domain coordinated products and life-long product support.

| Major Challenge | Topic | Short Term (2026–2030) | Medium Term (2031–2035) | Long Term (2036+) | Notes |
|---|---|---|---|---|---|
| Major Challenge 1: Ensuring HW quality and reliability | Topic 1.1: quality: in situ and real-time assessments | Create an environment to fully exploit the potential of data science to improve efficiency of production through smart monitoring to facilitate the quality of ECS and reduce early failure rates | Establish a procedure to improve future generation of ECS based on products that are currently in the production and field → feedback loop from the field to design and development | Provide a platform that allows for data exchange within the supply chain while maintaining IP rights | |
| | Topic 1.2: reliability: tests and modelling | Development of methods and tools to enable the third generation of reliability – from device to SoS | Implementation of a novel monitoring concept that will empower reliability monitoring of ECS | Identification of the 80% of all field-relevant failure modes and mechanisms for the ECS used in autonomous systems | |
| | Topic 1.3: design for (EoL) reliability: virtual reliability assessment prior to the fabrication of physical HW | Continuous improvement of EDA tools, standardisation of data exchange formats and simulation procedures to enable transfer models and results along full supply chain | Digital twin as a major enabler for monitoring of degradation of ECS | AI/ML techniques will be a major driver of model-based engineering and the main contributor to shortening the development cycle of robust ECS | |
| | Topic 1.4: PHM of ECS: increase in functional safety and system availability | Condition monitoring will allow for identification of failure indicators for main failure modes | Hybrid PHM approach, including data science as a new potential tool in reliability engineering, based on which we will know the state of ECS under field loading conditions | Standardisation of PHM approach along all supply chains for distributed data collection and decision-making based on individual ECS | |
| Major Challenge 2: Ensuring dependability in connected software | Topic 2.1: dependable connected software architectures | Development of necessary foundations for the implementation of dependable connected software to be extendable for common SW systems (open source, middleware, protocols) | Set of defined and standardised protocols, mechanisms and user-feedback methods for dependable operation | Widely applied in European industry | |
| | Topic 2.2: dependable softwarisation and virtualisation technologies | Create the basis for the increased use of commodity hardware in critical applications | Definition of softwarisation and virtualisation standards, not only in networking but in other applications such as automation and transport | Efficient test strategies for combined SW/HW performance of connected products | |
| | Topic 2.3: combined SW/HW test strategies | Establish SW design characteristics that consider HW failure modes | Establish techniques that combine SW reliability metrics with HW reliability metrics | | |
| Major Challenge 3: Ensuring privacy and cybersecurity | Topic 3.1: trustworthiness | Root of trust system, and unique identification enabling security without interruption from the hardware level up to applications, including AI | Definition of a framework providing guidelines, good practices and standards oriented to trust | Developing rigorous methodology supported by evidence to prove that a system is secure and safe, thus achieving a greater level of trustworthiness | |
| | Topic 3.2: security and privacy-by-design | Establishing a secure and privacy-by-design European data strategy and data sovereignty | Ensuring the protection of personal data against potential cyber-attacks... ensuring performance and AI development by guaranteeing GDPR compliance | Provide a platform that allows for data exchange within the supply chain while maintaining IP rights | |
| | Topic 3.3: ensuring both safety and security properties | Guaranteeing information properties under cyber-attacks (quality, coherence, integrity, reliability, etc.) independence, geographic distribution, emergent behaviour and evolutionary development | Ensuring the nominal and degraded behaviour of a system when underlying security is breached; evaluating contextualisation effects on safety/security | Identification of the 80% of all field-relevant failure modes and mechanisms for the ECS used in autonomous systems | |
| Major Challenge 4: Ensuring safety and resilience | Topic 4.1: safety and resilience of (autonomous AI) systems in dynamic environments | Resource management of all system components... Use of AI in design (e.g., ML for fault injection optimisation) | Apply methods for user context/environment assessments and sharing of information for stakeholder requirement generation | Develop standard processes for stakeholder context and environment assessments; develop educational programmes to increase common stakeholder competences | |
| | Topic 4.2: modular certification of trustable systems and liability | Contract-based co-design methodologies; consistency management techniques in multi-domain collaborations | Definition of a strategy for modular certification under uncertain environments; consolidation of frameworks for trust; ensuring compliance with AI standards | Ensuring liability | |
| | Topic 4.3: dynamic adaptation and configuration, self-repair capabilities, resilience of complex systems | Support for dependable dynamic configuration; SoS integration including legacy systems; fault injection, digital twin, sensor fusion; architectures supporting modularity and fault containment | Guaranteeing a system’s coherence while considering different requirements and applied solutions across phases | | |
| | Topic 4.4: safety aspects related to HCI | Minimising risk of human/machine failures; ensuring safe human–machine interaction; ensuring safety in machine-to-machine interaction | Develop prototypical use cases enabling interdisciplinary collaboration for cross-domain coordinated products and lifelong support | | |
| Major Challenge 5: Human–systems integration | Topic 5.1: skills & competences for user/context/environment assessments | Establish research lighthouses; investigate necessary knowledge, skills and practices; establish stakeholder competence-capturing techniques | Develop policy recommendations for education, tools, processes, and organisational prerequisites to promote HSI | Develop policies, standards, funding schemes, excellence centres, and organisation certifications to promote HSI | |
| | Topic 5.2: simulation and modelling for early integration of humans and technologies | Create tools linking early assessments, holistic design, product updates; establish stakeholder competence-capturing tools; quantify human acceptance/trust; build behavioural metric databases | Establish centres of excellence for HSI; international harmonisation; from mid-term merge with Topic 5.3 | Establish holistic design and systemic thinking in European technical and social-science education programmes | |
| | Topic 5.3: multi-disciplinary R&D centres and sandboxes | Establish interdisciplinary research centres; establish tools/processes to update stakeholder competences | Centres of excellence for HSI (merged with Topic 5.2 mid-term) | Education and training programmes to promote knowledge from centres of excellence | |